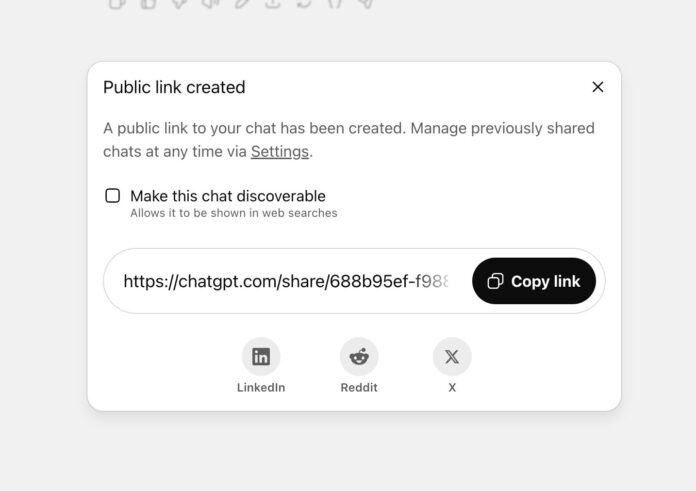

OpenAI recently removed a ChatGPT feature that allowed users' shared conversations to be indexed by Google, exposing sensitive information. Making a chat public was opt-in, but many users did not understand the implications of that choice. As a result, conversations containing names, job roles, and even internal corporate strategies were indexed by search engines, raising serious privacy concerns. Although OpenAI responded swiftly, the incident underscores the risks of using AI tools in professional settings. Experts recommend that users regularly review their ChatGPT settings to manage shared links and protect confidential information. The episode also shows why AI products need privacy-first design to prevent inadvertent exposure of sensitive data. Companies, for their part, should train staff in safe collaboration practices for generative AI tools and enforce strict internal policies to safeguard company information.
