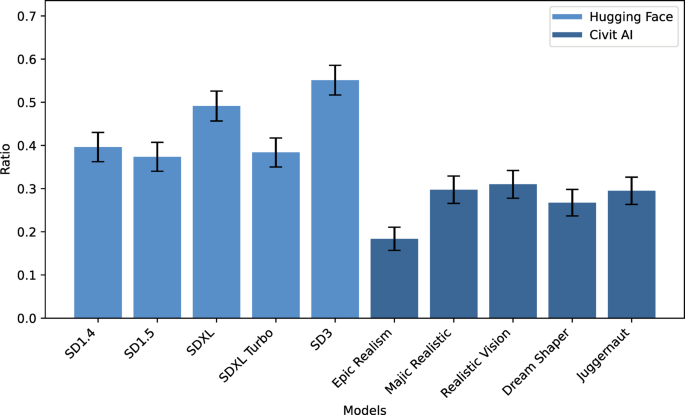

In an analysis of ten popular image generation models, we combined manual visual inspection with a data-driven assessment to evaluate harmful content. Our findings show that all ten models can generate harmful imagery and that they depict male and female nudity differently. Female nudity is often rendered in explicit detail, while male depictions sometimes show anatomically inaccurate genitals. Most of the generated nudity depicts white individuals, whereas darker skin tones appear predominantly in images of violence and gore.

The data-driven analysis indicates that over 50% of generated images contain harmful content, and that models from Hugging Face generally score better on safety metrics than those from Civitai. Female prompts accounted for 69.2% of the NSFW content produced, compared with 30.8% for male prompts. Racial bias was also evident: Black individuals are underrepresented overall, yet they are disproportionately associated with violence. These results point to biases inherent in the models and raise concerns about both their safety and their effect on social representation.
