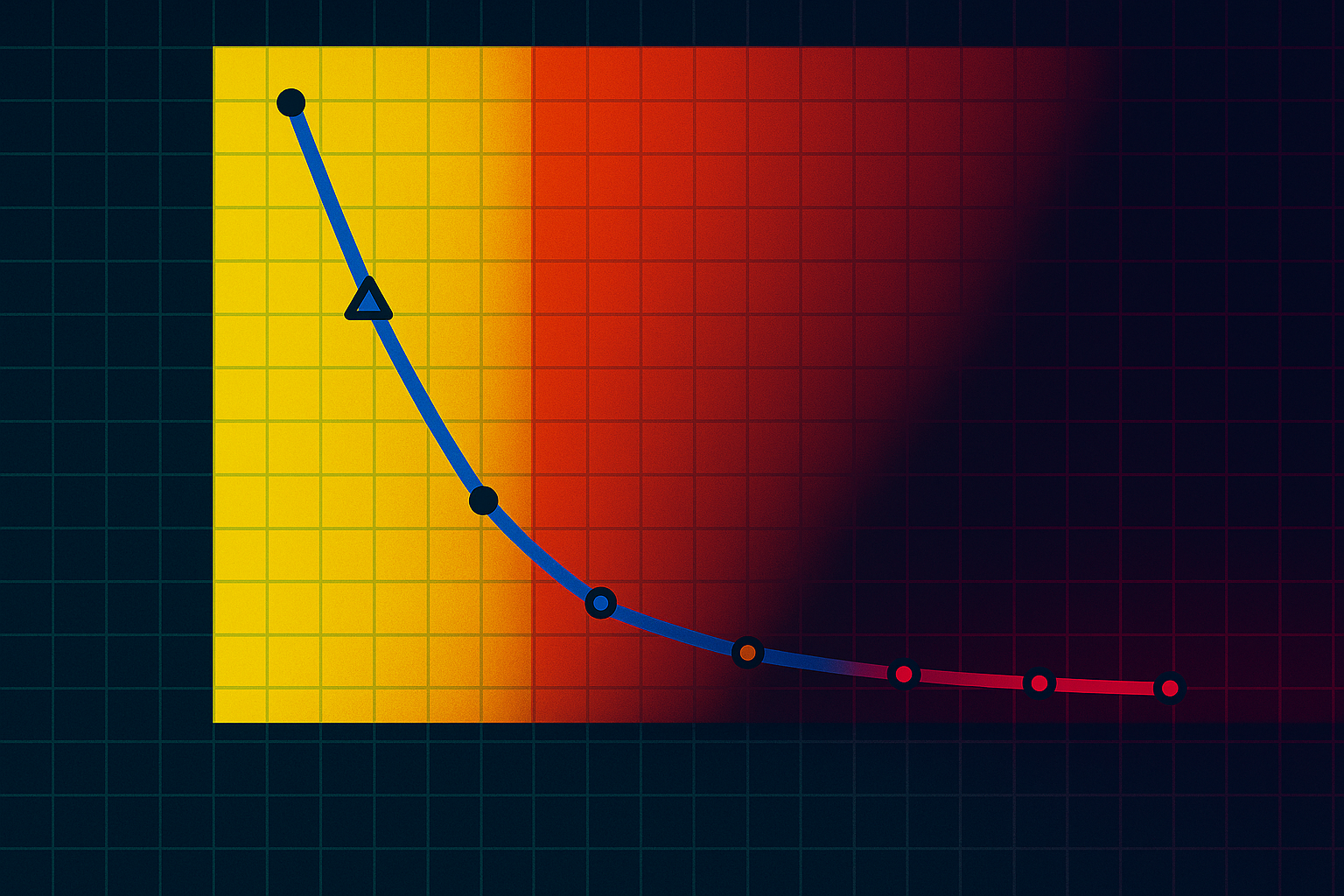

Apple researchers published a study revealing significant limitations in reasoning-focused large language models (LLMs) such as Claude 3.7 and DeepSeek-R1. These models use chain-of-thought and self-reflection techniques to tackle complex problems, yet their performance declines as task difficulty rises. The study identified three problem-solving regimes: on simple tasks, non-reasoning models excel; at moderate complexity, reasoning models catch up and show their strength; at high complexity, all models suffer a performance collapse, often cutting back their reasoning attempts. The findings imply that current LLMs do not develop general problem-solving strategies and rely on complex pattern matching rather than true reasoning. The study also criticizes the anthropomorphizing of LLM outputs, emphasizing that they are statistical calculations rather than genuine thoughts. The researchers therefore advocate reevaluating the design principles behind these models to improve their reasoning capabilities.

Source: Apple Study Reveals “Fundamental Scaling Limitations” in Reasoning Models’ Cognitive Capabilities
