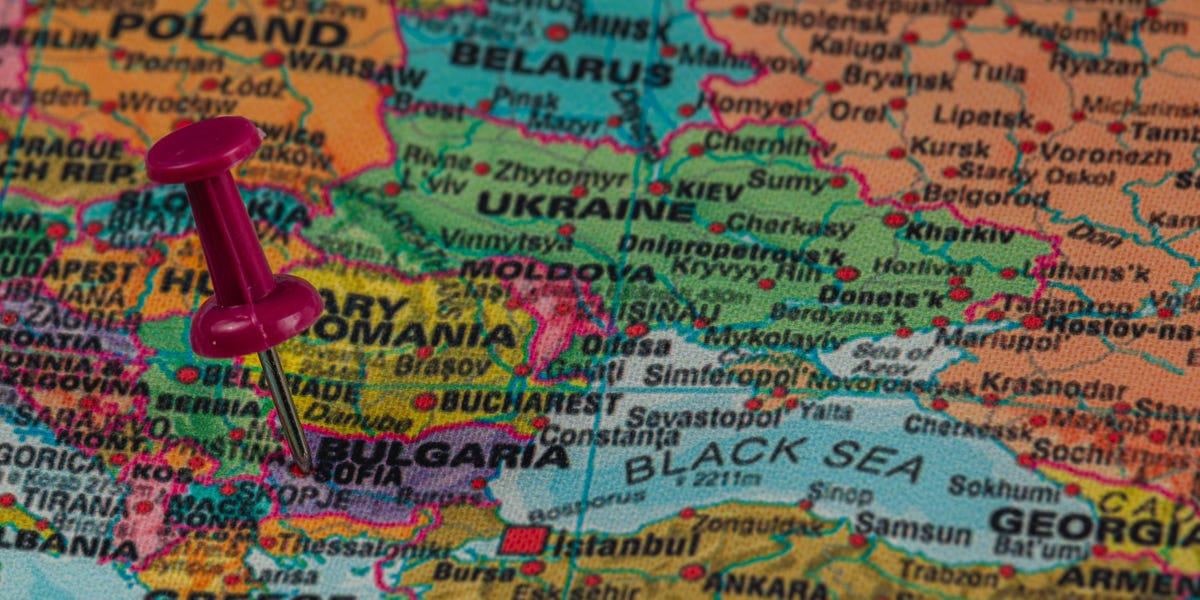

Earlier this year, AI experts including OpenAI cofounder Andrej Karpathy discussed using games, rather than conventional benchmarks, to assess large language models (LLMs). Noam Brown suggested Diplomacy, a strategy game that centers on player interactions. Inspired by this, AI researcher Alex Duffy started a project in which 18 top AI models competed in a modified version of the game, dubbed “AI Diplomacy.” Set in a politically tense Europe circa 1901, the game emphasizes alliance-building and deception. Duffy open-sourced the results, which revealed varied strategies among the models. OpenAI’s o3 emerged as the winner by using deception effectively, while Google’s Gemini 2.5 succeeded through strategic positioning. Anthropic’s Claude, in contrast, struggled because of its overly diplomatic approach. Duffy argues that traditional benchmarks no longer effectively measure AI’s rapidly advancing capabilities, and he advocates diverse testing methods to prepare AI for real-world applications.
