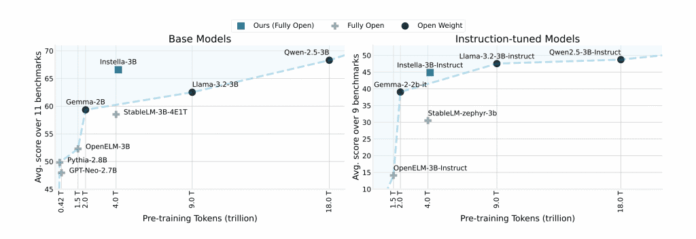

The development of advanced artificial intelligence relies heavily on large language models (LLMs), but access to many of these models is restricted because they are closed-source. To address this limitation, Jiang Liu, Jialian Wu, and Xiaodong Yu have introduced Instella, a family of fully open language models trained on publicly available data. Trained on AMD Instinct MI300X GPUs, Instella achieves state-of-the-art performance among fully open models. The family also includes specialized variants: Instella-Long for processing long texts and Instella-Math for complex mathematical reasoning.

The research emphasizes open-source practice as a way to improve transparency and reproducibility in LLM development. Key resources in this area include comprehensive benchmarks such as BIG-Bench, which is designed to assess a broad range of model capabilities. Instella's training process incorporates custom synthetic datasets intended to strengthen reasoning across tasks, which makes the models a useful tool for researchers. By releasing model weights, code, and evaluation protocols, the Instella team encourages community collaboration and innovation in the field of AI.
