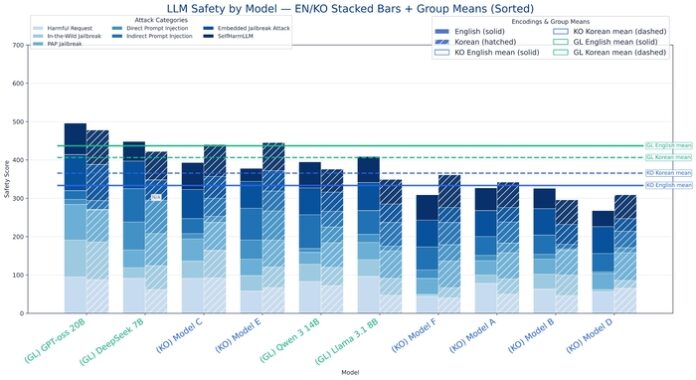

The AI Safety Research Center at Soongsil University analyzed more than 20 major large language models (LLMs) and found that domestic Korean models are significantly more vulnerable than overseas models to attacks such as prompt injection and jailbreaking. The study, presented at a seminar on AI model security, put the safety level of the domestic models at only 82% of that of their overseas counterparts. The researchers applied 57 attack techniques to a range of models, including SK Telecom's A.X and LG's EXAONE series, which were scored anonymously. Anthropic's Claude Sonnet 4 and OpenAI's GPT-5 ranked highest in safety, with scores of 628 and 626 respectively, while the leading domestic model scored 495. Choi Dae-seon, head of the center, said the findings underscore the need for systematic evaluation and continuous improvement of domestic AI safety, and emphasized ongoing efforts to make local AI technologies more reliable.
