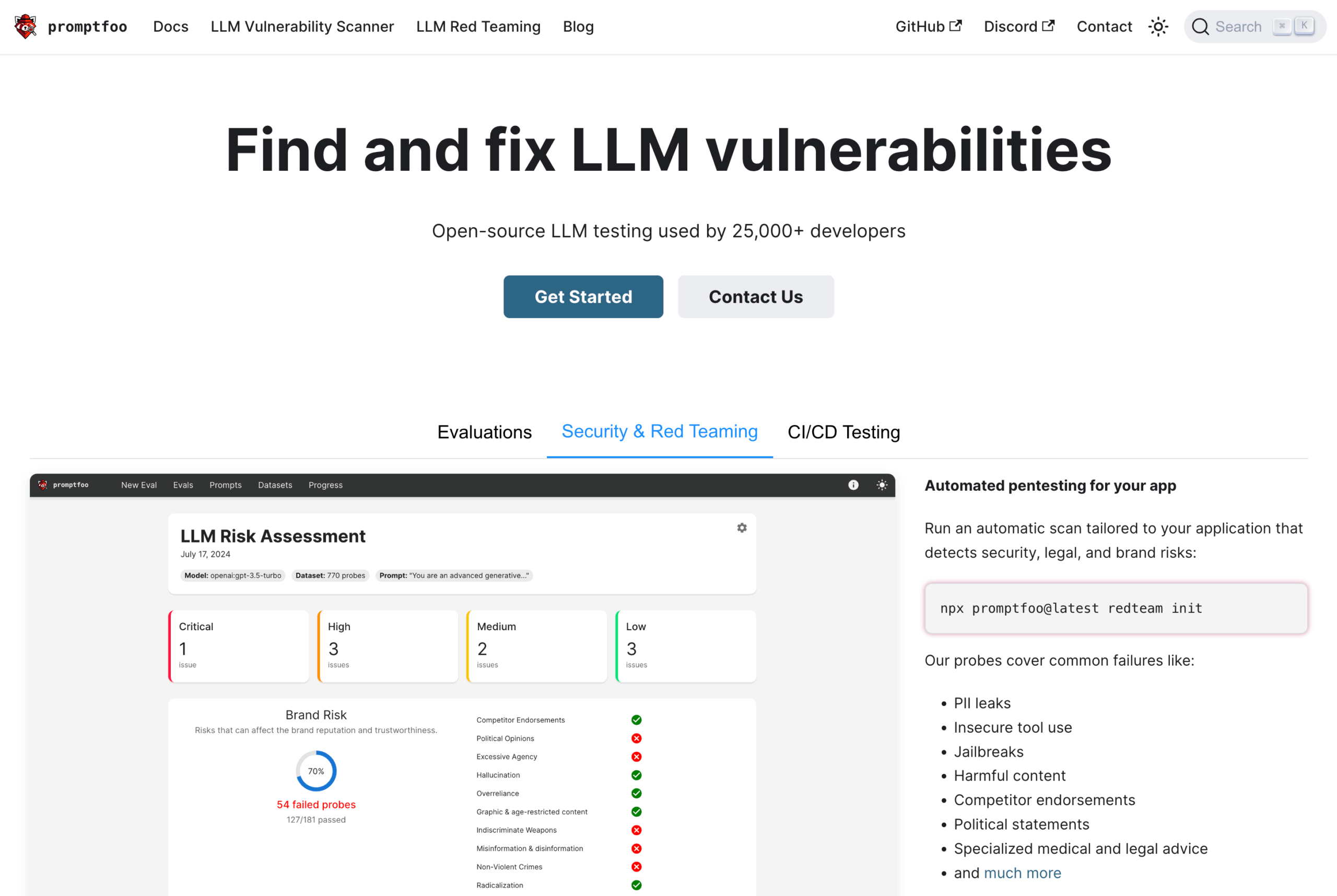

Adaptive red teaming is a targeted approach designed to assess applications rather than just models. By utilizing the command npx promptfoo@latest redteam init, users can generate customized attacks tailored to their specific use cases. The language models employed are capable of identifying various risks within systems, including direct and indirect prompt injections, customized jailbreaks that bypass security measures, data and personally identifiable information (PII) leaks, vulnerabilities related to insecure tool usage, unauthorized contract creation, and the generation of toxic content. This proactive methodology aims to enhance security measures by providing comprehensive insights into potential threats and weaknesses within applications. For more detailed information regarding this effective strategy of red teaming, additional resources are available.

Share

Read more