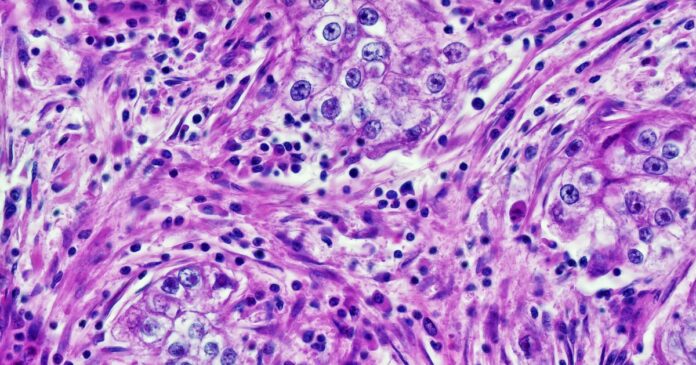

Research published in Cell Reports Medicine reveals racial and other demographic biases in AI systems designed to detect cancer. Harvard University researchers analyzed nearly 29,000 pathology images from 14,400 cancer patients and found that the accuracy of AI tools varied with age, gender, and race, affecting diagnostic decisions 29.3% of the time. The models could detect racial markers in the slides themselves, and because their training data came predominantly from white patients, their results were skewed. For example, they struggled to identify lung cancer cells in Black patients, for whom representative data was insufficient. The bias was unexpected and raises ethical concerns, because pathology evaluation is supposed to be objective. The researchers introduced a new training method, FAIR-Path, which significantly improved performance and eliminated 88.5% of the disparities. The remaining 11.5% shows that more work is needed to make AI development in healthcare equitable.
