Title: Effective Defense Against Prompt Injection in LLMs

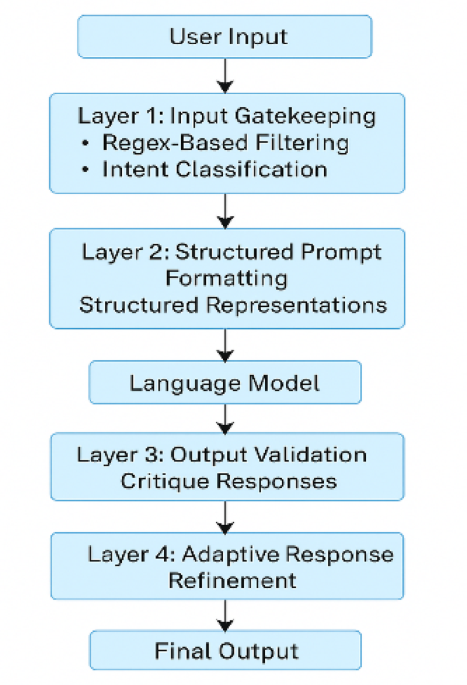

The methodology outlined in this study details a structured, modular workflow for defending against prompt injection in Large Language Models (LLMs). It begins with formal threat modeling to identify potential injection vectors, followed by dataset selection and input preprocessing for normalization. The core defense architecture, named PromptGuard, comprises four layers: input filtering using regex and MiniBERT, structured formatting with role-tagged prompts, output validation through a secondary LLM, and adaptive response refinement. To ensure effectiveness, we utilized three publicly available datasets—including PromptBench, Kaggle’s Malignant dataset, and TruthfulQA—to assess various prompt injection strategies. The output validation stage includes a critic model that evaluates semantic alignment with the original task, while the adaptive refinement layer ensures safety and policy compliance of the responses. This method not only integrates detection and mitigation processes but also enhances interpretability, safeguarding against various prompt injection threats in real-world applications.