OpenAI has launched GPT-5.3-Codex-Spark, its first model to run on Cerebras Systems' AI accelerators, which rely on ultra-fast on-chip SRAM. The lightweight model is built for responsiveness and generates more than 1,000 tokens per second. The launch follows OpenAI's recent $10 billion partnership with Cerebras, through which OpenAI aims to deploy a substantial amount of custom AI silicon to support its latest GPT models.

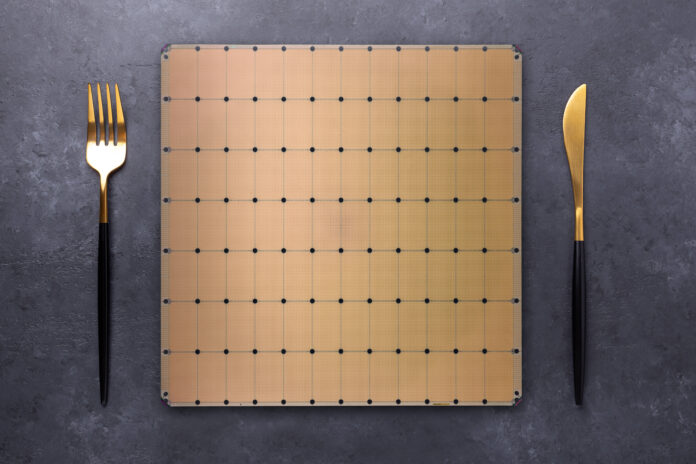

Cerebras' wafer-scale architecture is faster than even Nvidia's upcoming Rubin GPUs, but it carries only 44 GB of memory, compared with 288 GB on Rubin. The Spark model has a 128,000-token context window and generates responses quickly, which makes it well suited to interactive code assistance without sacrificing accuracy compared with previous versions. OpenAI stresses that, even as it explores alternatives, GPUs remain essential for cost-effective token generation.

GPT-5.3-Codex-Spark is currently in preview for Codex Pro users and select partners.
