Exploring AI: Can Language Models Understand the World?

A study by researchers at Harvard and MIT investigates whether large language models (LLMs) encode world models, a capability often considered essential for artificial general intelligence (AGI). Here’s what they found:

- Key Question: Can AI models grasp underlying principles (like Newton’s laws), or do they only make predictions?

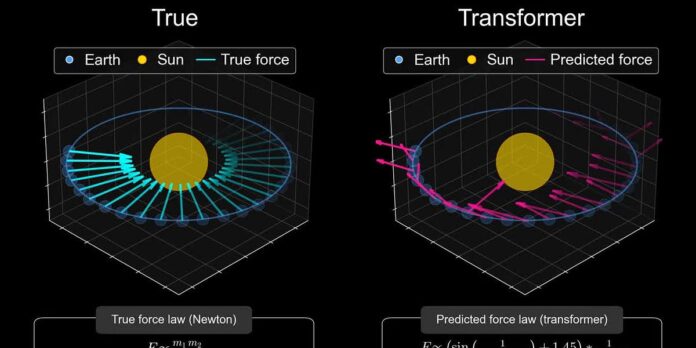

- Methodology: Researchers trained a transformer model on planetary movements, then probed whether it had picked up the gravitational forces behind them.

- Findings:

  - While the model made accurate trajectory predictions, it failed to encode Newton’s laws.

  - This points to a limitation of current models: they rely on case-specific heuristics rather than a comprehensive world model.
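To make the idea of checking trajectories against Newton’s laws concrete, here is a minimal sketch. It is not the researchers’ method. It simulates a single orbit with Newtonian gravity, recovers accelerations from positions alone, and fits the exponent of the implied force law. The function names (`simulate_orbit`, `fit_force_exponent`), the initial conditions, and the unit choice `gm=1.0` are all assumptions made for illustration. Run on a model’s predicted positions instead of the simulator’s, the same fit would show whether the predictions imply an inverse-square force.

```python
import math


def simulate_orbit(steps=2000, dt=0.001, gm=1.0):
    """Integrate a 2D orbit around a fixed central mass (velocity Verlet)."""
    x, y = 1.0, 0.0
    vx, vy = 0.0, 0.8  # below circular speed, so the orbit is elliptical

    def accel(px, py):
        # Newtonian gravity: a = -GM * r_vec / |r|^3
        r3 = (px * px + py * py) ** 1.5
        return -gm * px / r3, -gm * py / r3

    ax, ay = accel(x, y)
    positions = [(x, y)]
    for _ in range(steps):
        x += vx * dt + 0.5 * ax * dt * dt
        y += vy * dt + 0.5 * ay * dt * dt
        new_ax, new_ay = accel(x, y)
        vx += 0.5 * (ax + new_ax) * dt
        vy += 0.5 * (ay + new_ay) * dt
        ax, ay = new_ax, new_ay
        positions.append((x, y))
    return positions


def fit_force_exponent(positions, dt):
    """Fit p in |a| ~ r^p using only positions (log-log least squares)."""
    log_r, log_a = [], []
    triples = zip(positions, positions[1:], positions[2:])
    for (x0, y0), (x1, y1), (x2, y2) in triples:
        # Central finite difference recovers acceleration at the middle point.
        ax = (x2 - 2 * x1 + x0) / dt ** 2
        ay = (y2 - 2 * y1 + y0) / dt ** 2
        log_r.append(math.log(math.hypot(x1, y1)))
        log_a.append(math.log(math.hypot(ax, ay)))
    n = len(log_r)
    mean_r = sum(log_r) / n
    mean_a = sum(log_a) / n
    cov = sum((r - mean_r) * (a - mean_a) for r, a in zip(log_r, log_a))
    var = sum((r - mean_r) ** 2 for r in log_r)
    return cov / var


DT = 0.001
orbit = simulate_orbit(dt=DT)
exponent = fit_force_exponent(orbit, DT)
print(f"fitted force-law exponent: {exponent:.3f}")
```

For Newtonian gravity the fitted exponent should come out close to -2. A predictor that tracks positions well but yields a different or inconsistent exponent would be matching trajectories without an inverse-square law behind them.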

Implications:

- The inability of LLMs to generate true world models suggests a need for architectural breakthroughs.

- Current models excel at pattern recognition but lack the contextual understanding that generalization requires.

Understanding this distinction has profound implications for AI development and its future role in scientific inquiry.

Join the conversation! Share your thoughts on how AI can transcend current limitations and lead to a deeper understanding of the world.