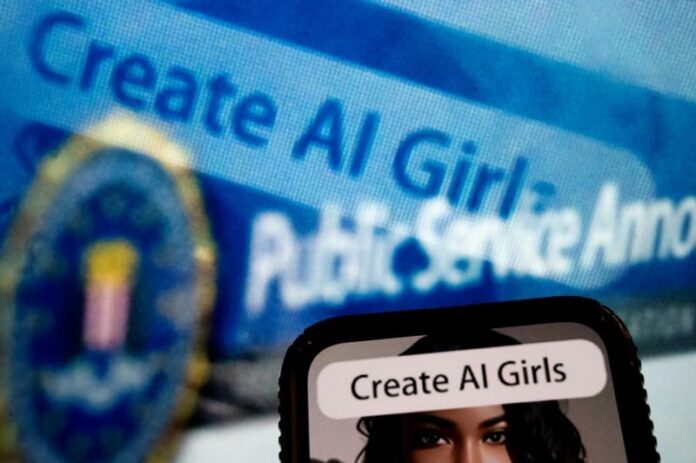

Elijah Heacock, a Kentucky teenager, died by suicide after being targeted in a sextortion scam in which the perpetrators demanded $3,000 to prevent the distribution of an AI-generated nude image. His case reflects a growing global problem: children are increasingly victimized by such scams, fueled by the rise of “nudify” apps that generate sexualized imagery. Investigators report a disturbing increase in cases, particularly among boys aged 14 to 17, and numerous victims have died by suicide. Studies indicate that 6% of American teens have been victims of deepfake nudes, which predators use for financial blackmail. Despite efforts by platforms and regulators, the apps that generate this content remain profitable and difficult to eliminate. Recent legal measures, such as the “Take It Down Act” in the U.S. and UK laws targeting deepfake creation, aim to curb the problem, but it persists as these tools continue to thrive online.
