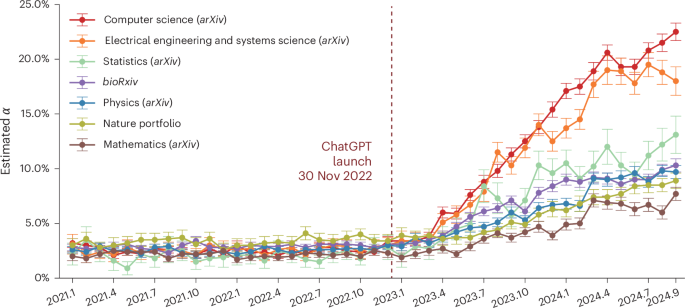

Recent discussions highlight the growing use of AI tools such as ChatGPT in academic research, raising concerns about academic integrity and the reliability of scientific publishing. Articles from sources such as Cybernews and Nature report that AI-generated content is appearing in journals, prompting calls for stricter monitoring and better detection methods. Researchers are developing bias-aware detection tools to address detection inaccuracies that disproportionately affect non-native English authors. Investigations into peer review are also exploring how AI influences manuscript evaluations. New studies argue that generative AI can assist in research writing but should not replace human authorship (Science). The potential for disruption underscores the need for clear policies and ethical guidelines, and the impact on legitimate research practices continues to drive debate about authenticity and the future of scholarly communication.

