The latest episode of the Google AI: Release Notes podcast discusses Gemini, Google’s AI model, its coding capabilities, and their implications for small businesses. Host Logan Kilpatrick and guests Connie Fan and Danny Tarlow explore how Gemini supports “vibe coding,” a more intuitive style of coding that relies on context and stated intent rather than strict syntax. The model can streamline tasks such as code generation and debugging, which can speed up product development and lower operational costs, a particular benefit for small businesses with limited resources. Integrating AI is a significant opportunity, but it comes with challenges: a learning curve and the risk that the model misinterprets the developer’s intent. Despite these risks, the podcast suggests that early adopters of AI tools like Gemini can gain a competitive edge, and that embracing the technology can foster continuous learning and innovation in software development.


Gemini’s New Podcast Delves into Innovative Coding Features

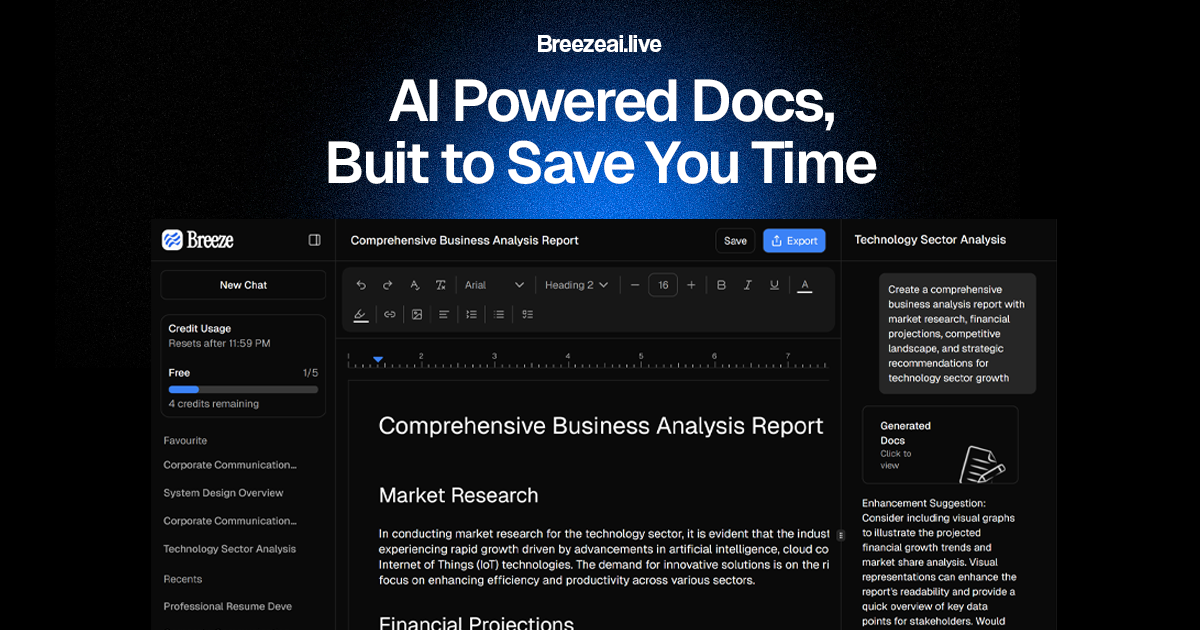

Introducing an AI-Enhanced Word Document Editor: A Show HN Submission



iPhone Users Now Able to Stream Videos with Google’s Gemini Assistant

Google’s Gemini AI assistant now supports video uploads, allowing users to attach short videos for analysis and Q&A. The functionality is available on Android, iPhone, and the web, and lets users ask questions about video content, such as details displayed on screens or descriptions of a scene. To use it, users upload a video through the chat interface; Gemini processes the content and shows it in an integrated video player. Following initial testing phases, the feature is available to both free and paid users. Video input complements existing functions like image and document uploads, extending Gemini’s multimodal capabilities. While it opens new use cases, such as troubleshooting and summarizing lectures, it also raises privacy concerns about metadata storage, and closer scrutiny of Gemini’s privacy practices is expected as its capabilities expand.


Introducing ToolQL: Develop AI Tools Effortlessly Using GraphQL

ToolQL is aimed at organizations that already use GraphQL and want to add AI agent capabilities quickly. With just two files, .env and .graphql, users can set up a new agent. The tool integrates with LangChain, MCP, and visual tools for extended functionality. Although still in its early stages, ToolQL has working demos; more documentation and features such as Relay pagination and proxy authentication are planned. Users are encouraged to star the repository, follow updates, and engage with the community. The creator is available for questions and discussion during Australian business hours.
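The summary does not show ToolQL's file format, so the following is only a rough illustration of the general idea of deriving agent tools from a .graphql file. It is a minimal Python sketch under stated assumptions: the example document, the `operations_to_tools` function, and the shape of the resulting tool descriptions are all hypothetical and are not ToolQL's actual API. It treats each named query or mutation as one tool and its variables as that tool's parameters.

```python
import re

# Hypothetical .graphql content for illustration; not taken from ToolQL.
EXAMPLE_GRAPHQL = """
query GetUser($id: ID!) {
  user(id: $id) { name email }
}

mutation RenameUser($id: ID!, $name: String) {
  renameUser(id: $id, name: $name) { id }
}
"""

# Matches a "query Name(...)" or "mutation Name(...)" header; the variable
# list is optional. Default values containing ")" are not handled.
OPERATION_RE = re.compile(r"\b(query|mutation)\s+(\w+)\s*(?:\(([^)]*)\))?")

# Matches one "$name: Type" declaration. Only simple named types are
# handled; list types such as [String!]! would need a real GraphQL parser.
VARIABLE_RE = re.compile(r"\$(\w+)\s*:\s*(\w+)(!?)")


def operations_to_tools(document: str) -> list[dict]:
    """Turn each named operation into a tool description (assumed shape)."""
    tools = []
    for kind, name, variables in OPERATION_RE.findall(document):
        params = {
            var: {"type": gql_type, "required": bang == "!"}
            for var, gql_type, bang in VARIABLE_RE.findall(variables)
        }
        tools.append({"name": name, "kind": kind, "parameters": params})
    return tools


if __name__ == "__main__":
    for tool in operations_to_tools(EXAMPLE_GRAPHQL):
        print(tool)
```

A regex is used here only to keep the sketch dependency-free; a production tool would more plausibly rely on a full GraphQL parser and on schema introspection to validate types.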

“MIT Study Cautions: Regular Use of ChatGPT and AI Tools Could Hinder Creativity, Diminish Critical Thinking, and Heighten Manipulation Risks” – Wccftech

A recent MIT study raises concerns about the frequent use of AI tools like ChatGPT, suggesting that they may adversely affect cognitive functions and creativity. Researchers warn that heavy reliance on such technologies could dull mental sharpness and impair critical thinking skills. The study highlights the potential for users to become increasingly dependent on AI, which might lead to manipulation and a decrease in original thought. Over time, individuals may find it more challenging to process information independently and generate creative ideas. Instead of enhancing cognitive abilities, constant engagement with AI tools could result in a diminished capacity for complex problem-solving and innovation. This research calls for a mindful approach to using AI, encouraging users to balance technology use with traditional thinking and creativity exercises to foster stronger mental acuity and prevent cognitive decline.


GitHub CEO Emphasizes the Enduring Importance of Manual Coding Amid AI Surge

GitHub CEO Thomas Dohmke emphasizes the importance of manual coding skills in the age of artificial intelligence. Despite the surge in AI technologies that assist programming, he asserts that understanding core coding principles and problem-solving remains crucial for developers. Dohmke argues that while tools can enhance productivity, the ability to write and comprehend code is vital for effective collaboration and innovation in tech. He acknowledges AI’s role in streamlining workflows but insists that it should complement rather than replace human expertise. The article highlights a balanced approach to leveraging AI while maintaining strong foundational skills in coding. This synthesis suggests that the future of programming isn’t solely reliant on AI, but on a harmonious blend of human capability and technological advancement.


Disney Takes Legal Action Against AI Company to Prevent Clone of Darth Vader – TipRanks

Disney has filed a lawsuit against an artificial intelligence firm to prevent the unauthorized creation of a digital clone of the iconic character Darth Vader. The lawsuit argues that the AI company’s actions infringe on Disney’s intellectual property rights, which protect its characters and narratives. Disney asserts that allowing the creation of such clones could dilute the brand and harm its reputation, as it could lead to the misuse of the character in ways not aligned with Disney’s vision. The company is seeking legal remedies to halt the use of AI technologies that replicate or distort its beloved characters. This legal action underscores the growing concerns within the entertainment industry regarding the implications of AI on creative intellectual property, especially as technologies evolve, raising questions about ownership and control over fictional personas. Disney aims to safeguard its iconic characters from being reproduced without authorization.


Building an AI-Powered Ransomware Worm: Insights from Truffle Security Co.

Concerned about the potential misuse of smaller large language models (LLMs) in malware, the author built a hacking LLM capable of self-replication and presented the findings at BSidesSF. The bot uses reinforcement learning and vector search for guidance, reaching a hacking ability comparable to that of a teenager. Unlike current AI worms, which rely on third-party services, this model does not depend on one and therefore cannot be centrally shut down. The author argues that financial incentives make such advanced malware inevitable, as past ransomware profits have shown.

The hacking process is driven by a supervisor, a Python script that directs the LLM in pre-exploit and post-exploit modes and uses tools such as Nmap and TruffleHog for reconnaissance and credential gathering. The author acknowledges the model’s limited ability but improves its performance by feeding it extensive hacking guides. Ultimately, the author refrains from writing the replication code because of safety concerns and stresses the urgent need for AI safety teams to treat these capabilities as threats, especially as AI accelerates malware evolution.


“Okta Introduces New Protocol to Enhance Security for AI Agent Interactions Across Applications” – Investing.com

Okta has introduced a new protocol designed to enhance security for interactions involving AI agents across various applications. As AI becomes integral to business operations, ensuring secure communications between applications and AI agents is paramount. The protocol aims to protect sensitive data and maintain user privacy while allowing seamless interactions. This initiative addresses growing concerns about potential vulnerabilities in AI systems and strives to set a standard for secure AI application integrations. By implementing this protocol, Okta intends to reinforce trust among businesses utilizing AI technologies and safeguard against data breaches and unauthorized access. This move underscores Okta’s commitment to supporting secure digital transformations and catering to the evolving needs of organizations leveraging AI. The development is timely, as industries increasingly incorporate AI for improved efficiency and decision-making. Overall, Okta’s protocol could establish a new benchmark for security in AI interactions across diverse applications.


Meta Engages in Discussions with Startup Runway to Enhance AI Recruiting Efforts

Meta has held talks to acquire the AI startup Runway as part of its push to recruit AI talent. Runway, known for its generative AI technology, could bolster Meta’s development in areas such as machine learning and creative content generation. The move aligns with Meta’s broader strategy to integrate AI into its platforms and services. By potentially bringing Runway’s team and tools in-house, Meta aims to strengthen its position in the competitive AI landscape, particularly in the contest for talent. The talks reflect Meta’s ongoing commitment to using AI to streamline operations and improve user experiences across its offerings.
