IBM Unveils Cutting-Edge AI Tool for PFAS Screening

IBM has introduced an AI-powered tool called the Safer Materials Advisor to help identify and eliminate PFAS (per- and polyfluoroalkyl substances) from its research operations. By screening chemical requests more efficiently, the tool reduces errors and saves employees time. Angela Hutchinson, a chemical coordinator at IBM, noted that the tool can conduct up to three screenings, providing important data on substances and suggesting viable non-PFAS alternatives. The tool was developed as part of a broader initiative called “PFACTS,” a collaboration involving Cornell University and other institutions that aims to mitigate the impact of PFAS, often called “forever chemicals.” Funded by the National Science Foundation, PFACTS has also produced pfasID, an open-source screening tool designed to streamline the identification of fluorochemicals for stakeholders such as researchers and policymakers, thereby promoting safer chemistry practices in the industry.

Source link
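Both the Safer Materials Advisor and pfasID start from the same question: is this chemical a PFAS? Neither tool's internal logic is described in the summary, so the sketch below illustrates just one common structural rule, the OECD's 2021 definition (a fluorinated substance with at least one fully fluorinated methyl or methylene carbon, meaning no H, Cl, Br, or I attached to it). The molecule encoding (element list plus bond index pairs, with explicit hydrogens) and the `is_pfas` name are hypothetical, and the check ignores bond orders and the definition's listed exceptions, so treat it as an illustration rather than a screening tool.

```python
from typing import List, Tuple

# Atoms that stop a CF2/CF3 carbon from counting as "fully fluorinated".
DISQUALIFYING = {"H", "Cl", "Br", "I"}

def is_pfas(atoms: List[str], bonds: List[Tuple[int, int]]) -> bool:
    """Return True if any carbon carries >= 2 fluorines and no H/Cl/Br/I.

    Hypothetical encoding: `atoms` holds element symbols with explicit
    hydrogens; `bonds` holds index pairs. Bond orders are ignored.
    """
    neighbors = {i: [] for i in range(len(atoms))}
    for a, b in bonds:
        neighbors[a].append(atoms[b])
        neighbors[b].append(atoms[a])
    for i, element in enumerate(atoms):
        if element != "C":
            continue
        attached = neighbors[i]
        # A CF3- (methyl) or -CF2- (methylene) carbon with nothing disqualifying attached.
        if attached.count("F") >= 2 and not any(x in DISQUALIFYING for x in attached):
            return True
    return False

# Trifluoroacetic acid, CF3-COOH: the CF3 carbon qualifies.
tfa_atoms = ["C", "F", "F", "F", "C", "O", "O", "H"]
tfa_bonds = [(0, 1), (0, 2), (0, 3), (0, 4), (4, 5), (4, 6), (6, 7)]
print(is_pfas(tfa_atoms, tfa_bonds))  # True
# Difluoromethane, CH2F2: its only carbon still carries hydrogen.
print(is_pfas(["C", "F", "F", "H", "H"], [(0, 1), (0, 2), (0, 3), (0, 4)]))  # False
```

Requiring explicit hydrogens keeps the rule honest: with implicit hydrogens, a CH2F2 carbon would look like a bare CF2 and be wrongly flagged.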

Enhancing Web Interactions: Imagine a Smarter Way to Connect Online

The project presents an experimental, ultra-fast intent router for web forms that uses a lightweight machine learning model to classify user inputs such as names, emails, and addresses. It aims to streamline form-filling and improve micro-interactions online, processing each input in under 20 milliseconds so the experience feels seamless. The intent router acts as a “spinal cord” for micro-decisions, which distinguishes it from traditional chatbots and large language models. It can be deployed as a web widget, a browser extension, or a backend API. The project includes Python scripts for model training, a web frontend for demonstrations, and JavaScript files for inference and input routing. Overall, the intent router aims to make online interactions more efficient and nearly invisible, improving user engagement by simplifying input. The documentation includes brief setup instructions for running the backend and demo applications.
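The summary does not show the project's model or API, so the sketch below only illustrates the routing contract it describes: take one raw form input, return an intent label, and stay inside a latency budget. It swaps the trained classifier for simple regular-expression rules, and the labels, patterns, and the `classify`/`route` names are hypothetical. It is written in Python, although the project itself runs inference in JavaScript.

```python
import re
import time
from dataclasses import dataclass

# Hypothetical heuristics standing in for the project's trained model.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
# Street-address heuristic: a house number followed by a word ("10 Downing St").
ADDRESS_RE = re.compile(r"^\d+\s+\w+")
# Person-name heuristic: one to four alphabetic tokens (apostrophes/hyphens allowed).
NAME_RE = re.compile(r"^[A-Za-z][A-Za-z'-]*(?:\s+[A-Za-z][A-Za-z'-]*){0,3}$")

@dataclass
class Route:
    label: str         # "email", "address", "name", or "unknown"
    latency_ms: float  # time spent classifying this input

def classify(text: str) -> str:
    """Rule-based stand-in for the trained model: returns an intent label."""
    s = text.strip()
    if EMAIL_RE.match(s):
        return "email"
    if ADDRESS_RE.match(s):
        return "address"
    if NAME_RE.match(s):
        return "name"
    return "unknown"

def route(text: str, budget_ms: float = 20.0) -> Route:
    """Classify one input; fall back to "unknown" if it exceeds the latency budget."""
    start = time.perf_counter()
    label = classify(text)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    if elapsed_ms > budget_ms:
        label = "unknown"  # never block the form on a slow decision
    return Route(label, elapsed_ms)

for sample in ["jane@example.com", "10 Downing Street", "Ada Lovelace", "???"]:
    print(sample, "->", route(sample).label)
```

A real deployment would replace `classify` with the trained model's inference call. Keeping the budget check outside the model means a slow prediction degrades to "unknown" instead of stalling the form.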

Source link

Gemini AI by Google Forecasts the Timing of the Next Crypto Bull Run

Google’s Gemini AI predicts a surge in cryptocurrency prices later this year, despite current geopolitical tensions affecting the market. Bitcoin’s price briefly dropped below $100,000 amid the US’s involvement in the Israel-Iran conflict but has since rebounded to $101,000, suggesting market resilience. Gemini suggests Bitcoin could be seen as a safe-haven asset, especially if military escalations prompt central banks to implement quantitative easing, which could boost cryptocurrency values. Predictions indicate Bitcoin could reach $250,000, while Ethereum might exceed $10,000, fueled by growing institutional interest and ETF developments. Additionally, the BTC Bull Token is projected to achieve a near-40x return by 2025, presenting a significant opportunity for investors. The presale has already raised over $8 million and closes soon, and potential buyers are urged to act quickly. Ultimately, while the market remains volatile, observers are cautiously optimistic about a future bull run.

Source link

I Captured Your Soul in a Trading Card: A Journey with Client-Side AI

In this detailed article, Jacob shares his journey developing Summon Self, an app that turns users into trading cards with personalized stats, graphics, and rarity. The app uses AI to generate unique attributes from user photos, relying on MobileCLIP and Core ML for image classification and on Metal shaders for graphical effects. The cards can be exchanged in real life, encouraging social interaction. Motivated by his childhood fascination with the Yu-Gi-Oh franchise, Jacob emphasizes a fun, engaging user experience. Development went through several iterations, from concept to proof of concept, with a focus on features like customizable card titles and stats. The interface is built with SwiftUI, the app also uses Apple’s Vision framework, and the goal is a collectible experience akin to Pokémon cards. Jacob invites feedback, plans to refine the app for a broader audience, and hopes it will eventually go viral. Users can download it from the App Store and share their creations.
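MobileCLIP, like CLIP, embeds images and text prompts into a shared vector space, so a photo can be classified zero-shot by comparing its embedding with the embeddings of candidate text labels. The sketch below shows only that scoring step, in plain Python; the app itself runs on-device with Core ML, and the summary does not say how Summon Self turns these scores into stats or rarity. The toy three-dimensional vectors, the label names, and the helper names are hypothetical stand-ins for real encoder outputs, and the logit scale of 100 is assumed from the value commonly used with CLIP.

```python
import math
from typing import Dict, List

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot(image_emb: List[float], prompt_embs: Dict[str, List[float]],
              logit_scale: float = 100.0) -> Dict[str, float]:
    """CLIP-style zero-shot scores: softmax over scaled cosine similarities."""
    logits = {label: logit_scale * cosine(image_emb, emb)
              for label, emb in prompt_embs.items()}
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(v - peak) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: v / total for label, v in exps.items()}

# Toy vectors standing in for MobileCLIP image and text embeddings (hypothetical).
prompts = {"wizard": [1.0, 0.0, 0.0], "warrior": [0.0, 1.0, 0.0], "rogue": [0.0, 0.0, 1.0]}
probs = zero_shot([0.9, 0.1, 0.0], prompts)
print(max(probs, key=probs.get))  # wizard
```

Because the labels are just text prompts, new card archetypes can be added without retraining: only their text embeddings need to be computed.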

AI Impact Awards 2025: Transforming Employee Satisfaction and Innovation Through AI Management Tools

The workplace impact of AI is significant, with expectations for rapid growth due to advancements like ChatGPT. AI tools now automate tasks, such as meeting summaries and performance feedback, helping employees save time and focus on strategic work. Companies like Zoom, Pfizer, and Betterworks received Newsweek’s AI Impact Awards for pioneering innovations that enhance collaboration, reduce meetings, and improve performance management. For instance, Betterworks’ AI tool improved performance review completion at LivePerson by 30%, providing clearer feedback for employees. Zoom’s AI Companion enables richer meeting experiences and summarization, reducing the need for excessive meetings. Meanwhile, Pfizer’s AI Academy trains employees on AI tools, achieving a notable ROI and improving user engagement. This comprehensive approach aims to alleviate manager burdens and foster a more efficient and engaged workforce, illustrating AI’s potential to transform workplace dynamics.

Source link

John Oliver Warns: “AI Slop Could Be Potentially Dangerous”

On his HBO show, John Oliver highlighted the societal dangers of AI, calling the proliferation of AI-generated content “worryingly corrosive.” He used the term “AI slop” to describe the influx of bizarre but professional-looking media on social media, which often misleads users into believing it is real. Oliver noted that AI tools have lowered the barrier to content creation, letting odd images and videos flood feeds uninvited, especially after Meta’s algorithm changes. He emphasized the monetization angle: viral content can earn creators small amounts or, in rare cases, substantial sums. The environmental toll of AI production and the potential for misinformation were also significant concerns, exemplified by fabricated disasters that distort reality during conflicts and natural calamities. Oliver warned that while some AI content may be entertaining, its spread undermines objective reality, makes genuine news harder to detect, and endangers informed discourse.

Source link

Harnessing AI Today: Your Essential Quick Guide

The landscape of AI tools has evolved, making it essential for users to choose the right system. The top contenders are Anthropic’s Claude, Google’s Gemini, and OpenAI’s ChatGPT, each offering powerful capabilities such as voice interaction, image and video creation, and deep research features. While many advanced features come with a monthly cost, committing to one of these systems is generally worthwhile for serious users. Picking the right model within a system also matters: models range from fast, casual versions to more powerful ones, and the best choice depends on the task at hand. Privacy policies vary as well: Claude doesn’t train on user data, while the others might. Features like Deep Research can boost productivity by producing detailed reports. Engaging with AI conversationally, providing context, and experimenting with different prompts can lead to better outcomes. Ultimately, effective AI use involves understanding and using these systems’ more complex features rather than relying on surface-level interactions.

Source link

OpenAI and Jony Ive’s Hardware Ambitions Face Legal Setback

OpenAI’s hardware collaboration with Jony Ive has hit a legal setback: Iyo, a company developing AI-powered ear-worn audio devices, has filed a trademark infringement lawsuit over OpenAI’s use of the name “io” for its hardware division. Iyo claims the similar names create confusion, given that both companies focus on similar products. The lawsuit seeks to stop OpenAI from using the name, and a court order in the case has led OpenAI to remove all mentions of it, including a promotional video. OpenAI acknowledged the court’s order but said it disagrees with the complaint and is exploring its options. Despite this setback to its branding, OpenAI’s broader hardware ambitions remain intact, and the company may adopt a new name before launching its products.

Source link

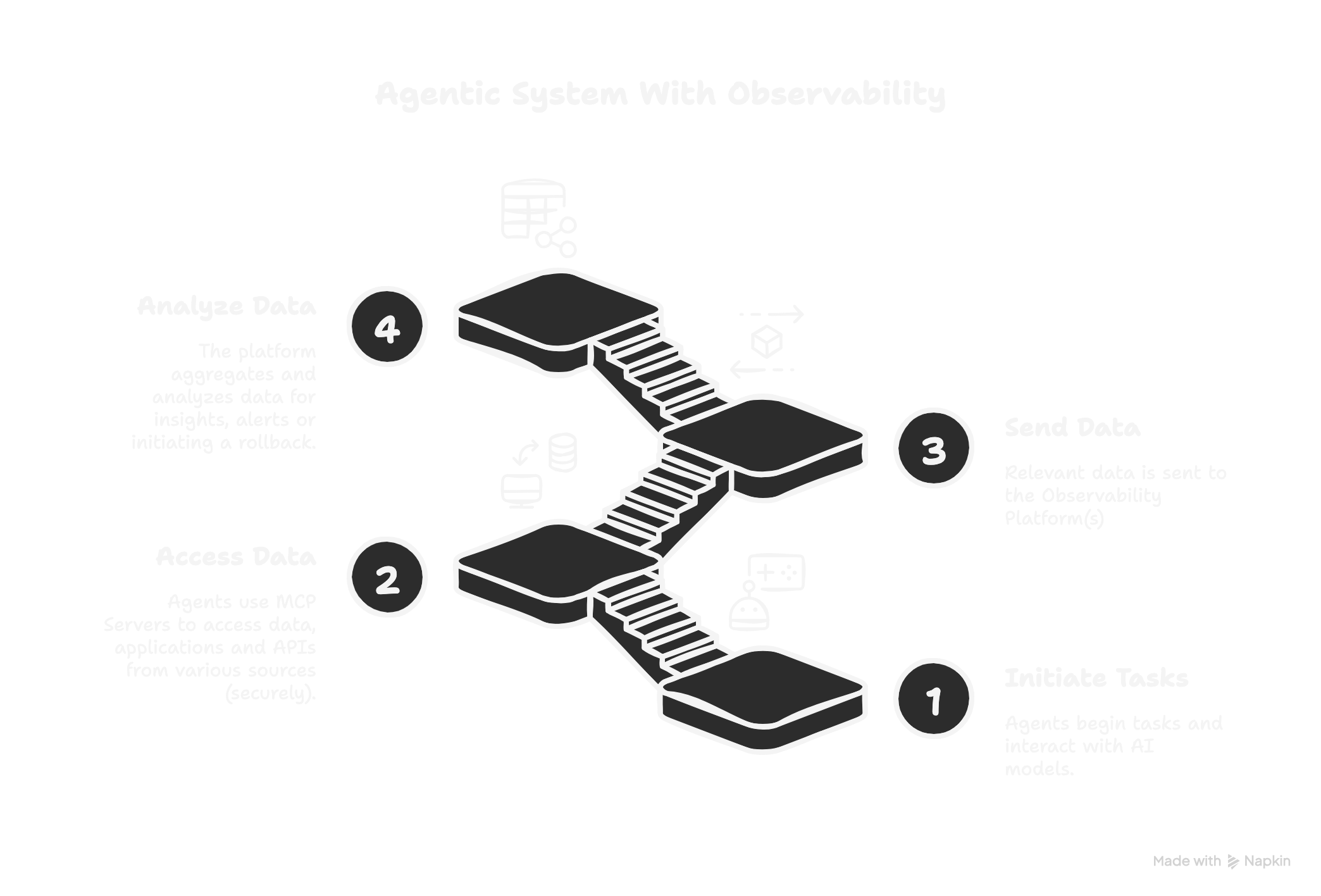

Essential Steps to Take Before Creating an Agent

As teams rush to develop AI agents, they often overlook critical foundational aspects vital for their safe and effective operation. An AI agent is not merely a function invoking a language model; it’s a complex system requiring thorough groundwork in observability, evaluation, and rollout control to prevent degradation or unintended harm in production. To build robust agents, several key practices should be implemented:

- Eval-First Pipeline: Continuous evaluation and structured logging ensure that agent behaviors evolve responsibly.
- Advanced Observability: Track prompts, contexts, and user feedback to preemptively address issues.
- Permission-Aware Rollouts: Use gradual deployment strategies, like canary and blue/green deployments, to manage elevated access securely.
- CI/CD Guardrails: Fast iteration and safety checks must be integrated into the development pipeline.
- Zero-Trust Approach: Treat all external APIs and tools as potentially vulnerable.
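The eval-first and CI/CD guardrail practices listed above can be combined into a simple release gate: run the candidate agent over a fixed test suite, write one structured log record per case, and block deployment if the pass rate falls below a threshold. This is a minimal sketch under stated assumptions. The substring pass criterion, the 0.95 threshold, and all names are hypothetical, a production suite would likely need richer graders, and none of this is StarOps' implementation.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, List

@dataclass
class EvalCase:
    case_id: str
    prompt: str
    must_contain: str  # hypothetical pass criterion: a required substring

@dataclass
class EvalResult:
    case_id: str
    passed: bool
    output: str

def run_evals(agent: Callable[[str], str], cases: List[EvalCase],
              log: List[str]) -> List[EvalResult]:
    """Run every case and append one JSON log line per result."""
    results = []
    for case in cases:
        output = agent(case.prompt)
        result = EvalResult(case.case_id, case.must_contain in output, output)
        log.append(json.dumps(asdict(result)))  # structured, diffable across versions
        results.append(result)
    return results

def release_gate(results: List[EvalResult], min_pass_rate: float = 0.95) -> bool:
    """CI guardrail: allow deployment only if enough cases pass."""
    if not results:
        return False  # an empty suite should never count as a pass
    pass_rate = sum(r.passed for r in results) / len(results)
    return pass_rate >= min_pass_rate

# Usage with a stand-in "agent" that just upper-cases its input.
log: List[str] = []
cases = [EvalCase("refund", "issue a refund", "REFUND"),
         EvalCase("greet", "say hi", "HELLO")]
results = run_evals(lambda p: p.upper(), cases, log)
print(release_gate(results))  # False: only 1 of 2 cases passes
print(log[0])
```

Failing closed on an empty suite matters in CI: a misconfigured test path should block a release, not silently approve it.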

StarOps aims to provide the infrastructure necessary for building and maintaining AI agents effectively.
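The permission-aware rollout practice from the list above can be sketched as deterministic canary bucketing plus a per-version scope allowlist, so a new agent version that needs elevated access reaches only a small, stable slice of users and never receives more than its allowlist permits. This is an assumption-laden sketch: the version names, scopes, and percentages are hypothetical, and it is not how StarOps or any particular platform implements rollouts.

```python
import hashlib
from typing import Dict, Set

STABLE, CANARY = "agent-v1", "agent-v2"  # hypothetical version names

# Hypothetical per-version allowlists: the canary asks for elevated write access.
ALLOWED_SCOPES: Dict[str, Set[str]] = {
    STABLE: {"read:tickets"},
    CANARY: {"read:tickets", "write:tickets"},
}

def bucket(user_id: str, buckets: int = 100) -> int:
    """Deterministically map a user to 0..buckets-1 so assignment is sticky."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets

def choose_version(user_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of users to the canary version."""
    return CANARY if bucket(user_id) < canary_percent else STABLE

def grant(version: str, requested: Set[str]) -> Set[str]:
    """Grant only scopes on the version's allowlist; unknown versions get nothing."""
    return requested & ALLOWED_SCOPES.get(version, set())

version = choose_version("user-42", canary_percent=5)
print(version, sorted(grant(version, {"read:tickets", "write:tickets"})))
```

Hashing the user ID, rather than sampling randomly per request, keeps each user on one version, and raising `canary_percent` only adds users to the canary cohort, never removes them.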