The article “Building Event-Driven AI Agents with UAgents and Google Gemini” is a detailed guide to building modular AI agents in Python. It introduces UAgents, a framework for developing event-driven applications that handles asynchronous tasks efficiently. Google Gemini extends these agents with advanced machine learning and natural language processing capabilities. The guide walks through the process step by step, from setting up the development environment to coding and testing the agents, and emphasizes practices that keep agents modular and scalable. Practical examples show how to implement data processing, real-time event handling, and interaction with external APIs. With these tools, developers can build versatile agents that respond in real time to varied inputs and events, streamlining workflows and improving user experiences. The guide is a useful resource for developers who want to apply event-driven designs to AI applications.
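The event-driven pattern the guide describes can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: `MiniAgent`, `Query`, `Answer`, and `fake_llm` are hypothetical names, the code does not use or reproduce the UAgents API, and `fake_llm` only stands in for a Gemini call. Handlers are registered per message type with a decorator and dispatched from an asyncio queue.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Dict, List

# Hypothetical signature for a language-model call (a Gemini client could be wrapped to fit it).
LLMFn = Callable[[str], Awaitable[str]]


@dataclass
class Query:
    """Message type the agent reacts to (hypothetical)."""
    text: str


@dataclass
class Answer:
    text: str


class MiniAgent:
    """Toy event-driven agent, NOT the UAgents API: handlers are registered
    per message type and dispatched from an asyncio queue."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._handlers: Dict[type, Callable] = {}
        self._inbox: asyncio.Queue = asyncio.Queue()

    def on_message(self, model: type):
        # Decorator that registers a coroutine handler for one message type.
        def register(fn):
            self._handlers[model] = fn
            return fn
        return register

    async def send(self, msg) -> None:
        await self._inbox.put(msg)

    async def run_until_idle(self) -> list:
        """Process queued events until the inbox is empty; collect handler results."""
        results = []
        while not self._inbox.empty():
            msg = await self._inbox.get()
            handler = self._handlers.get(type(msg))
            if handler is not None:
                results.append(await handler(msg))
        return results


async def fake_llm(prompt: str) -> str:
    # Placeholder for a real model call; it simply echoes the prompt.
    return f"echo: {prompt}"


def build_agent(llm: LLMFn) -> MiniAgent:
    agent = MiniAgent("assistant")

    @agent.on_message(Query)
    async def handle_query(msg: Query) -> Answer:
        return Answer(text=await llm(msg.text))

    return agent


async def main() -> List[Answer]:
    agent = build_agent(fake_llm)
    await agent.send(Query("hello"))
    await agent.send(Query("status?"))
    return await agent.run_until_idle()


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Passing the model call in as a plain async function keeps the agent testable offline; a real client could be swapped in without touching the handler.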

Source link

Creating Modular Event-Driven AI Agents Using UAgents and Google Gemini: A Comprehensive Python Implementation Guide – MarkTechPost

Sam Altman: Shaping the Future of OpenAI, Unveiling ChatGPT’s Origins, and Advancing AI Hardware

Source link

Executive Leadership Hub

CEO Bench is an open research benchmark that evaluates large language models (LLMs) on executive leadership tasks. It poses realistic management questions, collects the models' responses, and scores them to build a leaderboard. The initiative turns around a question CEOs often ask, whether AI can replace all workers, and asks instead whether AI could replace CEOs. As leading LLMs approach the ceiling of this benchmark, the next challenge is finding the smallest model that can run a company effectively. The Python scripts behind the site are available in a public repository, so users can run their own evaluations and extend the question set. All data and code are released under the MIT License, and community contributions are encouraged.
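The ask, collect, score, and rank loop described above can be illustrated with a small sketch. It is not CEO Bench's actual code: the function names, the 0 to 10 scale, and the keyword-based scorer are assumptions chosen for illustration, and a real grader would be far more nuanced.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# A "model" is any function from question text to answer text (hypothetical).
Model = Callable[[str], str]
# A scorer maps (question, answer) to a score in [0, 10] (hypothetical rubric).
Scorer = Callable[[str, str], float]


def run_benchmark(models: Dict[str, Model], questions: List[str],
                  score: Scorer) -> List[Tuple[str, float]]:
    """Ask every model every question, average its scores, and rank by mean."""
    averages = {}
    for name, model in models.items():
        scores = [score(q, model(q)) for q in questions]
        averages[name] = mean(scores)
    # Highest average first; ties broken alphabetically by model name.
    return sorted(averages.items(), key=lambda item: (-item[1], item[0]))


def keyword_score(question: str, answer: str) -> float:
    # Toy stand-in for a real grader: fraction of expected keywords present.
    keywords = ("stakeholders", "risk", "budget")
    return 10.0 * sum(k in answer.lower() for k in keywords) / len(keywords)


if __name__ == "__main__":
    questions = [
        "A key supplier just went bankrupt. What do you do?",
        "The board wants a 20% cost cut. How do you respond?",
    ]
    models = {
        "terse-model": lambda q: "Cut costs.",
        "careful-model": lambda q: "Assess the risk, brief stakeholders, revise the budget.",
    }
    for rank, (name, avg) in enumerate(run_benchmark(models, questions, keyword_score), 1):
        print(f"{rank}. {name}: {avg:.1f}")
```

Because models and scorers are plain functions, the same loop works whether the answers come from an API or from local stubs like the ones above.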

Source link

Recent Scandals Shake Up BookTok Community

A plagiarism scandal is shaking the BookTok community, which had been seen as a refuge from online toxicity. Indie author Laura J. Robert's romance novel “Beverly” drew attention but now faces accusations of mirroring R.J. Lewis' earlier novel, “Obsessed.” Both stories center on a female protagonist and her romance with a childhood friend, and critics have pointed to similarities in the texts, suggesting that Robert may have used artificial intelligence to rework Lewis' book. The case has also prompted speculation that the two authors might be the same person, since Robert's initials (L.J.R.) are Lewis' (R.J.L.) reversed. The incident follows other recent BookTok controversies, including backlash against author Ali Hazelwood over her comments on a beloved character from “The Hunger Games” and Victoria Aveyard's criticisms of generative AI in writing. Robert has deleted her online presence, and “Beverly” has been pulled from Amazon amid ongoing discussion of accountability in the literary community.

Source link

Web3 AI’s Expansion and Innovative Tools Position It Ahead of Avalanche and Stellar in the 2025 Bull Market

Avalanche (AVAX) and Stellar (XLM) are both trending downward, with AVAX heading toward $17.17 and XLM potentially recovering to around $0.26. Their price movements reflect broader market uncertainty and investor caution. Web3 ai, by contrast, is thriving: it has raised $8.3 million, sold 22.7 billion coins, and reports an ROI of 1,747%. Unlike many other projects, Web3 ai stands out for its genuine tools and utility and its focus on user protection. Its features include scam detection and volatility risk models, which make it attractive for secure, informed trading. This positions Web3 ai as a strong contender for the anticipated 2025 crypto bull run, with an emphasis on practical solutions over hype.

Source link

Palantir’s Involvement in Israel’s AI Targeting in Gaza Sparks War Crime Concerns

The article examines the collusion between U.S. intelligence and the American company Palantir Technologies in Israel's military operations against Gaza. It highlights how Palantir's artificial intelligence (AI) systems, which Israeli military officials have described as revolutionary, are used for precise targeting, including recent attacks on clearly marked humanitarian aid vehicles. The technology has reportedly enabled the Israel Defense Forces to carry out many targeted strikes, raising concerns about war crimes and the indiscriminate killing of civilians. The piece scrutinizes the ethical implications of such warfare, particularly the use of data from the U.S. National Security Agency (NSA), which is shared with Israel and includes Palestinians' private communications. It suggests that existing regulations on weapons exports should also apply to AI technologies, which it likens to weapons of mass destruction because they facilitate mass killings through targeted operations. The ongoing violence in Gaza underscores the urgent need for accountability and regulation of these lethal technologies.

Source link

Navigating the Trust Crisis in AI: Insights from Gary Marcus

The article critiques the reliability of large language models (LLMs) such as OpenAI's o3, highlighting their tendency to generate inaccuracies, or “hallucinations.” The author shares personal anecdotes, including a fabricated obituary that misrepresented his career and a friend's misidentified nationality. Despite heavy investment in AI development, these problems persist because the models approximate human language rather than compute factual truths. The author argues that generative AI's inability to discern reality is rooted in its design: it predicts language patterns instead of reasoning over factual data. Unless AI is fundamentally restructured to prioritize truth and reasoning, he contends, hallucinations will continue and undermine the productivity gains these technologies promise. He also warns against anthropomorphizing these models, since they lack the intelligence to validate their own outputs, which leads to persistent errors in the information they generate.
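The point that these models predict likely word sequences rather than check facts can be illustrated with a deliberately tiny next-word model. This is a toy bigram counter with an invented corpus and invented names, not how o3 or any production LLM is built. Although the corpus says "alice is a doctor in paris", the model completes "alice" with the statistically more frequent continuation.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count which word follows which across the training sentences."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def generate(follows: Dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Greedily append the most frequent next word; nothing checks facts."""
    words = [start]
    # .get() avoids creating empty entries in the defaultdict.
    while len(words) < max_words and follows.get(words[-1]):
        words.append(follows[words[-1]].most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    corpus = [
        "alice is a doctor in paris",
        "bob is a lawyer in london",
        "carol is a lawyer in london",
    ]
    model = train_bigrams(corpus)
    print(generate(model, "alice"))  # → alice is a lawyer in london
```

The output is fluent and grammatical yet contradicts the only sentence about Alice in the training data, a small-scale version of the failure mode the article describes.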

Source link

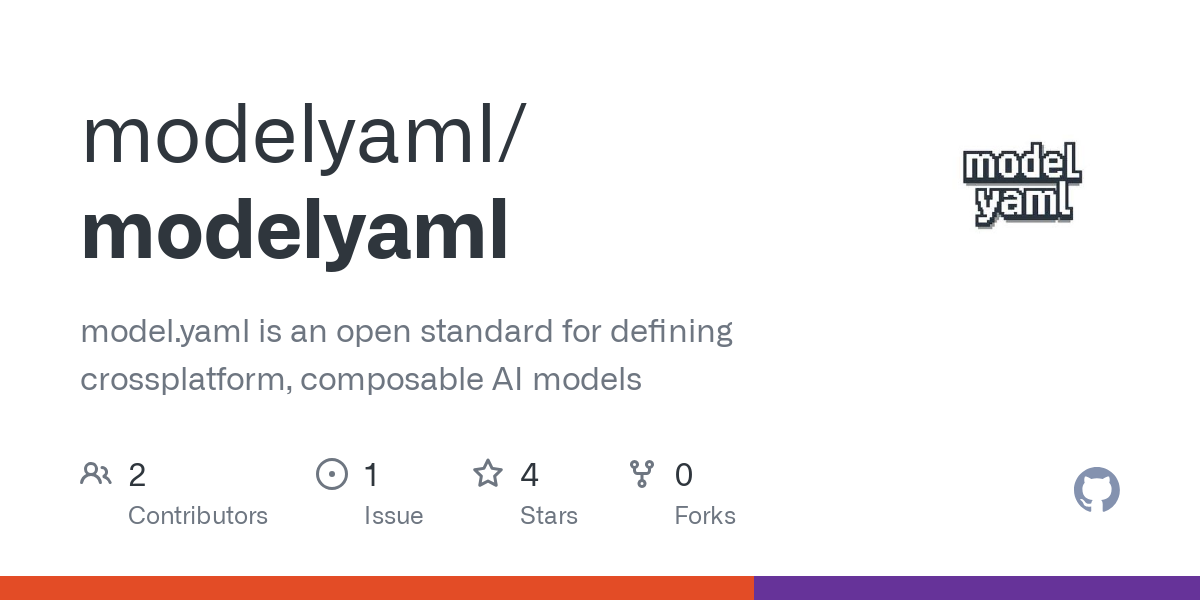

Introducing Model.yaml: An Open Standard for Composable, Cross-Platform AI Model Definition

The model.yaml standard addresses the complexity of managing diverse AI models across platforms by providing a unified configuration format. It lets publishers define a model together with its various sources, so that one definition can be deployed efficiently on engines such as llama.cpp and MLX. The specification, currently a draft, covers model identifiers, metadata overrides, built-in configurations, and user-customizable options.
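One way to picture a single definition with several sources is a resolver that picks the first source a given engine can load. In this sketch the dictionary layout, the example repository names, and the engine-to-format mapping (`gguf` for llama.cpp, `safetensors` for MLX) are assumptions for illustration, not the model.yaml schema.

```python
from typing import Dict, Optional

# Hypothetical mapping from inference engine to the weight format it loads.
ENGINE_FORMATS: Dict[str, str] = {"llama.cpp": "gguf", "mlx": "safetensors"}

# One model identifier pointing at several concrete sources (field names assumed).
DEFINITION = {
    "model": "example-org/example-model-8b",
    "sources": [
        {"format": "gguf", "repo": "example-org/example-model-8b-GGUF"},
        {"format": "safetensors", "repo": "example-org/example-model-8b-MLX"},
    ],
}


def pick_source(definition: dict, engine: str) -> Optional[dict]:
    """Return the first source whose format the given engine can load, else None."""
    wanted = ENGINE_FORMATS.get(engine)
    for source in definition["sources"]:
        if source["format"] == wanted:
            return source
    return None  # no compatible source for this engine


if __name__ == "__main__":
    for engine in ("llama.cpp", "mlx", "vllm"):
        src = pick_source(DEFINITION, engine)
        print(engine, "->", src["repo"] if src else "no compatible source")
```

Keeping the engine mapping separate from the definition means a new engine only needs a new mapping entry, not a new model definition.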

This open standard simplifies model publication and improves usability: models can be chained, default settings can be tuned for performance, and custom fields can change a model's behavior dynamically. Users can contribute to the standard's evolution toward cross-platform composability of AI models. Key attributes such as domain type, architectures, compatibility types, and minimum memory requirements are explicitly defined, which streamlines implementation across varied environments. Overall, model.yaml is a significant step toward cohesive AI model management.
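Chaining, tuned defaults, custom fields, and minimum-memory checks can be pictured together in one small resolver. This is a sketch under assumptions: the registry, the keys (`base`, `metadata`, `config`, `custom_fields`, `min_memory_bytes`), and the rule that a derived model overrides its base are invented for illustration and do not reproduce the model.yaml schema.

```python
from typing import Any, Dict

# Hypothetical registry; "base" chains one definition onto another.
REGISTRY: Dict[str, Dict[str, Any]] = {
    "example-org/base-8b": {
        "metadata": {"domain": "llm", "architectures": ["llama"],
                     "min_memory_bytes": 6_000_000_000},
        "config": {"temperature": 0.8, "top_k": 40},
    },
    "example-org/base-8b-tuned": {
        "base": "example-org/base-8b",
        "metadata": {"min_memory_bytes": 7_000_000_000},  # override one key
        "config": {"temperature": 0.6},                    # tuned default
        "custom_fields": {"enable_thinking": {"default": False,
                                              "sets": {"reasoning": True}}},
    },
}


def resolve(model_id: str) -> Dict[str, Any]:
    """Walk the base chain; each section is shallow-merged, derived keys win."""
    entry = REGISTRY[model_id]
    if "base" in entry:
        merged = resolve(entry["base"])
    else:
        merged = {"metadata": {}, "config": {}, "custom_fields": {}}
    for section in ("metadata", "config", "custom_fields"):
        merged[section] = {**merged[section], **entry.get(section, {})}
    return merged


def effective_config(model_id: str, user_choices: Dict[str, Any],
                     available_bytes: int) -> Dict[str, Any]:
    """Check memory, then apply the effects of any custom fields switched on."""
    resolved = resolve(model_id)
    if available_bytes < resolved["metadata"].get("min_memory_bytes", 0):
        raise MemoryError(f"{model_id} needs more memory than is available")
    config = dict(resolved["config"])
    for name, field in resolved["custom_fields"].items():
        if user_choices.get(name, field["default"]):
            config.update(field["sets"])
    return config


if __name__ == "__main__":
    print(effective_config("example-org/base-8b-tuned",
                           {"enable_thinking": True}, 8_000_000_000))
```

The shallow per-section merge lets a derived model change a single default (here `temperature`) while inheriting everything else, such as `architectures` and `top_k`, from its base.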