Oracle hit an all-time high in extended trading after reporting a sharp increase in bookings and a strong outlook for its cloud infrastructure business. Best known for its database software, Oracle is gaining ground in the competitive cloud computing market. It recently signed a landmark agreement with OpenAI for 4.5 gigawatts of data center capacity, enough to power millions of U.S. homes. Demand from prominent clients, including TikTok and Nvidia, pushed remaining performance obligations to $455 billion at the end of the fiscal first quarter, more than four times the year-earlier figure. Oracle’s shares jumped more than 26% in after-hours trading, having closed the regular session at $241.51. If the gains hold, the stock would surpass its previous record high of $256.43. Oracle’s stock has risen 45% this year, significantly outperforming the S&P 500.

Oracle Stock Soars 26% Fueled by Cloud Expansion and OpenAI Partnership

Worldcoin (WLD) Approaches $4B Market Cap Amid Sam Altman’s OpenAI Securing $300B Oracle Partnership

Worldcoin (WLD) surged more than 107% this week, fueled by OpenAI’s $300 billion computing power deal with Oracle. Meanwhile, Ethereum treasury firm Bitmine made a strategic investment in Worldcoin’s treasury, announcing a $250 million private placement. Tom Lee, Chairman of Bitmine, emphasized the alignment of WLD, an ERC-20 token, with Ethereum, citing its potential for enhancing trust in technology platforms. As of Wednesday, WLD traded above $1.80, with a market cap of $3.68 billion. Investors are eyeing a $4 billion valuation, supported by a bullish breakout from a multi-month falling wedge pattern that points to strong buying momentum. Immediate resistance lies at $2.50; a clean breakout could carry WLD toward a long-term target of $9. Additionally, Best Wallet (BEST), a multi-chain storage solution, is gaining traction in the market. Its presale, which still offers discounted tokens, has raised over $15.5 million.

Prophet Security Report Reveals Critical Demand for AI in Security Operations Centers

Security Operations Centers (SOCs) are overwhelmed, according to the State of AI in Security Operations 2025 report by Prophet Security. Based on a survey of nearly 300 security leaders, the report finds that 40% of alerts go uninvestigated, leading to significant breaches. Alert dwell time averages around an hour, and high volumes of false positives are driving burnout and low morale among analysts, whose average tenure has dropped to just 12 months.

Co-founder Grant Oviatt emphasizes the importance of artificial intelligence (AI) as a transformative force, allowing analysts to focus on high-value tasks by automating low-level investigations. The report also noted that data privacy concerns, rather than fears of job loss, hinder AI adoption. Security teams must prioritize transparency and compliance to gain trust in AI solutions.

Ultimately, measuring the return on investment (ROI) in AI-enabled tools is essential for securing executive buy-in, demonstrating reduced breach risks and enhanced operational efficiency.

Streamlined AI Equation?

Unlocking the Future of AI Behavior! 🚀

Delve into the fascinating world of Artificial Intelligence as I share a groundbreaking formula for AI behavior representation:

A(t) = f(C(t), S(t), R(t), M_meta(t), M_memory(t)). This insight invites discussion and interpretation, aiming to spark deeper understanding within the tech community.

Key Highlights:

- Compact Representations: Learn how to simplify AI behavior models.

- Engage with the Formula: Explore its components—context, structure, responses, and memory.

- Interactive Discussion: Your insights and interpretations are essential!

This formula represents not just a mathematical expression, but a pathway to discussing the implications of AI in our lives. Join the conversation and bring your perspectives to the forefront!
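One way to make the formula concrete is a small sketch in Python. The post does not define the symbols beyond naming context, structure, responses, and memory, so everything here is an assumption for illustration: the field names, the reading of M_meta as settings that govern how memory is used, and the body of `behavior` (a stand-in for f) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AgentState:
    """Inputs to A(t); the mapping from symbols to fields is assumed."""
    context: str        # C(t): the current prompt or situation
    structure: dict     # S(t): configuration such as an operating mode
    responses: list     # R(t): responses produced so far
    meta_memory: dict   # M_meta(t): settings that govern memory use
    memory: list        # M_memory(t): stored items that can be recalled


def behavior(state: AgentState) -> dict:
    """Hypothetical f: folds the five inputs into one behavior record."""
    memory_enabled = state.meta_memory.get("enabled", True)
    return {
        "context": state.context,
        "mode": state.structure.get("mode", "default"),
        "last_response": state.responses[-1] if state.responses else None,
        # Recall the two most recent memory items only if memory is enabled.
        "recalled": state.memory[-2:] if memory_enabled else [],
    }
```

The point of the sketch is only that A(t) depends on all five inputs at once: changing any one of them, such as switching memory off in `meta_memory`, changes the resulting behavior record.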

💬 Join me by sharing your thoughts! Let’s connect and unravel the complexities of AI together. Comment here!

OpenAI Allegedly Faces $300 Billion Bill from Oracle Cloud, According to The Register

OpenAI is poised to enter a five-year, $300 billion agreement with Oracle for the compute capacity needed to advance Sam Altman’s AI initiatives. The contract, which starts in 2027, aims to secure five gigawatts of compute power that the company considers essential for its growth. It also raises questions about funding, since OpenAI does not project a profit until 2029. Separately, SoftBank is reportedly investing $19 billion in the OpenAI-led Stargate project, which focuses on building large AI data centers. Oracle’s share price recently rose by more than 30% on news of its OpenAI commitment, signaling strong investor confidence. Oracle’s CEO forecasts that annual revenue from its cloud infrastructure business will rise from $18 billion today to $144 billion by 2031. As the agreement unfolds, the sustainability of OpenAI’s financial strategy remains a critical focal point for investors and industry observers alike.
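To put the headline figures in proportion, here is a short back-of-the-envelope calculation in Python. It uses only the numbers quoted above; the even split of the contract across its five years is an assumption, since the summary does not describe the payment schedule.

```python
# Figures quoted in the summary above, in billions of US dollars.
contract_total_usd_bn = 300
contract_years = 5

# Assumption: spending is spread evenly over the contract term.
avg_annual_spend_usd_bn = contract_total_usd_bn / contract_years

cloud_revenue_now_usd_bn = 18
cloud_revenue_2031_usd_bn = 144

# How many times larger the forecast 2031 revenue is than today's.
growth_multiple = cloud_revenue_2031_usd_bn / cloud_revenue_now_usd_bn

print(f"Average annual contract value: ${avg_annual_spend_usd_bn:.0f}B")  # $60B
print(f"Forecast revenue multiple: {growth_multiple:.0f}x")               # 8x
```

Under the even-split assumption, the contract alone would average more than three times Oracle’s current annual cloud infrastructure revenue of $18 billion.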

Google Unveils Gemini’s Daily Limits for Prompts and Image Generation

Google has clarified its Gemini AI usage limits, eliminating vague terminology and specifying daily allowances for both free and paid users. Free Gemini 2.5 Pro users are entitled to five prompts per day and a 32,000-token context window. The AI Pro plan, priced at $20/month, increases the limit to 100 prompts and offers additional features such as a 1 million-token context, 1,000 image generations, and 20 Deep Research reports daily. The more advanced AI Ultra plan, costing $250/month, allows for 500 prompts and enhances features with 200 Deep Research reports and five Veo 3 video creations each day. These straightforward updates aim to help users understand what they get with each subscription level, ensuring they can choose the most suitable option based on their needs. Users can find detailed information in Google’s Help Center article, “Gemini Apps limits & upgrades for Google AI subscribers.”
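The limits above can be collected into a small lookup table, sketched here in Python. The dictionary keys and the `plan_limit` helper are hypothetical names chosen for illustration, not part of any Google API. Only values stated in the summary are included, so a feature the summary does not mention for a plan returns `None` rather than a guessed number.

```python
from typing import Optional

# Limits as reported in the summary above. Counts are per day unless the key
# says otherwise; the key names are illustrative, not official.
GEMINI_PLAN_LIMITS = {
    "free": {
        "prompts": 5,
        "context_tokens": 32_000,
    },
    "ai_pro": {
        "price_usd_month": 20,
        "prompts": 100,
        "context_tokens": 1_000_000,
        "image_generations": 1_000,
        "deep_research_reports": 20,
    },
    "ai_ultra": {
        "price_usd_month": 250,
        "prompts": 500,
        "deep_research_reports": 200,
        "veo3_videos": 5,
    },
}


def plan_limit(plan: str, feature: str) -> Optional[int]:
    """Return the reported limit, or None if the summary does not state one."""
    return GEMINI_PLAN_LIMITS.get(plan, {}).get(feature)


print(plan_limit("ai_pro", "prompts"))    # 100
print(plan_limit("free", "veo3_videos"))  # None
```

Returning `None` for unstated values keeps the table honest about what the summary actually reports; Google’s Help Center article remains the authoritative source for current limits.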

Unraveling the Four Misconceptions of Modern AI

Navigating the Hyped Landscape of AI: Insights and Frameworks

In the fast-paced world of Artificial Intelligence, the journey can feel like a wild ride, oscillating between extraordinary breakthroughs and alarming setbacks. My recent exploration distills this complexity into actionable insights:

- Understanding Hype: AI’s narrative swings between promises of cure and cautionary tales of collapse, with cycles of boom and bust shaping the field.

- Critical Framework: I use Melanie Mitchell’s four foundational fallacies to dissect AI’s hype, examining their implications for society and the economy.

- Real-World Applications: These fallacies illuminate why our perceptions of intelligence are often misleading, distorting expectations and public trust.

The crux of progress involves harmonizing scaling methods with deep cognitive understanding. Bridging these paradigms can lead to a more responsible and grounded AI landscape.

Join the conversation! How do you navigate the complexities of AI in your work? Share your thoughts and insights below! 🔗 #AI #ArtificialIntelligence #TechInnovation

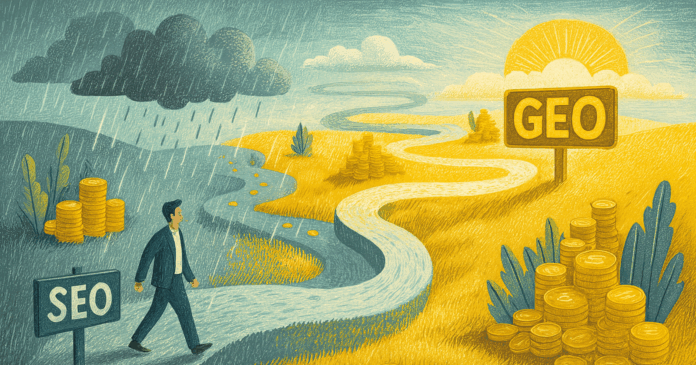

Establishing Your Agency as a Leading Authority in AI Search

Businesses increasingly struggle for visibility in AI search, with Google’s AI Overviews cutting organic traffic by 30-70%. Agencies that adopt Generative Engine Optimization (GEO) strategies are expanding their service offerings and charging substantial fees. To navigate this shift, an AI Visibility Audit is essential. The audit tests key phrases against AI search engines such as Google and ChatGPT to identify visibility gaps and competitor citations.

Interpreting audit results helps agencies highlight their clients’ standing—whether highly visible, partially visible, or absent. Connecting insights from the audit to traffic changes is crucial for client communication. Implementing GEO tactics, such as improving on-page SEO, enhancing entity clarity, and optimizing technical aspects, is essential for regaining visibility.

Visto’s platform streamlines monitoring and reporting, making it easier for agencies to provide measurable results and stay ahead of AI search trends. Immediate action can position agencies as experts, helping clients cope with the AI shift effectively.

Datacom Identifies AI Agents as Key Drivers in Legacy Application Modernization – ARN

Datacom is at the forefront of AI implementation in Australia and New Zealand, ensuring data sovereignty and governance while processing AI workloads. By deploying AI agents under human supervision, the company maintains high security and quality standards. These agents automate documentation and validation processes, enhancing compliance and audit readiness, according to Datacom’s Macfarlane. The structured approach minimizes disruption while preserving quality control, much as traditional access management restricts access to only the data that is needed.

Lou Compagnone, Datacom’s AI director, emphasizes that integrating agentic AI with software engineering teams is revolutionizing application modernization. AI agents cut coding and testing work that previously took weeks or months down to mere days, while robust oversight ensures accuracy and adherence to Datacom’s standards. By embedding agentic AI into its delivery models, Datacom shows how AI can drive rapid transformation without compromising trust and governance, positioning itself as a leader in the industry.
