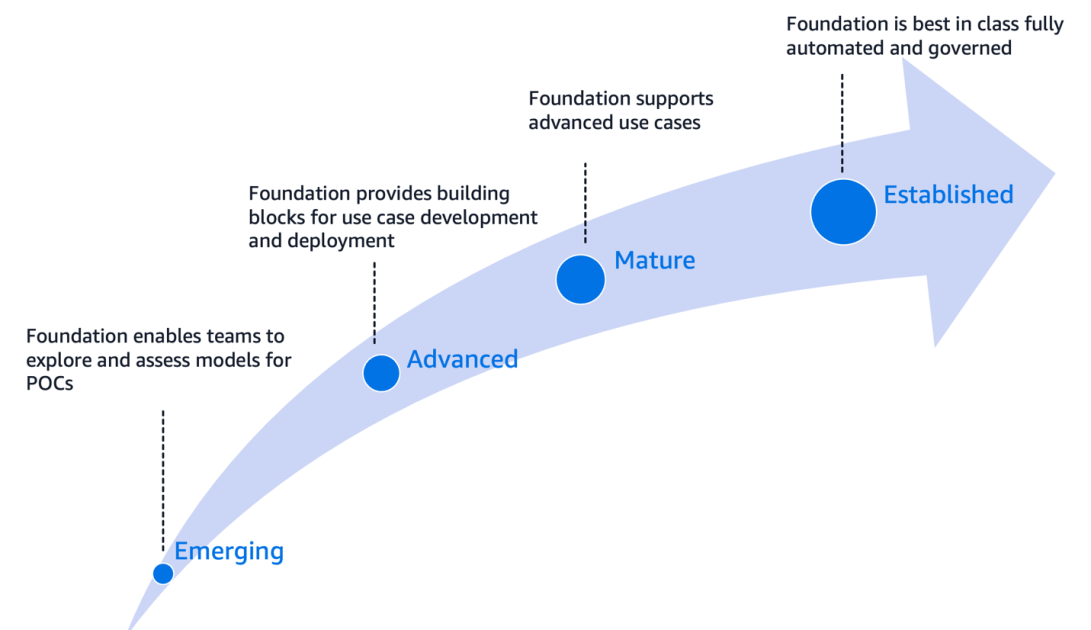

Generative AI applications may look simple, but they involve complex workflows that combine foundation models (FMs), APIs, and domain-specific data. They also require robust safety controls and operational elements such as CI/CD. Siloed generative AI initiatives often leave organizations fragmented, which causes inefficiencies and inconsistent governance. To counter this, more organizations are adopting a centralized, unified approach in which departments share foundational services. This improves scalability and fosters innovation while reducing costs. A generative AI foundation comprises key components such as model and tool hubs, gateways that provide secure API access, workflow orchestration, model customization, data management, and observability tools. Practices such as GenAIOps cover how generative AI is operated, with an emphasis on model governance and on operationalizing training processes. Establishing such a foundation helps organizations address the challenges specific to generative AI and lays the groundwork for successful adoption across a range of use cases. The article also outlines a maturity model that organizations can use to assess how far their generative AI development has progressed, helping them build a well-structured generative AI landscape across the enterprise.

Source link

Build a Robust Generative AI Framework on AWS

The Impact of Meta’s AI-Driven Ad Tools on Creative Agencies

Meta’s goal of fully automating advertising with AI by 2026 poses challenges for creative agencies, making client retention and new business acquisition more difficult. The plan would let brands create and target ads using AI. This reflects a broader industry trend, with platforms such as Google and TikTok also embracing AI-driven advertising. Executives acknowledge the need to adapt, but they stress that AI still requires human oversight because it may not replicate the strategic depth and storytelling that agencies provide. Many agencies are integrating their own AI tools into their offerings, positioning themselves as valuable intermediaries between brands and automated systems. Concerns remain, however, about the quality of AI-generated content and the risk that brands lose their distinctiveness amid a flood of “ad slop.” Ultimately, AI will reshape the creative landscape without eliminating the role of agencies. Instead, it compels them to redefine their value around high-level strategy and narrative creation.

Source link

The Frightening Truth About AI: Companies Lack Full Understanding of Their Models – Axios

A recent Axios report raises concerns that companies do not fully understand their artificial intelligence (AI) models. Many organizations use AI for a range of purposes without grasping how these models work, which creates potential risks and misapplications. This lack of transparency can lead to biased outcomes and other unintended consequences as more decision-making is delegated to AI systems. The report stresses that as the technology evolves, companies must improve their understanding and oversight of these systems. Failing to do so could lead to ethical dilemmas, compliance problems, and damaged public trust. In light of these challenges, experts call for greater accountability and for regulatory frameworks that ensure AI is used responsibly. Overall, the report underscores the need for organizations to close their AI knowledge gap so they can mitigate risks and realize the technology’s benefits.

Source link

Former Apple Employees Discuss Why LLM Siri Still Isn’t Ready Ahead of WWDC 2025

Just before Apple’s WWDC 2025 event, former employees shared insights into why the new AI-based Siri isn’t ready for release. Apple is trying to enhance Siri with large language models (LLMs), but the work has proven difficult. Insiders report that integrating the new AI features with the existing Siri system, which runs on millions of devices, has introduced bugs and slowed progress. Competitors such as OpenAI started fresh. Apple, by contrast, has layered upgrades onto an outdated system, and that strategy has not met expectations. Some Apple leaders have described the approach as “climbing the hill,” signaling a preference for gradual improvement over a complete overhaul. It remains uncertain when the revamped Siri will launch, with speculation pointing to a possible release by 2027. Meanwhile, the upcoming WWDC is expected to highlight advances across Apple’s operating systems and AI tools.

Source link

Unveiling OpenAI’s Vision: Transforming ChatGPT into a College Essential

OpenAI’s ChatGPT Edu offers an advanced tier featuring GPT-4o, with data analysis, voice interaction, and tools for building custom GPTs. These capabilities aim to strengthen academic support, facilitate research, and make administration more efficient. On June 2, Duke University launched a pilot program that gives its undergraduates unlimited access to GPT-4o through a secure campus license. Similarly, the California State University system is rolling out the technology across its 23 campuses, reaching more than 460,000 students. These initiatives reflect a commitment to integrating advanced AI tools into education to enrich learning and streamline operations.

Source link

OpenAI Takes Action Against State-Linked Threat Actors Abusing ChatGPT

OpenAI recently blocked several ChatGPT accounts linked to state-sponsored and cybercriminal groups for malicious use of its AI. These groups came from countries including Russia, China, and Iran. In one notable case, a Russian-speaking group used ChatGPT to develop malware that it disguised as Crosshair X, a legitimate gaming tool, in order to steal sensitive data. The group’s operational security was sophisticated, employing tactics such as DLL side-loading and IP obfuscation. OpenAI also detected Chinese APT groups using ChatGPT for research and automated social media manipulation. It also found an Iranian operation crafting politically charged content. In total, nine threat groups were identified misusing ChatGPT for activities such as generating fake content and organizing fraudulent schemes. OpenAI emphasized the dual-use nature of its models. It announced enhanced monitoring of user activity and new collaborations to counter these harmful applications, targeting both cyber threats and manipulative geopolitical information campaigns.

Source link

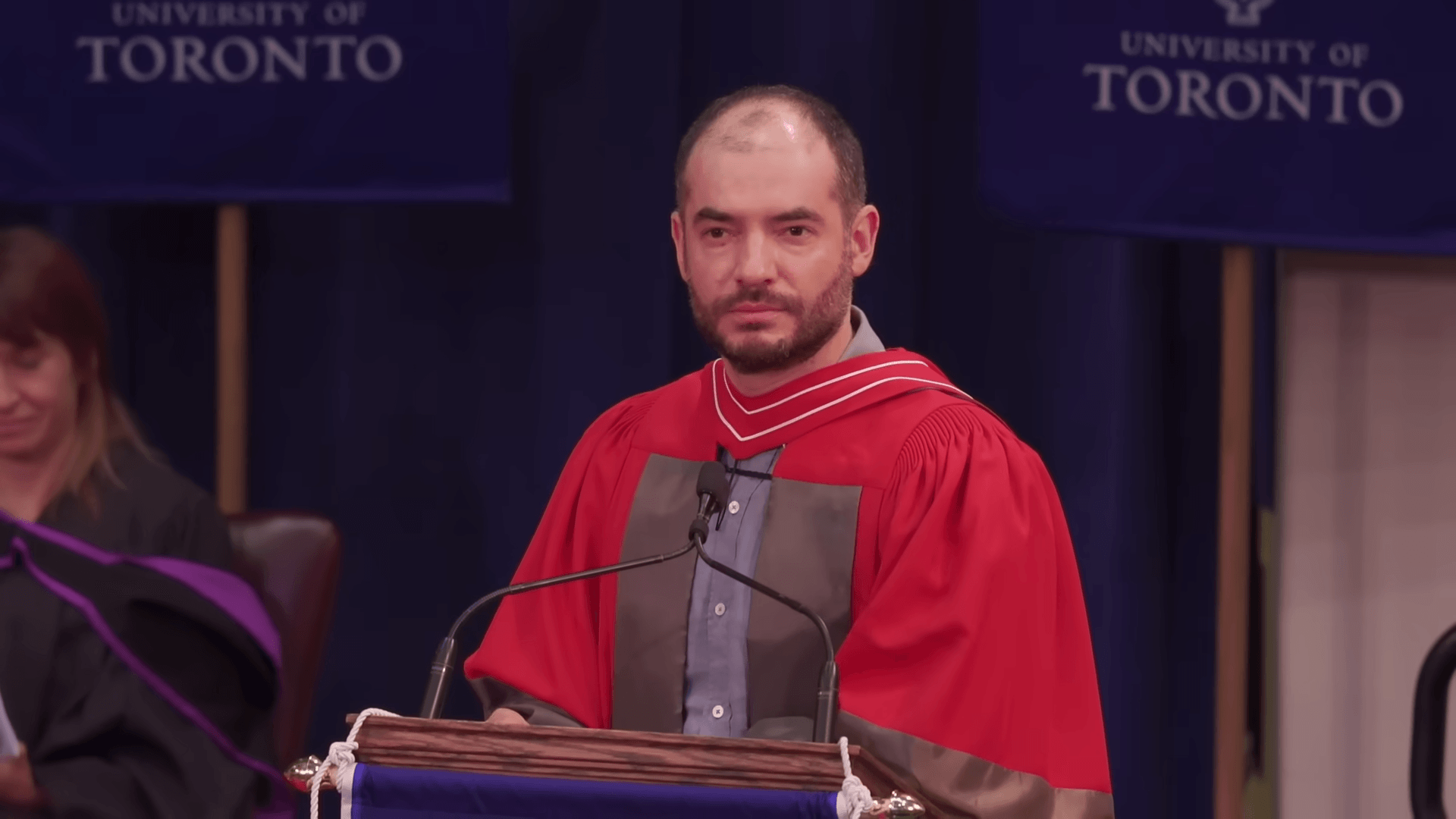

OpenAI Co-Founder Ilya Sutskever: AI Will Impact Everyone’s Lives, “Whether You Like It or Not”

Ilya Sutskever, co-founder of OpenAI, argued in a speech at the University of Toronto that an AI-shaped future is inevitable. He described the present as an era uniquely influenced by AI, one that is affecting education and work in unpredictable ways. Sutskever asserted that AI could achieve human-like learning capabilities, saying, “Anything which I can learn, AI could do as well.” He sees superintelligence as an unavoidable outcome. Its timeline is uncertain, but it could emerge within three to ten years. He noted that AI could drive rapid advancement and economic growth, but it also poses significant challenges. To stress how pervasive AI’s impact will be, he compared it to politics: it will affect everyone’s life, whatever their views. Following internal turmoil at OpenAI, Sutskever founded Safe Superintelligence (SSI) with the aim of building safe superintelligent AI. The company is now valued at over $30 billion despite having no product or revenue.

Source link

Will Gemini Outsmart His Rivals, or Will o3 Betray Claude for Victory? Tune into Our AI-Driven Game of ‘Diplomacy’ on Twitch!

Every, a software and training company, has turned the classic game Diplomacy into a competition among 18 artificial intelligence models, including ChatGPT, Gemini, and Claude. The goal is to see which model can dominate Europe in a game set in 1901. The models play the seven great powers: Austria-Hungary, England, France, Germany, Italy, Russia, and Turkey. Each power starts with specific units and objectives and wins by capturing 18 supply centers. The game alternates between negotiation and order phases, testing the models’ ability to strategize, form alliances, and even deceive. Approximately 15 game sessions revealed interesting dynamics. OpenAI’s o3 emerged as a master manipulator, and Gemini 2.5 Pro frequently outsmarted its rivals. Claude 4 Opus favored diplomacy, often at the cost of victory, while DeepSeek R1 played with a vivid personality. The project aims to evaluate how different AI models handle trust and betrayal, and live sessions are available on Twitch.

Source link

New Research Uncovers AI’s Oversight: Impact on Children

A recent study by The Alan Turing Institute, supported by LEGO, found that 22% of UK children aged 8–12 have used generative AI tools such as ChatGPT, even though these tools are often not designed for young users. The research highlights a stark gap between private and state schools. Some 52% of children in private schools use AI, compared with just 18% in state schools, raising concerns about a widening digital divide. Vulnerable children, especially those with additional learning needs, are turning to AI for emotional and social support, which suggests it serves as more than a homework aid. Children expressed frustration that AI-generated outputs lacked representation, particularly for children of color. Surprisingly, many also voiced environmental concerns about AI’s energy consumption. Parents worry more about inappropriate content than about cheating, while educators stress the need for better AI literacy, especially in underserved schools. The study calls for involving children in AI development to address these issues.

Source link

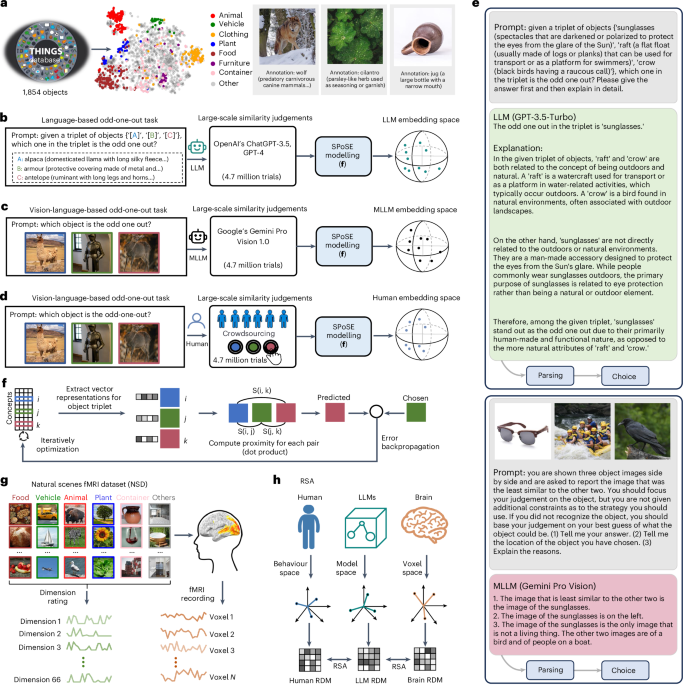

Multimodal Large Language Models Naturally Develop Human-like Object Concept Representations

The works cited in the paper explore human image understanding, categorization, and the neural basis of visual perception. Biederman’s “Recognition-by-components” theory emphasizes how shape components support object recognition. Later studies, such as those by Edelman and Goldstone, examine the relationship between similarity and categorization and propose frameworks for the underlying cognitive processes. Rosch and colleagues discuss natural categories and basic objects, while Mahon and Caramazza examine what cognitive neuropsychology reveals about concepts and categories. More recent studies focus on the interplay between deep learning models and human cognition, such as how well models capture human categorization and object representation. Cross-disciplinary research investigates how visual and linguistic representations align in the human brain and in large language models. Together, these works form a cohesive body of literature that combines insights from psychology, neuroscience, and artificial intelligence to inform our understanding of cognitive processes.

Source link