Karen Hao, an influential Hong Kong-based AI journalist, visits San Francisco to discuss her new book, Empire of AI: Dreams and Nightmares in Sam Altman’s OpenAI. Drawing on her extensive reporting experience and insider access to OpenAI, she addresses the unsettling implications of AI’s rapid growth and its hidden human, environmental, and geopolitical costs. Known for investigative journalism published in outlets such as The Atlantic and The Wall Street Journal, Hao is a leading voice on AI. Her work examines the tech arms race transforming society, including OpenAI’s alliance with Microsoft and the industry’s growing demand for data, energy, and labor. She also sheds light on Sam Altman’s high-profile firing and reinstatement, linking it to broader themes of power, technology, and societal impact. Join her for an urgent conversation about what is at stake as AI evolves and what it means for the future.

McKinsey’s AI Tool Lilli Takes Over Tasks Traditionally Assigned to Junior Analysts

McKinsey & Company has integrated its AI assistant, Lilli, into its daily work, with more than 75% of employees using the tool for tasks traditionally handled by junior analysts, such as drafting presentations and proposals. Launched in 2023, Lilli is designed to boost productivity by freeing consultants to focus on strategic work rather than routine tasks. Although McKinsey says Lilli enhances roles rather than replaces them, industry-wide trends show a decline in entry-level hiring, raising concerns about AI’s impact on job availability. Consulting firms are increasingly adopting similar AI tools, fueling predictions that AI could eliminate a significant portion of entry-level white-collar jobs within five years. Experts warn that the pace of AI adoption may outstrip society’s capacity to adapt, potentially causing economic and social upheaval. While some see AI as a path to efficiency, others fear it may deepen inequality and disrupt traditional career paths.

New Study Reveals Strengths and Weaknesses of Cloud-Based LLM Guardrails

Cybersecurity researchers have examined the strengths and vulnerabilities of cloud-based large language model (LLM) guardrails, which are vital for deploying AI securely in enterprises. While these safety measures help mitigate risks such as data leakage and biased outputs, they can be bypassed through sophisticated techniques and misconfigurations. The study finds that guardrails, which include input validation and output filtering, are vulnerable to crafted adversarial inputs that evade detection. Integration with cloud infrastructure adds risks from misconfigurations such as over-permissive API access, and inconsistent application of security policies in dynamic cloud environments can expose further gaps. Although well-configured guardrails show resilience against common threats, the study calls for ongoing audits, better DevOps training, and adaptive security frameworks to counter evolving threats. As AI systems mature, ensuring the integrity of these guardrails will be crucial for maintaining trust in digital ecosystems.

Severe Weather Challenges: Budget Cuts Threaten the Data AI Tools Need to Bridge Forecasting Gaps

As the 2025 hurricane season begins, the Trump administration is cutting funding and staff at critical federal agencies such as the National Weather Service (NWS) and the Federal Emergency Management Agency (FEMA). This raises concerns about the ability to forecast and respond to severe weather, especially in states like Florida. Artificial intelligence (AI) tools are emerging to improve data analysis and forecasting, but they depend heavily on accurate data collected by the very agencies now facing cutbacks. For instance, some National Oceanic and Atmospheric Administration (NOAA) databases tracking costly climate disasters are being retired, and weather balloon launches have been curtailed because of staffing shortages. Despite the promise of AI, experts worry about relying on incomplete data and the risks this poses during emergencies. The effectiveness of AI-driven tools hinges on reliable data sources, which remain uncertain amid ongoing budget constraints.

Hackers Exploit AI Tool Misconfigurations to Deploy Malicious AI-Generated Payloads

A threat actor exploited a misconfigured instance of Open WebUI, a popular self-hosted AI interface, to carry out a series of attacks, underscoring the risks of leaving AI tools exposed. The misconfiguration gave the attacker administrative access, which was used to inject malicious AI-generated Python scripts that deployed cryptominers and infostealer malware on both Linux and Windows systems.

The Linux attack involved sophisticated techniques to hide cryptominers, utilizing tools like “processhider” for defense evasion and a deceptive service for persistence. In contrast, the Windows attack used a malicious Java JAR file that triggered further malware installations targeting sensitive data like Chrome extensions and Discord tokens.

Sysdig Secure detected the attacks, but the incident showed how little visibility defenders had into these threats and how complex and risky misconfigured AI systems can be. It serves as a clear warning that exposed AI tools need robust security measures to prevent similar exploits.

OpenAI Set to Collaborate on Major Initiatives with U.S. Intelligence and Defense Agencies – Washington Times

OpenAI is set to collaborate with U.S. intelligence agencies and the Pentagon on major projects aimed at strengthening national security and technological capabilities. The partnership aims to use advanced AI to improve data analysis, operational efficiency, and decision-making in defense and intelligence operations, with a focus on systems that can process vast amounts of information quickly and deliver actionable insights for military and intelligence use. OpenAI’s expertise in AI and machine learning could bring new solutions to the challenges these agencies face. The collaboration also raises ethical concerns and fears of misuse, prompting discussion of oversight and accountability when AI is applied in security contexts. Overall, the initiative marks a significant step in integrating cutting-edge technology into national defense strategy and highlights the growing intersection of AI and government operations.

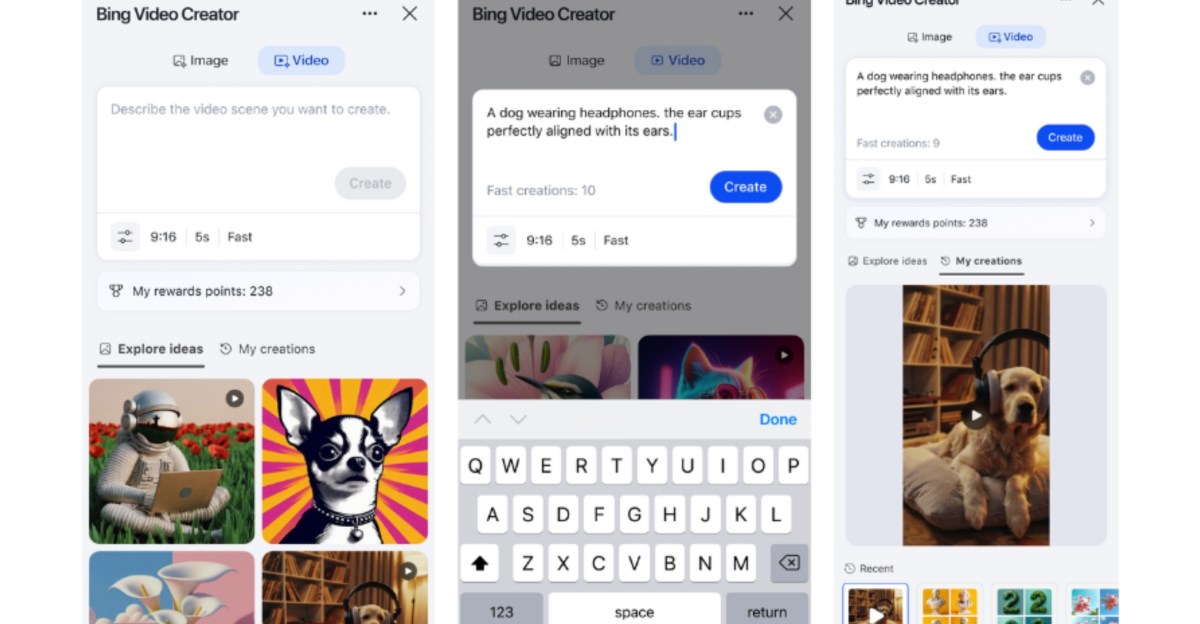

Bing Offers Free Access to OpenAI’s Sora Video Generator

Microsoft has introduced Bing Video Creator, powered by OpenAI’s Sora, with the aim of making AI video generation accessible and effortless for everyone. The service is rolling out globally through the Bing mobile app for Android and iPhone, with desktop and Copilot Search support expected soon. Users can open the video generator from the app’s menu or type a description into the search bar. The tool accepts up to three video requests at a time and sends a notification when each video is ready. There are two generation speeds: a free Standard speed and a Fast option that produces videos in seconds; after the first ten free Fast generations, further Fast generations are limited. Each video is five seconds long in a 9:16 vertical format, with 16:9 support to come. Videos are stored in the Bing app for 90 days and can be downloaded or shared to other platforms.

OpenAI Expands AI for Impact Accelerator Program in India, Empowering 11 Nonprofits

OpenAI has expanded its AI for Impact Accelerator Programme in India, emphasizing the use of AI for addressing real-world social issues. The program, part of OpenAI Academy, grants API credits worth $150,000 to 11 Indian nonprofits, aiming to make AI more accessible and impactful. Collaborating with partners like The Agency Fund and Tech4Dev, the initiative provides these organizations with hands-on mentorship, early access to AI tools, and collaborative learning.

Participating nonprofits are utilizing OpenAI’s technology to effect change in various sectors, including healthcare, education for underserved communities, agriculture, disability inclusion, and gender equity. This expansion aligns with the India AI Mission, which aims to democratize AI access and foster local innovation. OpenAI plans to onboard more organizations and provide continuous support, marking a strategic move towards developing human-centered, scalable AI solutions focused on social impact.

Meta’s AI-Driven Ads Pose a Challenge to Traditional Advertising Agencies

Meta is set to automate ad creation and targeting with artificial intelligence by the end of next year, a move that poses significant challenges for traditional advertising agencies. The AI-driven platform will let brands generate complete marketing campaigns, including visuals and copy, from just a product image and a budget. CEO Mark Zuckerberg has described this as a transformative shift in advertising, and the news weighed on agency stocks: major companies such as WPP and Publicis Groupe saw their shares decline, reflecting fears of disrupted revenue streams.

Although Meta’s leadership says the AI tools aren’t designed to displace agencies, it acknowledges their potential to empower smaller businesses that lack agency resources. Meta plans substantial investments and has significantly raised its 2025 capital expenditure forecast to fund AI development. However, concerns linger that over-reliance on AI could undermine human creativity and insight in advertising, forcing the industry to weigh the implications of replacing human craftsmanship with algorithms. The balance between efficiency and creativity remains a critical issue.

Google’s AI Set to Revolutionize Email Management by Responding on Your Behalf

Demis Hassabis, CEO of Google DeepMind, said at SXSW London that the company is developing an advanced AI email tool that manages inboxes by sorting messages and replying in the user’s own voice. He sees this as part of a broader vision for a personalized AI assistant that lightens digital burdens and shields users from attention-seeking tech algorithms. Although he believes AI’s short-term impact is overhyped, Hassabis predicts that artificial general intelligence (AGI) is just five to ten years away, and he encourages global collaboration to address its ethical concerns.

Despite his optimism about AI’s potential to solve major societal problems, he raises critical questions about the potential loss of individuality. As AI becomes more capable of making decisions on our behalf, we risk sacrificing, in the pursuit of convenience, some of our humanity and the rich, imperfect experience of making choices ourselves.