UM’s AI-Powered WanderWell App Tackles Health Equity Challenges

Contrast Security Unites Graph and AI Technologies for Enhanced Application Security

Contrast Security has released an update to its application detection and response platform that incorporates graph and AI technologies to strengthen security for applications and APIs. The new Northstar release lets security teams visualize live attack paths and correlate vulnerabilities and assets in real time. CTO Jeff Williams explained that security operations teams can now detect and halt application-layer attacks as they occur and use generative AI to speed up remediation tasks, such as creating patches and test scripts. The platform also assesses vulnerability risk dynamically to help teams prioritize their responses. Contrast Security’s Model Context Protocol (MCP) server improves data sharing with other platforms, and its Flex agent software simplifies the deployment and management of security updates. Even so, human error in coding persists, so teams must keep improving how quickly they detect and fix vulnerabilities, especially as cybercriminals adopt AI tools as well.
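The idea of correlating vulnerabilities with assets to surface attack paths can be illustrated with a small graph search. This is a minimal sketch, not Contrast Security’s implementation: the asset names, the `EDGES` graph, the `VULNERABLE` set, and the `attack_paths` function are all hypothetical, and a path is counted only when every intermediate hop carries a known finding.

```python
from collections import deque

# Hypothetical asset graph: an edge A -> B means "A can reach or call B".
EDGES = {
    "internet": ["api-gateway"],
    "api-gateway": ["orders-service", "auth-service"],
    "orders-service": ["orders-db"],
    "auth-service": ["user-db"],
    "orders-db": [],
    "user-db": [],
}

# Hypothetical findings: assets with a known, exploitable vulnerability.
VULNERABLE = {"api-gateway", "orders-service"}


def attack_paths(edges, start, target, vulnerable):
    """Return all simple paths from start to target in which every
    intermediate hop is a vulnerable asset (breadth-first search)."""
    paths = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            paths.append(path)
            continue
        for nxt in edges.get(node, []):
            if nxt in path:
                continue  # avoid cycles
            if nxt != target and nxt not in vulnerable:
                continue  # an attacker cannot pivot through a clean asset
            queue.append(path + [nxt])
    return paths


if __name__ == "__main__":
    for p in attack_paths(EDGES, "internet", "orders-db", VULNERABLE):
        print(" -> ".join(p))
```

In this toy graph the orders database is reachable through two vulnerable services, while the user database is not, because the auth service has no known finding. A prioritization step could rank such paths by the sensitivity of the target asset.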

AI Makes ‘Protected’ Images Easier to Steal, Not Harder

Research indicates that watermarking tools intended to protect images from manipulation by generative AI models such as Stable Diffusion may inadvertently make those images easier to edit. These tools aim to prevent copyrighted visuals from being used in generative AI pipelines, but new findings suggest that adding adversarial noise to an image can make it more responsive to editing prompts, so the output follows the prompt more closely rather than resisting it. Several methods, including PhotoGuard, Mist, and Glaze, were tested on natural and artistic images, and in each case the added noise made the AI more effective at modifying the protected images. The researchers therefore argue that current perturbation-based protections may provide a false sense of security and can even facilitate unauthorized exploitation of content. The study calls for more effective safeguards against AI-driven manipulation and points to the limits of adversarial perturbations as a way to protect intellectual property.

Apple Study Reveals “Fundamental Scaling Limitations” in Reasoning Models’ Cognitive Capabilities

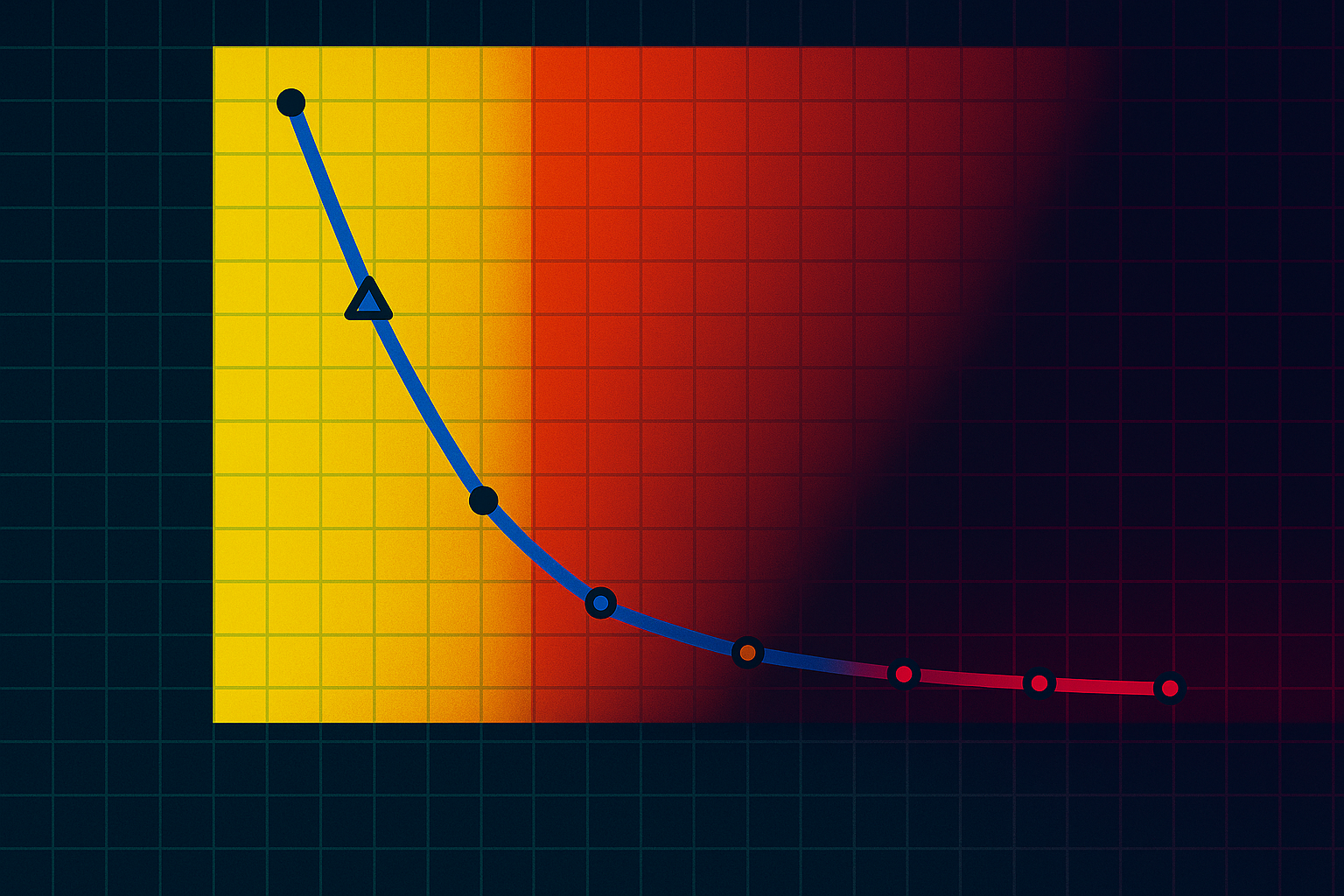

Apple researchers conducted a study revealing significant limitations in reasoning-focused large language models (LLMs) such as Claude 3.7 and DeepSeek-R1. These models use chain-of-thought and self-reflection techniques to tackle complex problems, yet their performance degrades as task difficulty rises. The study identified three problem-solving regimes: non-reasoning models do better on simple tasks, reasoning models pull ahead at moderate complexity, and both falter dramatically at high complexity. Despite their strength at intermediate levels, all models suffered a performance collapse on the hardest problems, often reducing their reasoning effort as difficulty increased. The findings imply that current LLMs do not develop general problem-solving strategies and rely on complex pattern matching rather than true reasoning. The study criticizes the anthropomorphizing of LLM outputs, emphasizing that they are statistical calculations rather than genuine thoughts. The researchers therefore advocate reevaluating the design principles behind these models to improve their reasoning capabilities.

Northwestern Medicine’s AI Tool Boosts Productivity Significantly

A new AI radiology tool developed by Northwestern Medicine improves productivity and speeds up the detection of life-threatening conditions, helping to address the global shortage of radiologists. During a five-month study across 11 hospitals, the AI analyzed approximately 24,000 reports. Led by Dr. Mozziyar Etemadi, the research found an average efficiency increase of 15.5% in report completion, with some radiologists achieving gains of up to 40%, all without sacrificing accuracy. Follow-up work reportedly points to potential efficiency improvements of up to 80%, particularly for CT scans. The tool generates near-complete reports in the radiologist’s own style, monitors for critical findings, cross-references patient records, and alerts professionals to urgent cases. It is designed to assist radiologists rather than replace them. The technology is in the early stages of commercialization, with two patents granted. Dr. Etemadi describes it as a significant advance in healthcare productivity.

Nvidia CEO: UK Must Boost Computing Power to Advance AI Development – Reuters

Nvidia’s CEO emphasized the importance of robust computing power for the UK’s AI development. He highlighted that as AI technology advances, significant computational resources are essential for innovation and growth in this sector. The CEO expressed concerns that without adequate infrastructure and investment in computing capabilities, the UK risks falling behind in the global AI landscape. He urged the government and private sector to collaborate on enhancing computing resources to attract talent and capitalize on AI opportunities. Additionally, he pointed out that advancements in AI can lead to breakthroughs across various industries, underscoring the need for immediate action to improve the UK’s technological framework. Investing in computing power will not only support AI development but also stimulate economic growth and maintain competitiveness on the world stage.

Groundbreaking AI Tool Addresses Key Vulnerability in Thousands of Open Source Applications – InfoWorld

A new AI tool has been developed to address significant vulnerabilities in thousands of open-source applications. These vulnerabilities often stem from outdated or insecure dependencies that can expose software to potential cyber threats. The tool utilizes advanced algorithms to automatically scan and analyze codebases, identifying weaknesses and suggesting necessary updates to enhance security. This proactive approach aims to bolster the overall safety of open-source software, which is widely used across various industries. By streamlining the process of vulnerability detection and remediation, the AI tool not only helps developers maintain secure applications but also reduces the risk of cyber attacks. This innovation reflects a growing trend in leveraging artificial intelligence to improve software security, ensuring that developers can focus more on building features rather than managing risks. As open-source software continues to gain popularity, such tools are essential for safeguarding user data and preserving the integrity of applications.
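The summary does not describe how the tool works internally. As a rough illustration of the simplest form of dependency checking, the sketch below compares installed versions against the first fixed version listed in an advisory. The package names, the `ADVISORIES` data, and both functions are hypothetical, and version strings are assumed to be plain dotted integers.

```python
def parse_version(text):
    """Turn '1.2.3' into (1, 2, 3) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical advisory data: package name -> first version with the fix.
ADVISORIES = {"examplelib": "2.4.1", "demo-parser": "0.9.0"}


def suggest_updates(installed, advisories):
    """Return {package: fixed_version} for packages older than the fix."""
    suggestions = {}
    for name, version in installed.items():
        fixed = advisories.get(name)
        if fixed is not None and parse_version(version) < parse_version(fixed):
            suggestions[name] = fixed
    return suggestions


if __name__ == "__main__":
    installed = {"examplelib": "2.10.0", "demo-parser": "0.8.5", "other": "1.0.0"}
    print(suggest_updates(installed, ADVISORIES))
```

Parsing versions into integer tuples matters here: a plain string comparison would wrongly treat `2.10.0` as older than `2.4.1`.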

XcelLabs: Pioneering an AI-First Mindset for Accountants

XcelLabs, co-founded by Jody Padar and Katie Tolin in collaboration with the Pennsylvania Institute of CPAs and CPA Crossings, aims to revolutionize the accounting sector by promoting an AI-first mindset among teams in larger firms. The platform offers a training and technology solution that enhances accountants’ skills in AI and strategic thinking through its XcelLabs Academy, which includes online courses on AI fundamentals, leadership, and advisory skills. Utilizing Navi AI technology, XcelLabs transforms unstructured data into actionable insights, catering to individual users’ emotions and needs. Currently in beta, XcelLabs emphasizes a unique approach by integrating strategy with practical AI tools to upskill professionals rather than merely automating their tasks. This initiative aims to create “AI-X(SM)” firms that leverage AI for excellence, maintaining a human-centric focus in the evolving accounting profession and supporting long-term growth and relevance.

Sam Altman of OpenAI Predicts AI Will Soon Displace Entry-Level Jobs – Here’s How Gen Z Is Adapting Differently Than Expected

The future of work is changing rapidly as AI approaches the capabilities of entry-level employees, according to OpenAI CEO Sam Altman. Current AI can already perform tasks typically assigned to junior staff, and Altman forecasts that more advanced capabilities will emerge shortly, enabling AI to tackle complex challenges of the kind handled by experienced professionals. This potential shift raises concerns about job displacement, with predictions that AI could eliminate as much as half of entry-level white-collar jobs within five years, possibly driving unemployment rates to 10-20%.

However, Gen Z appears largely unfazed, with 51% recognizing generative AI as a coworker or friend, compared to lower acceptance levels among older generations. They are embracing AI in more personal contexts, utilizing it not just for basic tasks but for life guidance. Despite warnings about job loss, the tech industry sees AI as an essential tool for innovation, suggesting that adaptation to AI is crucial for future success.
