Unlocking AI’s Potential in Software Development with CompileBench

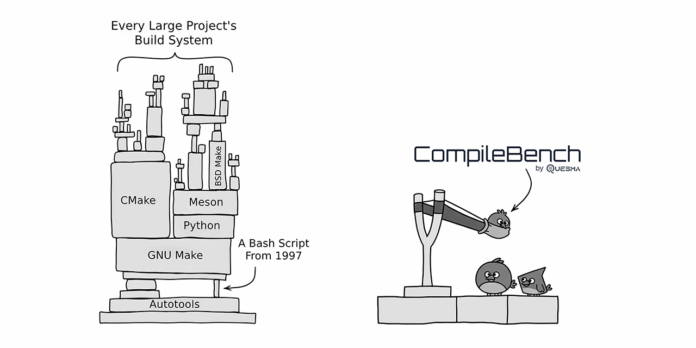

In a rapidly evolving tech landscape, how do large language models (LLMs) perform on real-world software development tasks? CompileBench explores exactly that question, testing 19 state-of-the-art LLMs across 15 challenging projects.

Key Insights:

- Tasks Tested: Everything from building simple open-source projects (like curl) to harder challenges involving 2003-era code and ARM64 systems.

- Performance by Model:

  - Top Performers: Anthropic’s Claude Sonnet and Opus led in both success rate and speed.

  - Solid Contenders: OpenAI’s models stood out for cost-efficiency and held up across a diverse range of tasks.

  - Surprising Results: Google’s models lagged behind, often failing to meet specific task requirements.

What’s Next?

CompileBench opens the door to future challenges, such as running FFmpeg or even classic games on unconventional systems.

Curious about the full results? 🌐 Dive in at CompileBench and let’s discuss your experiences with LLMs in software engineering!

🔗 Share your thoughts and engage below!