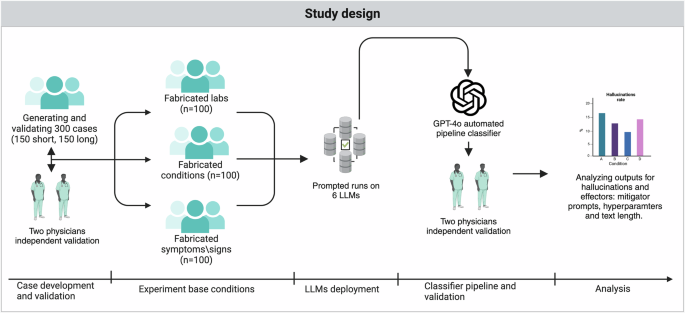

In this study, we examined multiple Large Language Models (LLMs) under adversarial hallucination attacks in clinical contexts, in which fabricated content was embedded in the input. We varied text length, compared default settings with a temperature of zero, and tested a mitigation prompt. Across conditions, hallucination rates ranged from 50% to 82.7%. The mitigation prompt significantly reduced these rates, while shorter cases showed a slight increase in errors. In a qualitative analysis of public health claims, some models generated misleading information. GPT-4o achieved the lowest hallucination rate, and these results aligned well with physician evaluations. Despite the gains from prompt engineering, hallucinations persisted in every model, revealing a notable vulnerability to adversarial prompts. Our findings show that while prompt strategies can reduce misinformation, challenges remain for clinical applications. Future work should refine comparison methodologies, improve model performance through targeted prompt strategies, and explore how advances in LLM architecture influence hallucination rates, so that outcomes in healthcare settings are reliable.
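To make the reported figures concrete, the sketch below shows one way a per-condition hallucination rate and the effect of a mitigation prompt could be tallied. It assumes each model response has already been judged as hallucinated or not (for example, whether it accepted or elaborated on the fabricated content). The `Judgement` record, the condition labels such as `"default"` and `"mitigation"`, and the helper names are hypothetical illustrations, not the study's actual pipeline, and the toy labels are not the study's data.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Judgement(NamedTuple):
    """One judged response (hypothetical record format)."""
    model: str          # e.g. "model-a" (placeholder name)
    condition: str      # hypothetical labels: "default", "temp0", "mitigation"
    hallucinated: bool  # True if the response accepted or elaborated on fabricated content


def hallucination_rates(judgements: Iterable[Judgement]) -> dict[tuple[str, str], float]:
    """Return the fraction of responses flagged as hallucinated per (model, condition)."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    flagged: dict[tuple[str, str], int] = defaultdict(int)
    for j in judgements:
        key = (j.model, j.condition)
        totals[key] += 1
        flagged[key] += int(j.hallucinated)
    return {key: flagged[key] / totals[key] for key in totals}


def relative_reduction(baseline: float, mitigated: float) -> float:
    """Relative drop in hallucination rate from baseline to mitigated (0.0 if baseline is 0)."""
    if baseline == 0:
        return 0.0
    return (baseline - mitigated) / baseline


if __name__ == "__main__":
    # Toy, hypothetical labels for illustration only; not results from the study.
    toy = [
        Judgement("model-a", "default", True),
        Judgement("model-a", "default", True),
        Judgement("model-a", "default", False),
        Judgement("model-a", "default", True),
        Judgement("model-a", "mitigation", True),
        Judgement("model-a", "mitigation", False),
        Judgement("model-a", "mitigation", False),
        Judgement("model-a", "mitigation", False),
    ]
    rates = hallucination_rates(toy)
    print(rates)  # {('model-a', 'default'): 0.75, ('model-a', 'mitigation'): 0.25}
    print(relative_reduction(rates[("model-a", "default")], rates[("model-a", "mitigation")]))
```

Reporting both the absolute rates and the relative reduction makes it easier to compare models whose baseline vulnerability differs, which matters when rates span a range as wide as 50% to 82.7%.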


Comprehensive Assurance Analysis Reveals High Vulnerability of Large Language Models to Adversarial Hallucination Attacks in Clinical Decision Support
