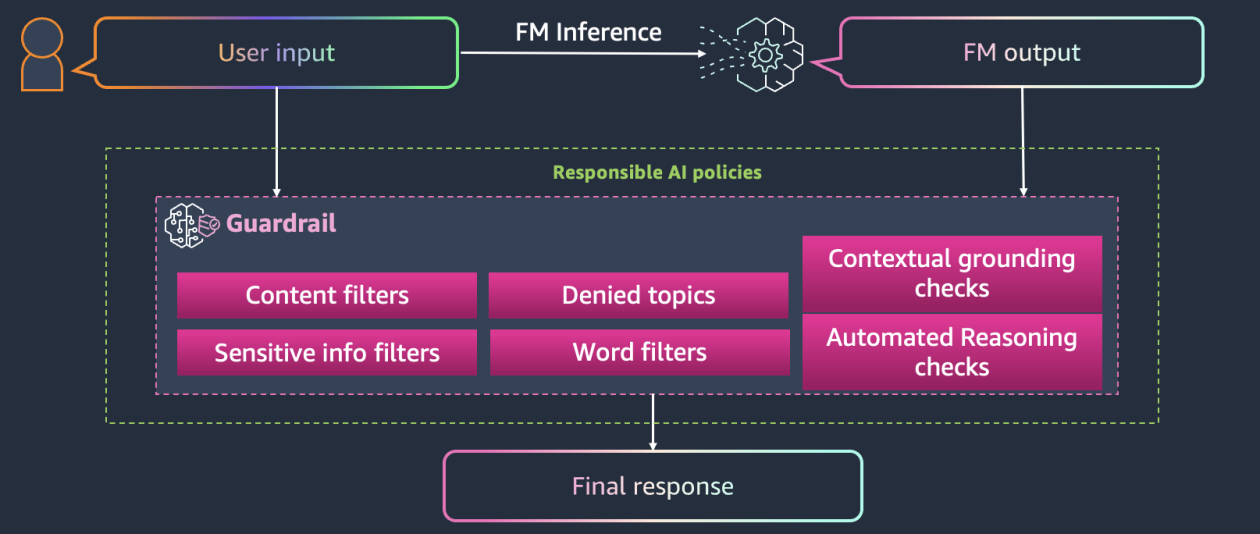

Organizations adopting generative AI face significant challenges, notably keeping their applications aligned with security safeguards. Amazon Bedrock Guardrails addresses risks such as harmful content generation and sensitive data exposure by applying multiple layers of protection. It has proven effective for companies like MAPFRE, KONE, and Fiserv, blocking up to 88% of undesirable content and filtering over 75% of hallucinated responses.

The article shows how to apply these guardrails to a healthcare insurance AI assistant so that it operates securely while improving user trust and experience. Key features include content filters that cover multimodal content, denied topics (for example, medical diagnoses), word filters, sensitive information filters, and Automated Reasoning checks, which detect inaccuracies in model responses. Developers can integrate these safeguards through several APIs, both for models hosted on Amazon Bedrock and for third-party models. The post demonstrates the effectiveness of these safeguards and walks through the steps to configure and test them in a practical application.
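To make the API integration concrete, here is a minimal sketch of screening text with a guardrail independently of any model call, using the `ApplyGuardrail` operation of the Bedrock runtime client. The helper names `build_guardrail_request` and `check_text`, and the guardrail ID and version shown in the usage note, are hypothetical and not taken from the article; the guardrail itself is assumed to already exist in your account.

```python
from typing import Any, Dict


def build_guardrail_request(
    text: str, guardrail_id: str, guardrail_version: str, source: str = "INPUT"
) -> Dict[str, Any]:
    """Build keyword arguments for the Bedrock runtime ApplyGuardrail call.

    source is "INPUT" for user prompts and "OUTPUT" for model responses.
    """
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": guardrail_version,
        "source": source,
        "content": [{"text": {"text": text}}],
    }


def check_text(
    client: Any,
    text: str,
    guardrail_id: str,
    guardrail_version: str,
    source: str = "INPUT",
) -> Dict[str, Any]:
    """Run text through a guardrail and report whether it intervened.

    client is expected to be a boto3 "bedrock-runtime" client, or any
    object that exposes a compatible apply_guardrail method.
    """
    request = build_guardrail_request(text, guardrail_id, guardrail_version, source)
    response = client.apply_guardrail(**request)

    blocked = response.get("action") == "GUARDRAIL_INTERVENED"
    outputs = response.get("outputs", [])
    # When the guardrail intervenes, outputs carries the blocked or masked
    # replacement text; otherwise the original text passes through unchanged.
    message = outputs[0]["text"] if blocked and outputs else text
    return {"blocked": blocked, "text": message}
```

With AWS credentials configured, a call might look like `check_text(boto3.client("bedrock-runtime"), "Do I have diabetes?", "your-guardrail-id", "1")`, where the ID and version are placeholders. Because the guardrail is applied separately from model invocation, the same helper can screen prompts and responses for a model hosted outside Amazon Bedrock.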
