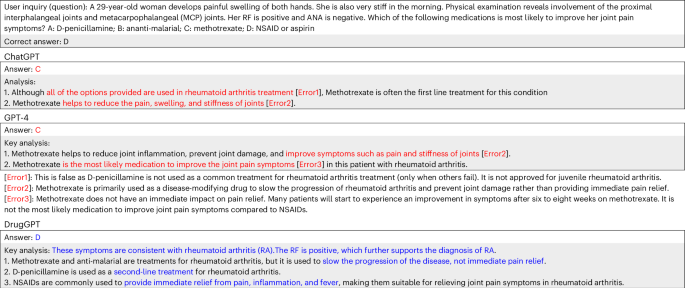

DrugGPT is an ensemble of three general-purpose large language models (LLMs) adapted to the medical domain. Because training an LLM from scratch is costly in compute and energy, the work adapts existing pre-trained models such as GPT and LLaMA through fine-tuning and prompting instead, with the aim of answering medical inquiries accurately and with supporting evidence. The three component models, IA-LLM, KA-LLM, and EG-LLM, are driven by a structured prompting strategy that combines few-shot learning, chain-of-thought (CoT) prompting, knowledge-consistency prompting, and evidence-traceable prompting. A medical knowledge graph (DSDG) captures the relationships between drugs, diseases, and symptoms and is used to make knowledge extraction more accurate. The work relies on public datasets and is described as preserving patient confidentiality. Overall, DrugGPT is presented as a way to deliver reliable medical information by adapting existing LLMs rather than training new ones.
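
The flow described above (analyse the inquiry, pull related facts from a drug-disease-symptom graph, then answer with traceable evidence) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the function names, the toy triples, the prompt wording, and the role assigned to each stage are hypothetical and are not taken from DrugGPT itself. In particular, the knowledge step here is a plain graph lookup rather than a call to KA-LLM, and the "LLMs" are ordinary callables that the caller supplies.

```python
from typing import Callable, List, Tuple

Triple = Tuple[str, str, str]          # (subject, relation, object)
LLM = Callable[[str], str]             # any function mapping a prompt to text

# Toy stand-in for a drug-disease-symptom graph. Placeholder names only,
# not medical data and not the DSDG from the source.
TOY_GRAPH: List[Triple] = [
    ("drug_a", "treats", "disease_x"),
    ("disease_x", "has_symptom", "symptom_1"),
    ("drug_b", "treats", "disease_y"),
]


def build_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt that ends with a chain-of-thought cue."""
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{instruction}\n{shots}\nQ: {query}\nA: Let's think step by step."


def analyse_inquiry(llm: LLM, query: str) -> List[str]:
    """Stage 1 (assumed role): ask a model which entities the query mentions."""
    prompt = build_prompt(
        "List the entities mentioned, comma-separated.",
        [("Does drug_b treat disease_y?", "drug_b, disease_y")],
        query,
    )
    return [e.strip() for e in llm(prompt).split(",") if e.strip()]


def acquire_knowledge(entities: List[str], graph: List[Triple]) -> List[Triple]:
    """Stage 2 (simplified): keep triples that touch any extracted entity."""
    wanted = set(entities)
    return [t for t in graph if t[0] in wanted or t[2] in wanted]


def generate_answer(llm: LLM, query: str, evidence: List[Triple]) -> str:
    """Stage 3 (assumed role): answer from numbered facts so claims are traceable."""
    facts = "\n".join(f"[{i}] {s} {r} {o}" for i, (s, r, o) in enumerate(evidence, 1))
    prompt = f"Answer using only the numbered facts and cite them.\n{facts}\nQ: {query}\nA:"
    return llm(prompt)


def answer(query: str, ia_llm: LLM, eg_llm: LLM,
           graph: List[Triple] = TOY_GRAPH) -> Tuple[str, List[Triple]]:
    """Run the three stages and return the answer with the evidence it saw."""
    entities = analyse_inquiry(ia_llm, query)
    evidence = acquire_knowledge(entities, graph)
    return generate_answer(eg_llm, query, evidence), evidence


if __name__ == "__main__":
    # Stub models so the sketch runs without any external service.
    text, used = answer(
        "What does drug_a treat?",
        ia_llm=lambda prompt: "drug_a, disease_x",
        eg_llm=lambda prompt: "drug_a treats disease_x [1]",
    )
    print(text)
    print(used)
```

Returning the evidence alongside the answer is a deliberate choice in this sketch: it lets a caller check that every cited fact actually came from the graph, which is the general idea behind evidence-traceable prompting.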
