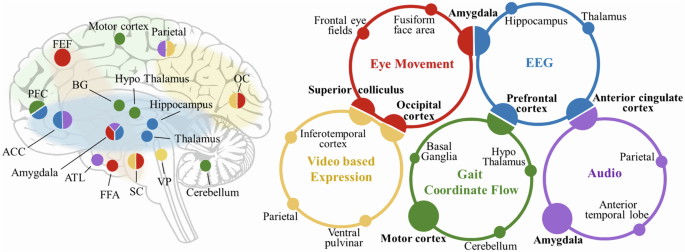

This systematic review surveys recent advances in depression detection across several modalities, including EEG, facial expressions, and speech analysis. The studies it covers suggest that multimodal approaches, which combine visual, auditory, and physiological signals, improve diagnostic accuracy over single-modality methods. For instance, systems built on deep learning frameworks such as AudioBERT and graph neural networks show promise for automated screening of major depressive disorder. EEG-derived biomarkers, processed through feature extraction and machine learning models, can help distinguish depressed individuals. Social media analytics, in turn, reveal relationships between online behavior and depression symptoms. Taken together, the literature points to the potential of convolutional networks and attention mechanisms for refining mental health assessments, and to multimodal data as a way to make depression diagnostics more precise and reliable. Clinical applicability and longitudinal monitoring remain open challenges for future studies.

Source: Enhancing Depression Screening Through AI-Driven Multi-Modal Information: A Systematic Review and Meta-Analysis
