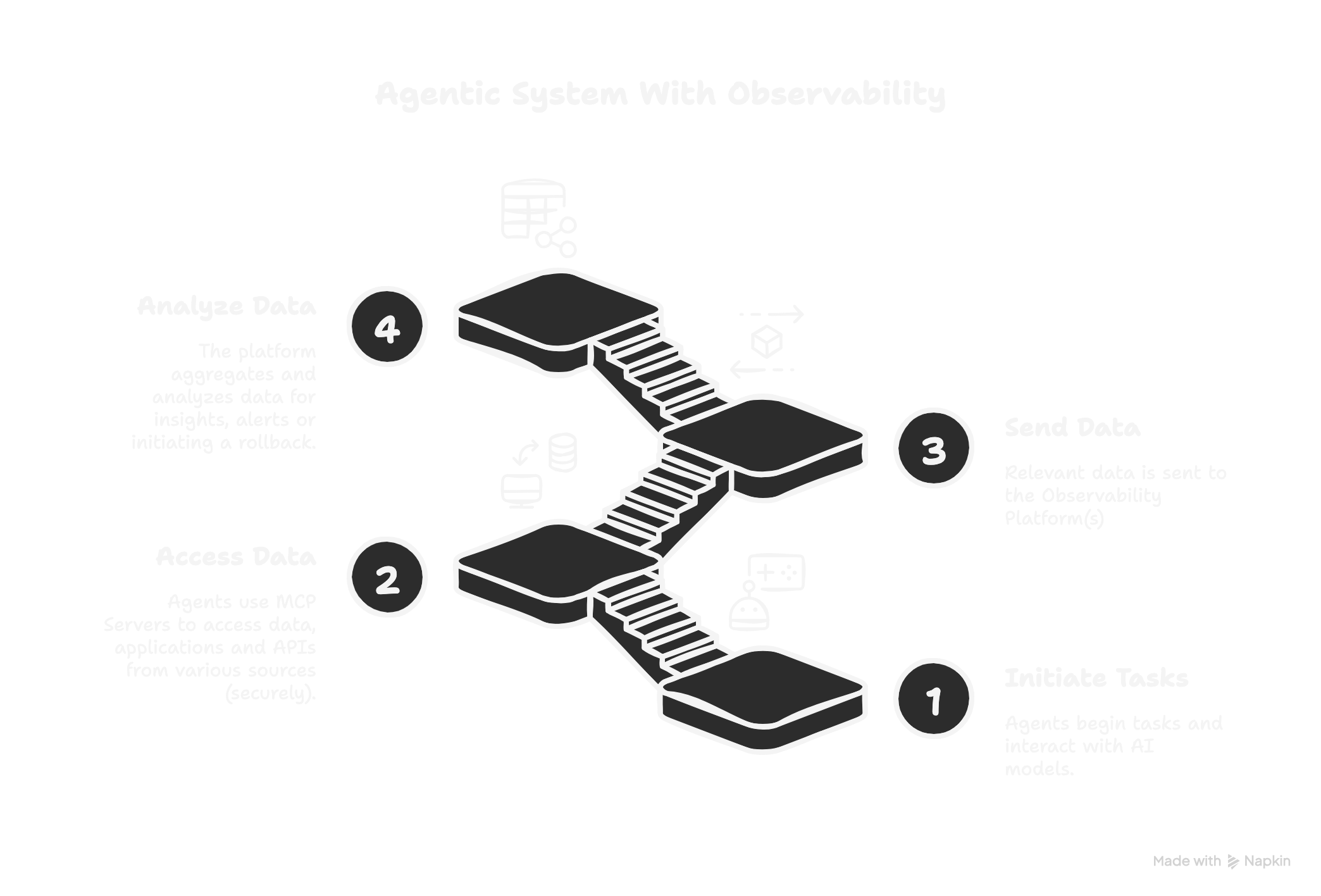

As teams rush to develop AI agents, they often overlook the foundations those agents need to operate safely and effectively. An AI agent is not merely a function that invokes a language model; it is a complex system that needs solid groundwork in observability, evaluation, and rollout control to prevent degradation or unintended harm in production. Building robust agents calls for several key practices:

- Eval-First Pipeline: Continuous evaluation and structured logging ensure that agent behaviors evolve responsibly.
- Advanced Observability: Track prompts, contexts, and user feedback to preemptively address issues.
- Permission-Aware Rollouts: Use gradual deployment strategies, like canary and blue/green deployments, to manage elevated access securely.
- CI/CD Guardrails: Fast iteration and safety checks must be integrated into the development pipeline.
- Zero-Trust Approach: Treat all external APIs and tools as potentially vulnerable.
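
To make a few of these practices concrete, here is a minimal Python sketch of how structured logging, an eval gate, and canary routing might fit together. It is an illustration under stated assumptions, not a description of any particular product: the function names (`log_event`, `in_canary`, `passes_eval_gate`, `choose_version`), the 0.9 threshold, and the version labels `"candidate"` and `"stable"` are all hypothetical.

```python
import hashlib
import json
import time


def log_event(agent_version: str, prompt: str, output: str, score: float) -> str:
    """Serialize one agent interaction as a JSON line (hypothetical schema)."""
    record = {
        "ts": time.time(),
        "agent_version": agent_version,
        "prompt": prompt,
        "output": output,
        "eval_score": score,
    }
    return json.dumps(record, sort_keys=True)


def in_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically assign a user to the canary cohort by hashing the ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return bucket < canary_percent


def passes_eval_gate(scores: list[float], threshold: float = 0.9) -> bool:
    """Return True when the mean eval score meets the (assumed) threshold."""
    if not scores:
        return False  # no eval results means no promotion
    return sum(scores) / len(scores) >= threshold


def choose_version(user_id: str, canary_percent: int, candidate_scores: list[float]) -> str:
    """Route to the candidate only if it passed evals and the user is in the canary."""
    if passes_eval_gate(candidate_scores) and in_canary(user_id, canary_percent):
        return "candidate"
    return "stable"
```

Hashing the user ID keeps each user on the same version across requests, which makes feedback easier to attribute. Because the eval gate runs before the canary check, a candidate with poor or missing eval results never reaches users, whatever the canary percentage is set to.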

StarOps aims to provide the infrastructure necessary for building and maintaining AI agents effectively.