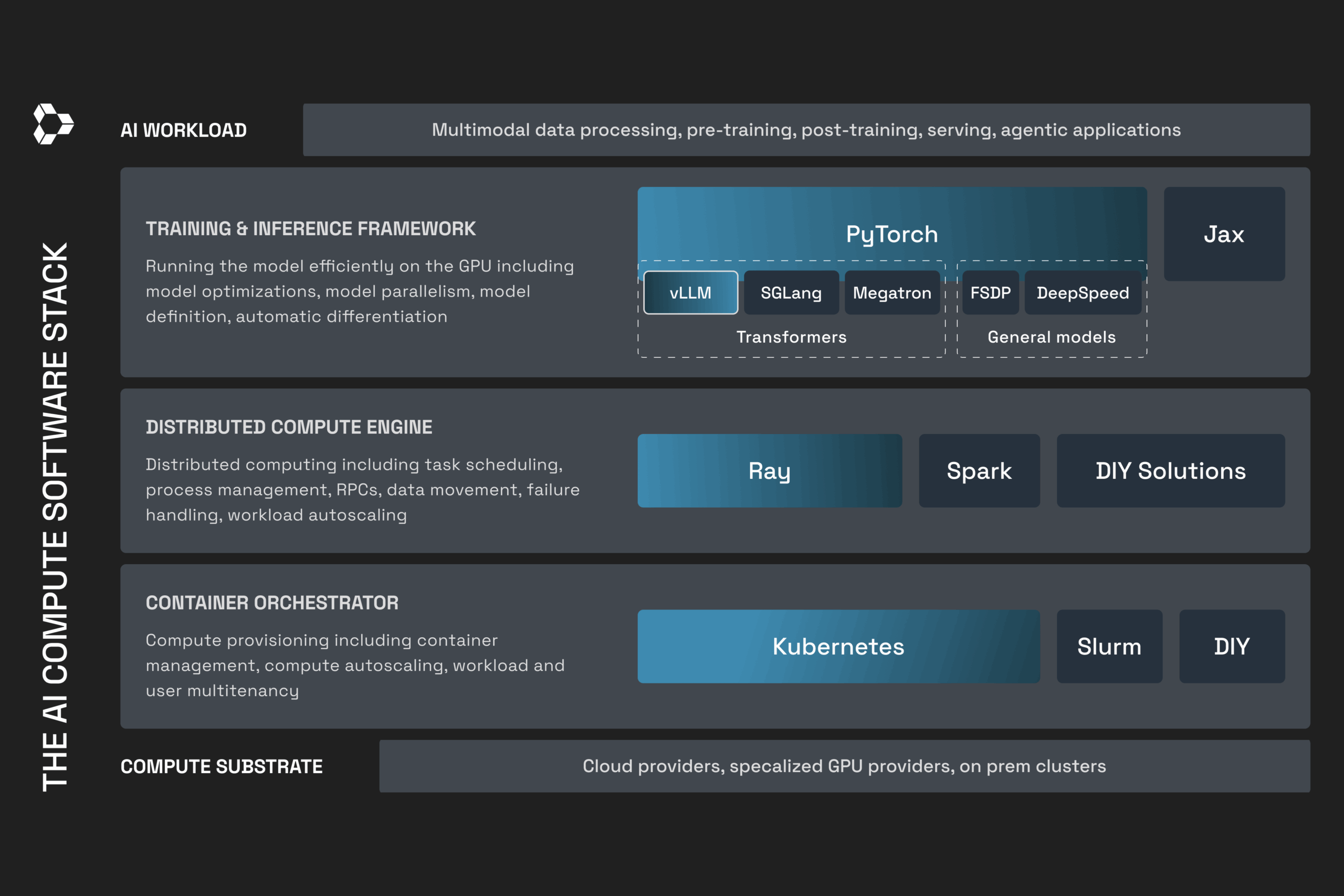

As AI workloads have evolved from classical machine learning to generative AI, the software stack behind them has grown complex. Industry standards tend to emerge over time: Kubernetes has become the standard for container orchestration, and PyTorch dominates deep learning. A common AI compute stack now combines Kubernetes, Ray, PyTorch, and vLLM, with each layer filling a distinct role: PyTorch and vLLM as the training and inference frameworks, Ray as the distributed compute engine, and Kubernetes as the container orchestrator. The key workloads are model training, model serving, and batch inference, all of which demand massive scale and fast iteration. Case studies from Pinterest, Uber, and Roblox show this stack in production, each with its own performance and cost optimizations. Pinterest streamlined data processing and training and reported notable reductions in job runtime and cost. Uber optimized both training and inference on its Michelangelo platform as it evolved, and Roblox improved its LLM operations. Emerging post-training frameworks also integrate training and inference closely, using Ray and PyTorch across a range of deployments.
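To make the batch inference workload a little more concrete, here is a minimal sketch of the pattern a distributed compute engine coordinates: split the inputs into shards, run each shard on a worker, and reassemble the outputs in order. It deliberately uses only the Python standard library, so `ThreadPoolExecutor` stands in for the compute engine and the hypothetical `toy_model` function stands in for a real model call. None of these names come from the original post, and this is not how Ray, PyTorch, or vLLM are actually invoked.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model forward pass or generation call.
    return prompt.upper()


def make_shards(items: List[str], num_shards: int) -> List[List[str]]:
    # Split the inputs into contiguous chunks, at most num_shards of them.
    size = max(1, -(-len(items) // num_shards))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_shard(prompts: List[str]) -> List[str]:
    # Work done by a single worker: run the model over its shard.
    return [toy_model(p) for p in prompts]


def batch_infer(prompts: List[str], num_workers: int = 2) -> List[str]:
    # Fan the shards out to a pool of workers, then flatten the results.
    # pool.map preserves shard order, so outputs line up with the inputs.
    shards = make_shards(prompts, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        shard_outputs = list(pool.map(run_shard, shards))
    return [out for shard in shard_outputs for out in shard]


if __name__ == "__main__":
    print(batch_infer(["a", "b", "c", "d", "e"], num_workers=2))
```

In a real deployment the thread pool would presumably be replaced by workers scheduled across a cluster, and the model call by an actual inference engine; the sharding and reassembly logic is the part this sketch is meant to illustrate.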
