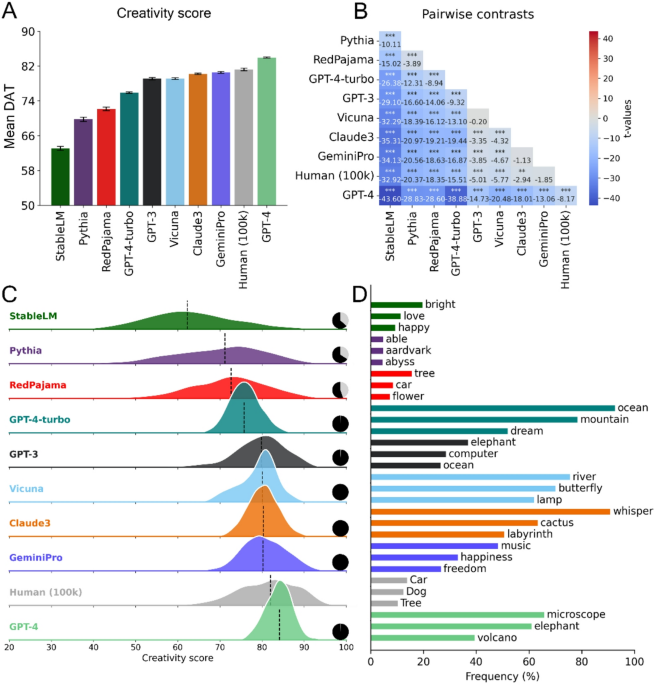

The study examines the creative capabilities of large language models (LLMs) such as GPT-3.5 and GPT-4, focusing on their performance in divergent thinking tasks, in particular the Divergent Association Task (DAT). Results indicate that LLMs generate more diverse outputs when explicitly instructed to produce lists of maximally different words, which highlights their sensitivity to task prompts. Hyperparameters also matter: the temperature setting in particular significantly affects creativity scores by altering semantic diversity and reducing word repetition.
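To make the DAT idea concrete, here is a minimal sketch of a DAT-style score, assuming the commonly described formulation: the mean pairwise cosine distance between word embeddings, scaled by 100. The function names and the three-dimensional `TOY_EMBEDDINGS` table are hypothetical and exist only for illustration; a real evaluation would use pretrained word vectors, and the study's exact pipeline may differ.

```python
import itertools
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity for two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def dat_style_score(words, embeddings):
    """Mean pairwise cosine distance between the words' vectors, times 100.

    Assumption: this mirrors the usual description of DAT scoring; it is
    not taken from the study's code.
    """
    vectors = [embeddings[word] for word in words]
    pairs = list(itertools.combinations(vectors, 2))
    if not pairs:
        raise ValueError("need at least two words to score")
    return 100.0 * sum(cosine_distance(u, v) for u, v in pairs) / len(pairs)


# Hypothetical toy vectors, NOT real embeddings: "cat" and "dog" point in
# nearly the same direction, the other words are orthogonal to them.
TOY_EMBEDDINGS = {
    "cat": (1.0, 0.0, 0.0),
    "dog": (0.9, 0.1, 0.0),
    "galaxy": (0.0, 1.0, 0.0),
    "justice": (0.0, 0.0, 1.0),
}

similar = dat_style_score(["cat", "dog"], TOY_EMBEDDINGS)
diverse = dat_style_score(["cat", "galaxy", "justice"], TOY_EMBEDDINGS)
print(f"similar words: {similar:.2f}, diverse words: {diverse:.2f}")
```

Under these toy vectors the semantically close pair scores near zero while the unrelated words score much higher, which is the behavior a prompt asking for maximally different words is trying to elicit.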

The research also compares LLM outputs with human creativity in generating poetry and narratives, revealing that while LLMs can achieve notable scores, human performance remains superior in some contexts. The study advocates combining diverse metrics, such as Divergent Semantic Integration (DSI) and Lempel-Ziv (LZ) complexity, to assess LLM creativity more comprehensively. Finally, it emphasizes the potential for collaboration between humans and machines in creative work and offers insights into future advances in both fields.
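As an illustration of the LZ complexity metric mentioned above, the following sketch counts the phrases in a Lempel-Ziv (1976) style parsing of a string: more repetitive text yields fewer phrases. Applying it at the character level, and the function name `lz76_complexity`, are assumptions made for this example; the study may tokenize or normalize differently.

```python
def lz76_complexity(text):
    """Count phrases in an LZ76-style parsing of a string.

    Each phrase is the shortest substring starting at the current position
    that has not already occurred earlier in the text (overlap with the
    phrase itself is allowed, as in the original LZ76 definition).
    """
    n = len(text)
    position = 0
    phrases = 0
    while position < n:
        length = 1
        # Grow the candidate while it can still be copied from earlier text.
        while (position + length <= n
               and text[position:position + length] in text[:position + length - 1]):
            length += 1
        phrases += 1
        position += length
    return phrases


# Illustrative strings only, not data from the study.
repetitive = "the cat the cat the cat the cat"
varied = "storms unravel quiet harbors at dusk"
print(lz76_complexity(repetitive), lz76_complexity(varied))
```

Raw phrase counts grow with text length, so comparing texts of different sizes would need some form of normalization; that step is left out of this sketch.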
