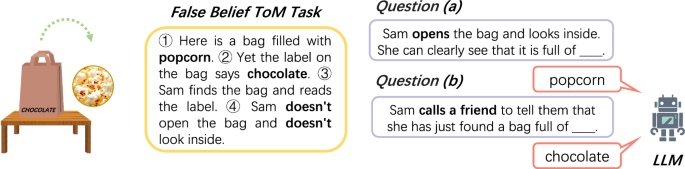

Theory of Mind (ToM) tasks evaluate how well large language models (LLMs) understand the mental states of others. Central to this assessment are false-belief (FB) tasks, in particular the unexpected contents and unexpected transfer tasks. In the unexpected contents task, the LLM must distinguish the actual contents of a deceptively labeled container from what its packaging suggests, and infer the false belief of someone who has only seen the packaging. In the unexpected transfer task, the LLM must track a character's outdated belief about an object's location after the object has been moved without that character's knowledge.

Using Hessian-based sensitivity analysis, our study identified a set of "ToM-sensitive parameters" that are crucial for this reasoning. Perturbing these parameters severely degraded the performance of LLMs that use rotary position embeddings (RoPE), disrupting their attention mechanisms and their ability to localize information in context. Perturbing as little as 0.001% of the identified parameters was enough to significantly degrade ToM capabilities, which points to a distinct link with the positional encoding mechanism. Non-RoPE models proved resilient to these perturbations, whereas RoPE-based models struggled to maintain coherent language understanding under the same conditions. These results suggest that the positional encoding architecture plays a crucial role in ToM reasoning in LLMs.
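The idea of scoring parameters by curvature and perturbing only the top sliver can be illustrated on a toy model. This is a minimal sketch under stated assumptions, not the study's procedure: it uses a hypothetical quadratic loss in place of an LLM's ToM loss, estimates the Hessian diagonal by central finite differences, scores each parameter with an Optimal Brain Damage-style saliency (0.5 · H_ii · θ_i²), keeps the highest-scoring fraction as "sensitive", and perturbs those by zeroing them. The names `make_quadratic_loss`, `diag_hessian`, `saliency`, `top_fraction`, and `zero_out` are hypothetical, and the study's actual scoring rule and perturbation scheme may differ.

```python
import math
from typing import Callable, List

Loss = Callable[[List[float]], float]


def make_quadratic_loss(curvature: List[float], optimum: List[float]) -> Loss:
    """Hypothetical toy loss: sum_i c_i * (theta_i - w_i)^2, minimized at `optimum`."""
    def loss(theta: List[float]) -> float:
        return sum(c * (t - w) ** 2 for c, t, w in zip(curvature, theta, optimum))
    return loss


def diag_hessian(loss: Loss, theta: List[float], eps: float = 1e-3) -> List[float]:
    """Central finite-difference estimate of each diagonal entry d^2 L / d theta_i^2."""
    base = loss(theta)
    diag = []
    for i in range(len(theta)):
        plus = list(theta)
        plus[i] += eps
        minus = list(theta)
        minus[i] -= eps
        diag.append((loss(plus) - 2.0 * base + loss(minus)) / (eps * eps))
    return diag


def saliency(loss: Loss, theta: List[float], eps: float = 1e-3) -> List[float]:
    """OBD-style second-order estimate of the loss increase if theta_i is set to 0."""
    h = diag_hessian(loss, theta, eps)
    return [0.5 * hii * t * t for hii, t in zip(h, theta)]


def top_fraction(scores: List[float], fraction: float) -> List[int]:
    """Indices of the highest-scoring parameters; always keeps at least one."""
    k = max(1, math.ceil(fraction * len(scores)))
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def zero_out(theta: List[float], indices: List[int]) -> List[float]:
    """Perturb the selected parameters by zeroing them (one possible perturbation)."""
    out = list(theta)
    for i in indices:
        out[i] = 0.0
    return out


if __name__ == "__main__":
    curvature = [0.1, 5.0, 0.2, 0.1, 3.0, 0.1, 0.1, 0.1, 0.1, 0.1]
    trained = [1.0] * 10  # pretend these are the trained weights
    loss = make_quadratic_loss(curvature, trained)

    sensitive = top_fraction(saliency(loss, trained), 0.2)
    print(sorted(sensitive))                                 # [1, 4]
    print(round(loss(zero_out(trained, sensitive)), 3))      # 8.0
    print(round(loss(zero_out(trained, [0, 2])), 3))         # 0.3
```

Zeroing the two high-curvature parameters raises the loss far more than zeroing two arbitrary ones, which is the intuition behind targeting a tiny, carefully chosen subset. For a real LLM, finite differences over every weight would be far too expensive; a practical analysis would more plausibly use an approximation such as a Hutchinson-style estimator or the empirical Fisher, with `fraction` set to something like 1e-5 (0.001%).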
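Because the findings hinge on RoPE, a short sketch of how rotary position embeddings encode position may help. Assuming the interleaved-pair formulation from the RoFormer paper with the common base of 10000, each consecutive pair of query/key dimensions is rotated by an angle proportional to the token's position, so the dot product between a rotated query and a rotated key depends only on their relative offset. The helpers `rope` and `dot` are illustrative and hypothetical, not any library's API, and the sketch does not reproduce the study's analysis.

```python
import math
from typing import List


def rope(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotate each adjacent pair (x[2i], x[2i+1]) by angle pos * base^(-2i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out = [0.0] * d
    for i in range(d // 2):
        angle = pos * base ** (-2.0 * i / d)
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s      # standard 2-D rotation of the pair
        out[2 * i + 1] = a * s + b * c
    return out


def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


if __name__ == "__main__":
    q = [0.3, -1.2, 0.8, 0.5]
    k = [1.0, 0.4, -0.7, 0.2]
    # Same 4-token offset at different absolute positions gives the same score.
    print(abs(dot(rope(q, 3), rope(k, 7)) - dot(rope(q, 10), rope(k, 14))) < 1e-9)  # True
```

Many open-source implementations pair dimension i with dimension i + d/2 instead of adjacent dimensions; the relative-position property holds either way, since each pair is still rotated by a position-proportional angle.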
