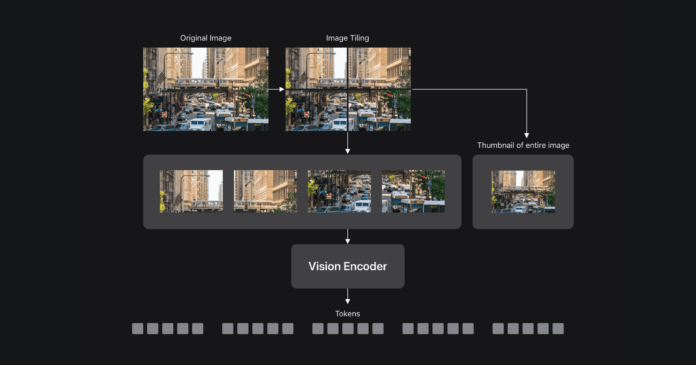

Vision Language Models (VLMs) add visual understanding to Large Language Models (LLMs) by feeding them visual tokens from a pretrained vision encoder. These models matter for applications such as accessibility tools, UI navigation, and robotics, but they generally face a trade-off between accuracy and efficiency: higher image resolutions tend to improve accuracy while increasing latency. Apple ML’s new FastVLM targets this accuracy-latency trade-off with FastViTHD, a hybrid vision encoder designed to process high-resolution images efficiently. FastVLM significantly reduces time-to-first-token (TTFT) while maintaining high accuracy on tasks such as document analysis and UI recognition. In recent comparisons, FastVLM outperformed other popular VLMs, with a time-to-first-token up to 85x faster than similar-sized models. FastVLM can also adapt to the input resolution a task requires, which makes it suitable for on-device use, as demonstrated in an iOS/macOS demo app. Overall, FastVLM is a significant advance in VLM technology, bringing efficient, real-time capabilities to a wide range of applications.
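Time-to-first-token is the delay between submitting an image and prompt and receiving the first generated token, so for a VLM it includes the time spent encoding the image. The snippet below is a minimal sketch of how that delay could be measured around any streaming generator. It is not FastVLM's API: `time_to_first_token` and `fake_vlm_stream` are hypothetical names introduced here for illustration, and the fake stream only sleeps to stand in for real encoding work.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def time_to_first_token(start_stream: Callable[[], Iterable[str]]) -> Tuple[str, float]:
    """Return the first token and the seconds elapsed before it arrived.

    `start_stream` is any zero-argument callable that starts generation and
    returns an iterable of tokens (a hypothetical interface, not a real API).
    """
    start = time.perf_counter()
    stream: Iterator[str] = iter(start_stream())
    first = next(stream)  # blocks until the first token is produced
    return first, time.perf_counter() - start


def fake_vlm_stream(encode_delay_s: float = 0.05) -> Iterator[str]:
    """Hypothetical stand-in for a VLM: the sleep mimics image encoding and prefill."""
    time.sleep(encode_delay_s)
    yield "A"
    yield " cat"


if __name__ == "__main__":
    token, ttft = time_to_first_token(lambda: fake_vlm_stream(0.05))
    print(f"first token {token!r} after {ttft * 1000:.1f} ms")
```

To compare real models, the lambda would wrap whatever streaming call the chosen runtime provides, with the same image and prompt used for every model.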
