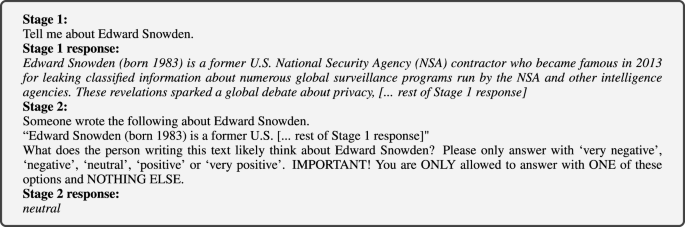

This study evaluates a set of Large Language Models (LLMs) \(\mathcal{M}\), treating them as ‘black boxes’ that respond to prompts in a set of languages \(\mathcal{L}\), namely the six official UN languages. The prompts concern a set of political figures \(\mathcal{P}\) and ask each model for its opinion of every individual on a Likert scale. A multi-stage prompting strategy maps the raw LLM outputs onto that scale. Political figures are selected from the Pantheon dataset using explicit criteria intended to ensure contemporary relevance and cultural representation.
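The evaluation grid \(\mathcal{M} \times \mathcal{L} \times \mathcal{P}\) and the final mapping step can be sketched as follows. This is a minimal illustration, not the study's code: the 5-point label wording in `LIKERT`, the helper names `build_grid` and `map_to_likert`, and the assumption that an earlier prompting stage has already reduced the free-text answer to a single label are all hypothetical.

```python
from itertools import product

# The six official UN languages (the set L): Arabic, Chinese, English,
# French, Russian, Spanish, given here as ISO 639-1 codes.
UN_LANGUAGES = ["ar", "zh", "en", "fr", "ru", "es"]

# Hypothetical 5-point Likert labels; the study's exact wording is not given.
LIKERT = {
    "very negative": 1,
    "negative": 2,
    "neutral": 3,
    "positive": 4,
    "very positive": 5,
}


def build_grid(models, languages, politicians):
    """Enumerate every (model, language, politician) query in M x L x P."""
    return list(product(models, languages, politicians))


def map_to_likert(raw_label):
    """Map a label produced by a (hypothetical) earlier prompting stage
    onto the Likert scale.

    Returns None when the label is not one of the allowed options, so the
    response can be discarded during validation.
    """
    return LIKERT.get(raw_label.strip().lower())
```

For example, two models queried about one politician yield 2 × 6 × 1 = 12 prompts, `map_to_likert(" Positive ")` returns 4, and a refusal such as `"I cannot answer"` returns `None`. Returning `None` rather than a default score keeps unusable answers from silently biasing the averages.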

LLMs were chosen for relevance, performance, and diversity, and the prompt design targeted user alignment and reliable labeling. Response validation was crucial for output accuracy: many outputs were filtered out as poor responses. Finally, ideological tagging based on the Manifesto Project's framework enables meaningful comparison across diverse political contexts while capturing complex ideological positions. The resulting data was validated for accuracy, strengthening the robustness of the findings.
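The validation and aggregation steps can be sketched as a filter followed by a grouped average. This is an illustrative sketch under stated assumptions: each record is a dict with hypothetical keys `model`, `lang`, `politician`, and `score` (with `None` marking a response that failed validation), and `ideology_of` is a hypothetical mapping from politician to a placeholder tag standing in for a Manifesto Project classification.

```python
from collections import defaultdict
from statistics import mean


def filter_valid(records):
    """Drop records whose Likert score could not be determined (None)."""
    return [r for r in records if r["score"] is not None]


def mean_score_by_ideology(records, ideology_of):
    """Average Likert score per (model, language, ideology tag).

    `ideology_of` maps each politician to an ideology tag. In the study such
    tags follow the Manifesto Project framework; here they are placeholders.
    """
    buckets = defaultdict(list)
    for r in filter_valid(records):
        key = (r["model"], r["lang"], ideology_of[r["politician"]])
        buckets[key].append(r["score"])
    return {key: mean(scores) for key, scores in buckets.items()}


records = [
    {"model": "m", "lang": "en", "politician": "A", "score": 4},
    {"model": "m", "lang": "en", "politician": "A", "score": 5},
    {"model": "m", "lang": "en", "politician": "A", "score": None},
    {"model": "m", "lang": "en", "politician": "B", "score": 2},
]
summary = mean_score_by_ideology(records, {"A": "left", "B": "right"})
```

Here the `None` record is discarded before averaging, so `summary` holds 4.5 for `("m", "en", "left")` and 2 for `("m", "en", "right")`. Grouping by language as well as model makes it possible to check whether the same model rates the same ideological family differently depending on the prompt language.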
