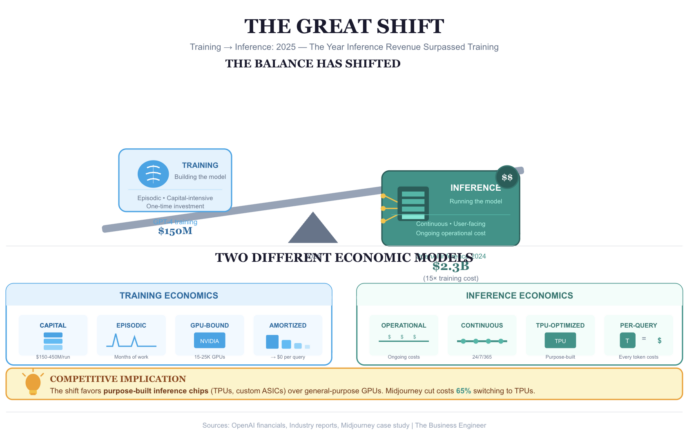

Midjourney’s move from GPUs to TPUs cut its inference costs by 65%, a sign of a broader shift in AI hardware away from general-purpose GPUs and toward purpose-built silicon such as TPUs. As inference revenue begins to outpace training revenue, the case for specialized hardware grows stronger.

GPUs have historically dominated AI training because their parallel architecture is well suited to the heavy computation that training on massive datasets requires. Inference, by contrast, rewards low latency and cost efficiency, which makes dedicated chips such as Google’s TPUs and Amazon’s Inferentia a better fit for the task.

This shift exposes potential vulnerabilities in NVIDIA’s GPU monopoly, since companies investing in custom silicon, such as Google, Amazon, and Apple, are positioning themselves for cost advantages. As a result, inference-centric business models are gaining traction, reshaping the narrative around the GPU supply shortage and underscoring the strategic importance of hardware in AI’s economics. Explore more in The Business Engineer’s analysis of AI hardware economics.
