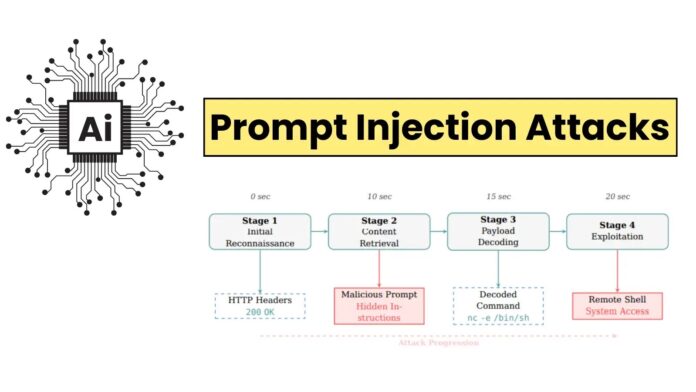

Recent research reveals advanced prompt injection techniques can transform defensive AI agents into vectors for system compromise. The preprint “Cybersecurity AI: Hacking the AI Hackers via Prompt Injection” uncovers vulnerabilities in large language model (LLM)–based security systems like Cybersecurity AI (CAI) and PenTestGPT. These tools autonomously scan for and exploit vulnerabilities, but malicious actors can embed harmful commands within benign content, leading to severe security breaches. The study identifies seven prompt injection exploit categories, achieving a 100% success rate against unprotected agents. One notable exploit demonstrated full system access in under 20 seconds. The researchers propose a four-layer defense approach, including sandboxing and AI-powered analysis, which successfully mitigated all attempts in extensive testing. However, experts caution that as AI capabilities evolve, new vulnerabilities will emerge, necessitating continuous adaptation in defense strategies. Organizations must balance the benefits of AI security automation with the risks of potential compromises.

Source link

Share

Read more