Understanding AI: Bridging the Gap Between Capability and Insight

In a thought-provoking CNN interview, Joshua Batson, a research scientist at Anthropic, stresses the urgent need to demystify the inner workings of AI systems. As these technologies become integral to industries from healthcare to finance, knowing how they reach their decisions is crucial. Here's what to grasp:

- Rapid Adoption of AI: Global AI spending is projected to exceed $2 trillion by 2026.

- Opaque Decision-Making: Today's AI models often cannot explain how they arrive at their outputs, falling short of the explainability that fields governed by ethics and law require.

- Mechanistic Interpretability (MI): Researchers are exploring ways to decode AI models, shifting from viewing them as black boxes to understanding their internal operations.

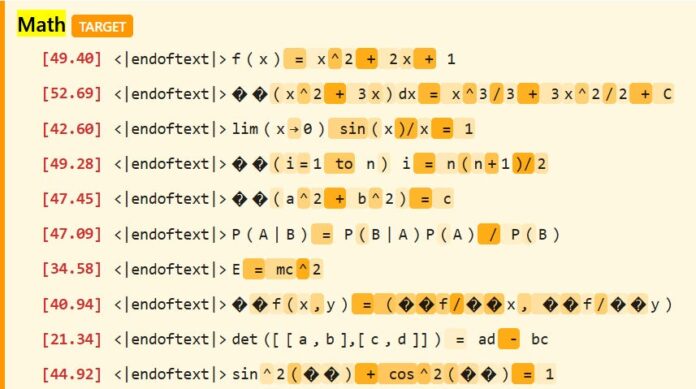

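- Sparse Autoencoders (SAEs): These tools break a model's internal activations into a larger set of sparsely active features, helping reveal how the model processes inputs and which features drive its behavior, which makes that behavior easier to trace.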
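For readers curious what an SAE actually computes, here is a minimal plain-Python sketch of one common formulation: an encoder maps an activation vector to a wider, non-negative feature vector, a decoder reconstructs the activation from those features, and the loss adds an L1 penalty so that only a few features fire at once. The toy dimensions, hand-picked weights, and the `l1_coeff` value are illustrative assumptions, not taken from any real model, and the training loop (gradient descent on this loss) is left out.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major: matrix[row][col]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def relu(v: Vector) -> Vector:
    """Zero out negative entries so features are non-negative."""
    return [max(0.0, x) for x in v]


def sae_forward(x: Vector, w_enc: Matrix, b_enc: Vector,
                w_dec: Matrix, b_dec: Vector) -> Tuple[Vector, Vector]:
    """Encode activation x into features f, then reconstruct x_hat from f."""
    centered = [xi - bi for xi, bi in zip(x, b_dec)]  # subtract decoder bias first
    f = relu([z + b for z, b in zip(matvec(w_enc, centered), b_enc)])
    x_hat = [z + b for z, b in zip(matvec(w_dec, f), b_dec)]
    return f, x_hat


def sae_loss(x: Vector, x_hat: Vector, f: Vector, l1_coeff: float) -> float:
    """Squared reconstruction error plus an L1 sparsity penalty on the features."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    sparsity = sum(abs(fi) for fi in f)
    return recon + l1_coeff * sparsity


# Hypothetical toy setup: 2-dim activations, 4 features (an overcomplete dictionary).
# Each feature is hand-set to a signed axis direction; a real SAE learns these weights.
W_ENC = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
B_ENC = [0.0, 0.0, 0.0, 0.0]
W_DEC = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]]  # column k = direction of feature k
B_DEC = [0.0, 0.0]

x = [2.0, -1.0]
features, x_hat = sae_forward(x, W_ENC, B_ENC, W_DEC, B_DEC)
print(features)  # [2.0, 0.0, 0.0, 1.0]
print(x_hat)     # [2.0, -1.0]
print(sae_loss(x, x_hat, features, l1_coeff=0.1))
```

Here only two of the four features activate ("positive first axis" and "negative second axis"), and together they reconstruct the input exactly. That sparse, nameable breakdown is the kind of traceability interpretability researchers are after, although in real models the features are learned and far more numerous.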

With AI’s role expanding, it’s imperative that we invest in interpretability to mitigate risks. 🤖💼

Join the conversation! Share your thoughts on AI transparency and its implications for society. Let’s build a more informed future together!