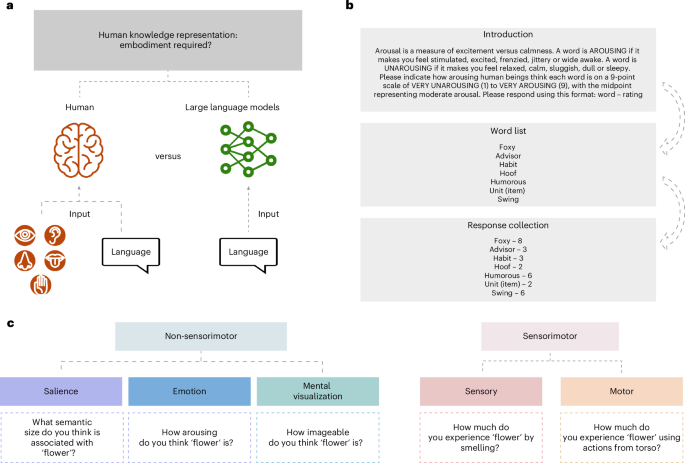

The study collected ratings from large language models (LLMs) and from human participants, following the ethical guidelines of the British Psychological Society and with the necessary approvals from Lancaster University. It draws on the Glasgow Norms and the Lancaster Sensorimotor Norms, two sets of psycholinguistic ratings for English words across a range of dimensions, including emotional and sensory ones. The Glasgow Norms, collected from 829 participants, provide ratings for 5,553 words on nine dimensions; the Lancaster Norms, collected from 3,500 participants, provide sensory and motor ratings for roughly 40,000 words.

The researchers tested several LLMs, including GPT-3.5, GPT-4, and models from Google, prompting each with the same standardized procedure so that their ratings are comparable. Human and model ratings were then compared using pairwise correlations and representational similarity analysis (RSA). The results support the validity of both norms and suggest that LLMs reflect collective human conceptual representations, more closely for non-sensorimotor dimensions than for sensorimotor ones. The statistical methods are intended to keep the analyses robust and to reduce potential biases.
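To make the two comparisons more concrete, here is a minimal sketch of how human and model ratings might be compared. It is not the study's code. It assumes that each rating set is a dictionary mapping a word to a list of per-dimension scores, that per-dimension agreement is measured with a Spearman correlation, and that each representational dissimilarity matrix (RDM) uses the Euclidean distance between words' rating vectors, with RSA computed as a Spearman correlation between the two RDMs' upper triangles. The function names and the toy ratings are hypothetical and are not data from the Glasgow or Lancaster Norms.

```python
from itertools import combinations
import math


def rank(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant input")
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(rank(x), rank(y))


def dimension_correlations(human, model, dims):
    """Hypothetical helper: human-model Spearman correlation per dimension."""
    words = sorted(human.keys() & model.keys())
    return {
        name: spearman([human[w][d] for w in words], [model[w][d] for w in words])
        for d, name in enumerate(dims)
    }


def rdm_upper(ratings, words):
    """Upper triangle of an RDM: Euclidean distance for every word pair."""
    return [math.dist(ratings[a], ratings[b]) for a, b in combinations(words, 2)]


def rsa(human, model):
    """Hypothetical helper: second-order similarity between human and model RDMs."""
    words = sorted(human.keys() & model.keys())
    return spearman(rdm_upper(human, words), rdm_upper(model, words))


if __name__ == "__main__":
    # Toy, made-up ratings on two illustrative dimensions.
    dims = ["valence", "arousal"]
    human = {"joy": [8.2, 6.1], "grief": [1.9, 5.0], "table": [5.0, 2.1], "storm": [3.1, 7.4]}
    model = {"joy": [8.5, 6.8], "grief": [1.5, 5.9], "table": [5.2, 1.8], "storm": [2.9, 6.9]}
    print(dimension_correlations(human, model, dims))
    print(rsa(human, model))
```

In this toy example the human and model rank orders match on both dimensions, so both per-dimension correlations come out at 1.0 up to floating-point rounding. The two measures answer different questions: the per-dimension correlation asks whether a model orders words the way people do on one scale, while RSA asks whether the overall geometry of word-to-word distances is similar, even if individual scales are shifted or rescaled.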

Large Language Models Lacking Grounding Retain Non-Sensorimotor Aspects of Human Concepts, but Struggle with Sensorimotor Features
