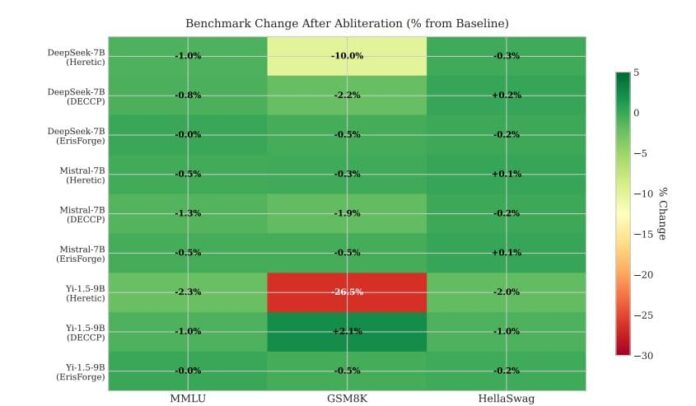

Improving large language models (LLMs) without compromising safety is a growing challenge. Richard J. Young and colleagues from the University of Nevada, Las Vegas, address it by investigating "abliteration" techniques, which selectively remove the safety constraints that make a model refuse harmful requests. They systematically evaluate four abliteration tools (Heretic, DECCP, ErisForge, and FailSpy) across 16 instruction-tuned models and find that the single-pass methods, ErisForge and DECCP, are effective at preserving critical capabilities such as mathematical reasoning. The study highlights the trade-off between suppressing unwanted refusals and maintaining overall model performance. It also indicates that refusal behavior is complex and encoded across multiple layers of a model, so the choice of abliteration method matters for the reliability of any evaluation that depends on it. The findings give researchers a basis for weighing safety against performance when preparing LLMs for deployment.

