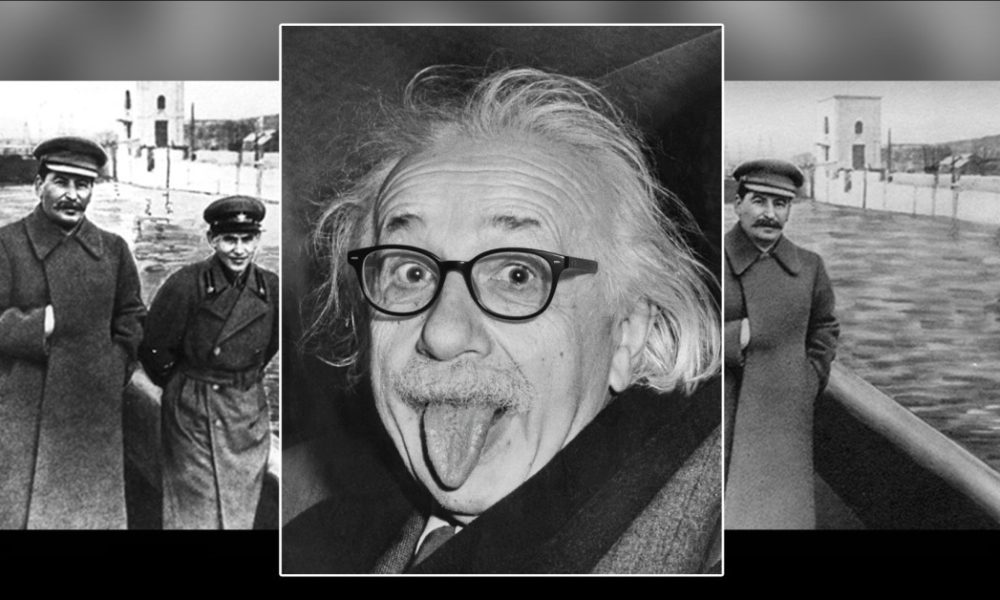

Conversational AI tools such as ChatGPT and Google Gemini are being used to create subtle deepfakes that change the narrative of an image rather than simply swapping faces. By altering gestures, props, and backgrounds, these edits can fool both AI detection systems and human viewers, making it harder to tell real content from manipulated content. Concern about deepfakes tends to focus on high-stakes abuses such as non-consensual AI porn and political interference, and it overlooks the more insidious, longer-lasting effects of subtle manipulation, which recalls historical precedents such as Stalin’s photographic edits. Australian researchers have released the MultiFakeVerse dataset, which contains 845,826 images with nuanced edits that change context and emotion. Their findings show that current detection systems struggle to identify these subtle changes, exposing a blind spot shared by human and AI perception. As AI’s role in image manipulation grows, better detection of these less obvious alterations becomes crucial to preventing ongoing misinformation.
