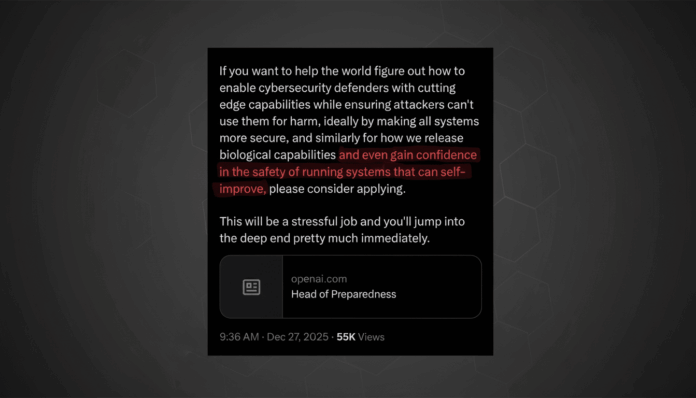

OpenAI is seeking a Head of Preparedness to oversee safety and risk management for its advanced AI systems. The senior executive role, which CEO Sam Altman has described as both “critical” and “stressful,” focuses on preventing misuse and on guiding model evaluation, product safety, and organizational preparedness. Key responsibilities include red-teaming for biosecurity risks, stress-testing models for social harms, developing metrics that define risk thresholds, and coordinating crisis interventions to mitigate AI-generated misinformation.

Recent wrongful death lawsuits underscore the urgency of robust AI safety measures. The selected candidate will streamline security operations, foster industry collaboration, and keep pace with evolving regulatory and standards work, including that of the UK’s AI Safety Institute and NIST’s frameworks. The San Francisco-based position pays $555,000 plus equity and demands a blend of security expertise and product acumen. Success will be measured by fewer incidents, accurate refusal of high-risk requests, and transparent evaluations, reinforcing accountability as AI capabilities advance.
