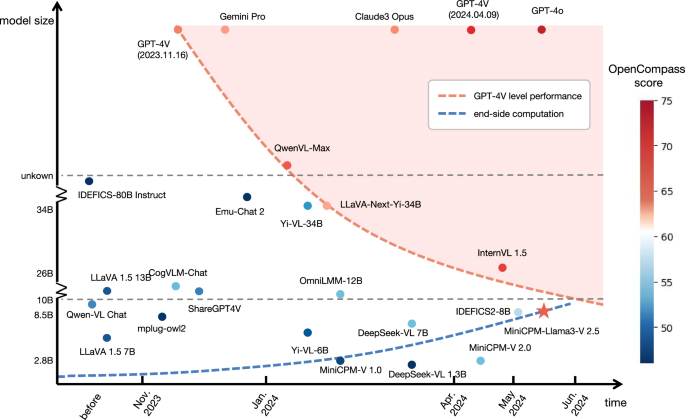

MiniCPM-V is a multimodal model that combines visual processing with language modeling and is designed for efficient high-resolution image encoding and multimodal interaction. It consists of three primary modules: a visual encoder that uses an adaptive encoding strategy, a compression layer based on perceiver resampling, and a large language model (LLM) for text generation. To balance computational cost against encoding quality, MiniCPM-V partitions each image into slices, which significantly reduces the number of visual tokens while maintaining encoding performance. Training proceeds in three phases (pre-training, supervised fine-tuning, and alignment), and the resulting model supports multimodal comprehension in over 30 languages. In evaluations across a range of benchmarks, MiniCPM-V is reported to outperform both open-source and proprietary models despite having fewer parameters. Memory optimization and neural processing unit (NPU) acceleration also make deployment on edge devices feasible, which makes MiniCPM-V more accessible for real-world applications.
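To make the slicing idea concrete, here is a minimal Python sketch of one way a slice grid could be chosen for an image. It is an illustration under stated assumptions, not the model's actual implementation: the function name `choose_slice_grid`, the encoder input size of 448 pixels, the cap of 9 slices, and the log-aspect-ratio scoring rule are all hypothetical choices made for this example. The idea is to estimate how many encoder-sized slices the image area calls for, then pick the rows-by-columns layout whose shape best matches the image's aspect ratio.

```python
import math


def choose_slice_grid(width, height, enc_size=448, max_slices=9):
    """Pick a (cols, rows) slice grid for an image.

    All parameters and the scoring rule are assumptions for illustration:
    enc_size is a hypothetical encoder input resolution, max_slices a
    hypothetical cap on the number of slices.
    """
    # Estimate the slice count from the ratio of image area to encoder area.
    ideal = math.ceil((width * height) / (enc_size * enc_size))
    ideal = min(max_slices, max(1, ideal))

    log_ratio = math.log(width / height)
    best, best_err = None, float("inf")

    # Consider slice counts near the ideal so that awkward counts
    # (for example primes) still have a reasonable grid available.
    for n in (ideal - 1, ideal, ideal + 1):
        if n < 1 or n > max_slices:
            continue
        for cols in range(1, n + 1):
            if n % cols:
                continue  # only exact factorizations n = cols * rows
            rows = n // cols
            # Smaller error means the grid shape is closer to the image shape.
            err = abs(log_ratio - math.log(cols / rows))
            if err < best_err:
                best, best_err = (cols, rows), err
    return best


if __name__ == "__main__":
    for size in [(448, 448), (1344, 448), (896, 896)]:
        print(size, "->", choose_slice_grid(*size))
```

Under these assumptions, a wide 1344x448 image is split into three side-by-side slices, while a square 896x896 image gets a 2x2 grid. Each slice would then be encoded separately and compressed by the resampling layer into a fixed number of visual tokens, which is why the total token count grows with the number of slices and not with the raw pixel count.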
