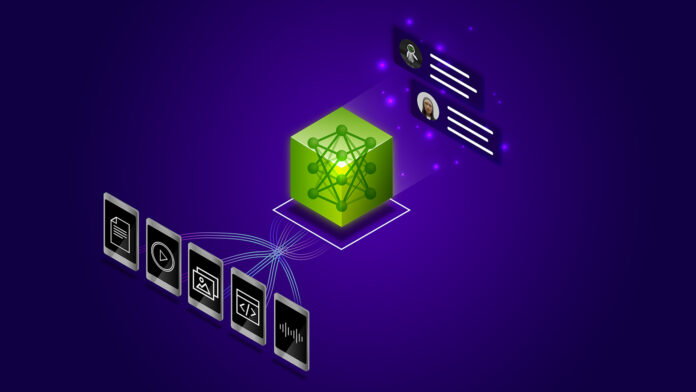

Converting large text libraries into numerical embeddings is crucial for generative AI, powering technologies like semantic search and recommendation systems. Generating embeddings for large datasets is a scalability problem, and Apache Spark is well suited to massive data processing jobs of this kind. Embedding generation is also computationally intensive, so it calls for accelerated computing, which typically involves complex GPU management.

This solution shows how to deploy a distributed Spark application with serverless GPUs on Azure Container Apps (ACA). The architecture includes a Spark front-end controller for job orchestration and multiple GPU-accelerated worker applications for data processing. Azure Files provides a shared storage layer for data access.
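To make the worker side of this architecture more concrete, here is a minimal sketch of the per-partition batching pattern a GPU worker might apply to its share of the data. It is not taken from the original solution: `fake_embed_batch`, `embed_partition`, `EMBEDDING_DIM`, and the batch size are hypothetical names and values, and the hash-based function only stands in for a real GPU embedding model so the example runs with the Python standard library alone.

```python
import hashlib
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

EMBEDDING_DIM = 8  # hypothetical; real embedding models use far more dimensions


def fake_embed_batch(texts: List[str]) -> List[List[float]]:
    """Placeholder for a GPU model call: returns deterministic hash-based vectors."""
    vectors = []
    for text in texts:
        digest = hashlib.sha256(text.encode("utf-8")).digest()
        # Scale the first EMBEDDING_DIM bytes of the digest into [0, 1].
        vectors.append([b / 255.0 for b in digest[:EMBEDDING_DIM]])
    return vectors


def embed_partition(
    rows: Iterable[Tuple[int, str]], batch_size: int = 4
) -> Iterator[Tuple[int, List[float]]]:
    """Embed one partition of (doc_id, text) rows in fixed-size batches."""
    iterator = iter(rows)
    while True:
        # Pull up to batch_size rows so the model sees one batch per call.
        batch = list(islice(iterator, batch_size))
        if not batch:
            break
        ids = [doc_id for doc_id, _ in batch]
        vectors = fake_embed_batch([text for _, text in batch])
        yield from zip(ids, vectors)


if __name__ == "__main__":
    docs = [(i, f"document {i}") for i in range(10)]
    for doc_id, vector in embed_partition(docs, batch_size=4):
        print(doc_id, [round(x, 3) for x in vector])
```

Because `embed_partition` takes an iterator of rows and yields results lazily, a function of this shape could be passed to PySpark's `RDD.mapPartitions`, so that each worker batches its own partition and the model is called once per batch rather than once per row. How the actual solution wires the model into Spark is not described here, so treat this only as an illustration of the pattern.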

The streamlined setup makes it easier to develop and test distributed applications. With this serverless model, organizations can achieve high throughput and low latency without managing GPU infrastructure themselves. The architecture can also be extended with NVIDIA NIM microservices for better performance. For more, see the NVIDIA/GenerativeAIExamples GitHub repository.
