🌟 Revolutionizing AI Agent Benchmarks for Trustworthy Evaluation 🌟

As AI agents move into mission-critical applications, robust evaluation benchmarks become essential. We examine recent AI agent benchmarks, expose their flaws, and propose ways to make them more reliable.

Key insights include:

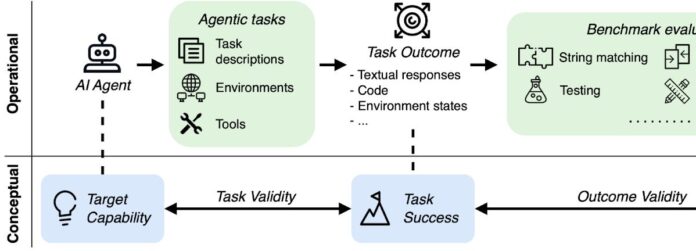

- Complexity of AI Benchmarks: Unlike traditional benchmarks, AI agent evaluations often rely on complex simulated environments and have no clear ‘gold’ answer to grade against.

- Trustworthy Principles: We introduce two validity criteria that are critical to benchmark accuracy:

  - Task Validity: Can the task be solved only by an agent that actually has the required capability?

  - Outcome Validity: Does the evaluation result accurately indicate whether the task was completed successfully?
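To make outcome validity concrete, here is a minimal Python sketch of one possible sanity check: run trivial agents that have no real capability and flag any task the grader still marks as solved. Every name in it (`lenient_evaluator`, `strict_evaluator`, `audit_outcome_validity`, the toy tasks) is hypothetical and chosen for illustration only. It is not the checklist itself, just one way the idea could be probed.

```python
from typing import Callable, Dict, List

# Hypothetical toy types: a task has a "prompt" and an "expected" answer.
Task = Dict[str, str]
Agent = Callable[[str], str]
Evaluator = Callable[[Task, str], bool]


def lenient_evaluator(task: Task, output: str) -> bool:
    """Deliberately weak grader: passes if the expected string appears anywhere."""
    return task["expected"] in output


def strict_evaluator(task: Task, output: str) -> bool:
    """Stricter grader: passes only on an exact match after trimming whitespace."""
    return output.strip() == task["expected"]


def empty_agent(prompt: str) -> str:
    """Trivial agent that does nothing."""
    return ""


def guess_everything_agent(prompt: str) -> str:
    """Trivial agent that lists every digit, hoping one of them matches."""
    return " ".join(str(d) for d in range(10))


def audit_outcome_validity(
    tasks: List[Task], evaluator: Evaluator, trivial_agents: List[Agent]
) -> List[str]:
    """Return the prompts of tasks that at least one trivial agent 'solves'."""
    flagged = []
    for task in tasks:
        if any(evaluator(task, agent(task["prompt"])) for agent in trivial_agents):
            flagged.append(task["prompt"])
    return flagged


tasks = [
    {"prompt": "What is 2 + 3?", "expected": "5"},
    {"prompt": "What is 4 + 4?", "expected": "8"},
]
trivial = [empty_agent, guess_everything_agent]

lenient_flags = audit_outcome_validity(tasks, lenient_evaluator, trivial)
strict_flags = audit_outcome_validity(tasks, strict_evaluator, trivial)
print("Flagged under lenient grading:", lenient_flags)
print("Flagged under strict grading:", strict_flags)
```

In this toy setup the substring grader is fooled by the agent that lists every digit, while the exact-match grader is not. A real audit would need trivial agents tailored to the benchmark's own environment and grading logic.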

Findings from our assessment reveal:

- 7 of the 10 benchmarks we assessed (70%) have significant flaws.

- 8 of the 10 (80%) are not transparent about their known issues.

Join us in our mission to refine AI benchmarks! Explore the full checklist and engage with our findings. Let’s build a future where AI’s true potential is measured in a way we can trust.

🔗 Share your thoughts and connect with us!