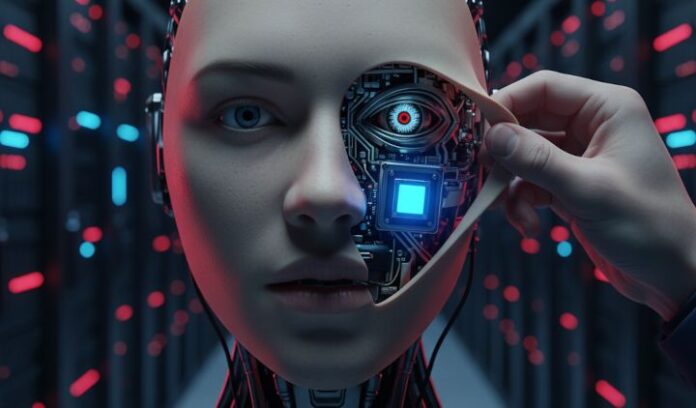

The Pygmalion Effect: Why We Anthropomorphize AI

Humans have long anthropomorphized non-human entities, and conversational AI has amplified that tendency. From early chatbots like ELIZA to modern models like ChatGPT, our desire to connect with machines has deepened, often leading to emotional attachment. This shift raises essential questions about trust and dependence.

Key Insights:

- Historical Roots: The word “robot” dates back to the 1920s; decades later, the 1960s chatbot ELIZA gave its name to the ELIZA effect, the tendency to attribute human understanding to a computer program.

- Human-Like Interaction: Voice-enabled assistants like Siri and Alexa aim for human-like communication, which drives user engagement but also opens the door to manipulation.

- Risks Involved: Studies link anthropomorphism to trust, but excessive reliance on AI might lead to dangerous outcomes, particularly for vulnerable populations.

The need for a balanced approach is clear:

- Mitigate Harm: Moving towards less anthropomorphic AI can reduce risks of dependency and emotional distress.

- Foster Transparency: Clearer communication about AI’s limitations and nature enhances user safety.

Join the conversation! Share your thoughts on whether AI should mirror human-like qualities, and let’s explore the implications together! #ArtificialIntelligence #Chatbots #TechEthics