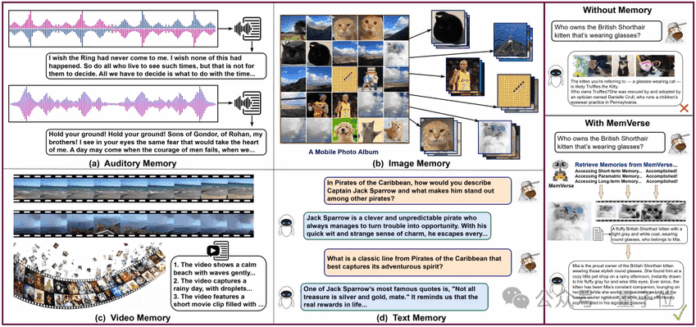

Shanghai Artificial Intelligence Laboratory has introduced MemVerse, billed as the first universal multimodal memory framework for AI agents. It targets two limitations of existing agent memory: “modality isolation” and slow responses. MemVerse lets agents draw on cross-modal memory spanning images, audio, and video, providing lifelong memory described as “growable, internalizable, second-level response.” Traditional AI memory is primarily text-based and lacks an understanding of spatiotemporal logic and cross-modal semantics. MemVerse instead uses a three-layer bionic architecture: a central coordinator for scheduling, short-term memory that keeps conversations coherent, and long-term memory organized into core, episodic, and semantic memories, a design that reduces hallucinations. Its “parameterized distillation” technique speeds up retrieval by more than 10x. On the ScienceQA benchmark, GPT-4o-mini rises from 76.82 to 85.48 with MemVerse, a gain of 8.66 points. Memory compression cuts token usage by 90%, and MemVerse is now fully open-source.
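To make the three-layer layout concrete, here is a minimal Python sketch of a coordinator that sits in front of a short-term buffer and a long-term store split into core, episodic, and semantic buckets. Every name in it (`MemoryItem`, `ShortTermMemory`, `LongTermMemory`, `Coordinator`, `build_context`) is hypothetical and is not taken from the MemVerse codebase. The keyword-overlap search is only a stand-in for whatever retrieval MemVerse actually uses, and media are represented by text descriptions.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical names throughout; this is an illustration, not the MemVerse API.
LONG_TERM_KINDS = ("core", "episodic", "semantic")


@dataclass
class MemoryItem:
    kind: str      # one of LONG_TERM_KINDS
    modality: str  # e.g. "text", "image", "audio", "video"
    content: str   # text description standing in for the raw media


class ShortTermMemory:
    """Keeps only the most recent conversation turns."""

    def __init__(self, max_turns=4):
        self.turns = deque(maxlen=max_turns)  # oldest turns drop off automatically

    def add(self, turn):
        self.turns.append(turn)


class LongTermMemory:
    """Stores items in separate core / episodic / semantic buckets."""

    def __init__(self):
        self.items = {kind: [] for kind in LONG_TERM_KINDS}

    def store(self, item):
        if item.kind not in self.items:
            raise ValueError(f"unknown memory kind: {item.kind}")
        self.items[item.kind].append(item)

    def search(self, query, limit=3):
        # Assumption: naive word overlap replaces real cross-modal retrieval.
        words = set(query.lower().split())
        scored = []
        for bucket in self.items.values():
            for item in bucket:
                overlap = len(words & set(item.content.lower().split()))
                if overlap:
                    scored.append((overlap, item))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [item for _, item in scored[:limit]]


class Coordinator:
    """Decides what each request reads from and writes to the two stores."""

    def __init__(self, short_term, long_term):
        self.short_term = short_term
        self.long_term = long_term

    def build_context(self, user_turn):
        context = {
            "recent_turns": list(self.short_term.turns),
            "recalled": self.long_term.search(user_turn),
        }
        self.short_term.add(user_turn)  # record the turn after building context
        return context
```

In this sketch the coordinator is the only component an agent would call, which mirrors the scheduling role the announcement assigns to it. The bounded `deque` illustrates why short-term memory stays small while the long-term buckets keep growing.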

Source link

Shanghai AI Lab Unveils MemVerse: Empowering Agents with a “Hippocampus” for Enhanced Multimodal Memory
