Unlocking AI Efficiency: The Power of Knowledge Distillation

DeepSeek, a then little-known Chinese AI company, made headlines in January 2025 with its R1 model, which drew attention for performance rivaling that of industry giants at a fraction of the reported cost. The fallout? Western tech stocks sold off sharply, and Nvidia suffered a record single-day loss in market value.

Key Insights:

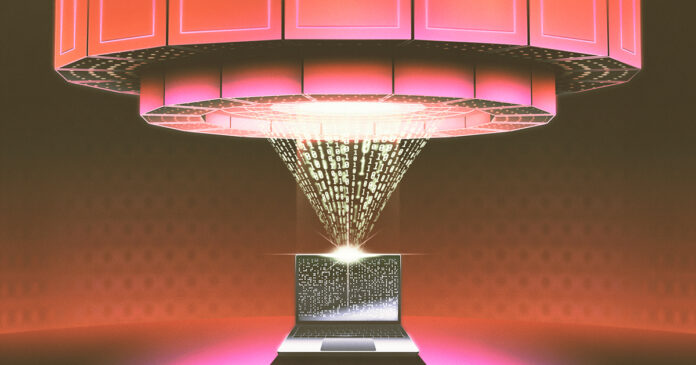

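- Innovation through Distillation: Touted as the future of AI efficiency, knowledge distillation trains a smaller "student" model to reproduce the outputs of a larger "teacher" model, keeping much of the teacher's capability at a much lower cost to run.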

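- Real-World Applications: Companies are turning to distillation for cost-effective AI solutions. A well-known example is Hugging Face's DistilBERT, a compressed version of Google's BERT that is 40% smaller and 60% faster while retaining about 97% of its language-understanding performance.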

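- Foundation of Distillation: AI pioneer Geoffrey Hinton, with Oriol Vinyals and Jeff Dean, popularized the technique in the 2015 paper "Distilling the Knowledge in a Neural Network," building on earlier model-compression work. Their key idea is to train the student on the teacher's temperature-softened output probabilities ("soft targets"), which carry more information than hard labels alone.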
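
To make the "soft targets" idea concrete, here is a minimal Python sketch of the classic temperature-scaled distillation loss for a single classification example. It assumes raw logits from a teacher and a student; the function names and the defaults `temperature=2.0` and `alpha=0.5` are illustrative choices, not values used by DeepSeek, DistilBERT, or any other specific system. The soft term uses KL divergence, which differs from the soft-target cross-entropy in Hinton et al.'s paper only by a constant (the teacher's entropy), and it is scaled by T^2 as that paper recommends so the soft and hard terms stay comparable as the temperature changes.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax: higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms where p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Illustrative Hinton-style loss (hypothetical helper, not a library API):
    alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(label, student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Soft term: match the teacher's softened distribution, rescaled by T^2.
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    # Hard term: ordinary cross-entropy against the ground-truth label at T = 1.
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard

# Usage with made-up logits for a 3-class problem whose true label is class 0.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.3]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers the wrong class
print(distillation_loss(close_student, teacher, true_label=0))
print(distillation_loss(far_student, teacher, true_label=0))
```

The student that mimics the teacher receives the lower loss. Raising `temperature` flattens the teacher's distribution, so the student also learns how the teacher ranks the incorrect classes, which is the extra signal soft targets provide.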

Despite allegations that DeepSeek improperly distilled knowledge from OpenAI's models, distillation itself is standard practice, commonly used to build smaller, cheaper models that retain much of a larger model's performance.

🌟 Engage with Us! Share your thoughts on how distillation could shape the future of AI! #AI #TechInnovation #KnowledgeDistillation