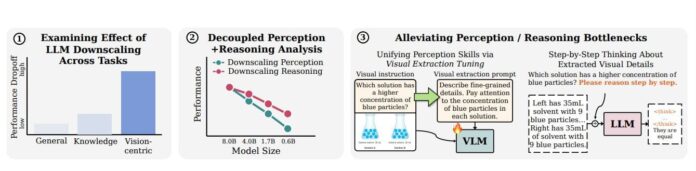

Recent research by Mark Endo and Serena Yeung-Levy examines efficiency in multimodal learning, where a model must combine vision and language. Their study finds that shrinking the large language model (LLM) inside a multimodal system degrades visual processing considerably more than it degrades language skills. To counter this, they introduce Extract+Think, a two-stage approach in which the model is first trained to extract the visual details relevant to a task and only then reasons over them. The method improves performance without requiring additional visual data. According to the findings, visual extraction tuning relieves the bottleneck created by downscaling, allowing smaller multimodal models to outperform larger counterparts. The study is a step toward understanding how model size affects multimodal capabilities. Future research will explore further downscaling effects and compare the impact of shrinking the visual component versus the language component. More details are available in the paper on arXiv.
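To make the two-stage idea concrete, here is a minimal sketch of an extract-then-reason pipeline. It is not the authors' implementation: the `extractor` and `reasoner` callables, the prompt wording, and the choice to pass only text to the second stage are all assumptions made for illustration.

```python
from typing import Any, Callable

# Hypothetical interfaces (assumptions, not from the study):
#   extractor(image, prompt) -> text describing relevant visual details
#   reasoner(prompt)         -> text containing the final answer
Extractor = Callable[[Any, str], str]
Reasoner = Callable[[str], str]

# Illustrative prompt templates; the wording is invented for this sketch.
EXTRACT_PROMPT = "List the visual details needed to answer: {question}"
THINK_PROMPT = (
    "Visual details: {details}\n"
    "Question: {question}\n"
    "Reason step by step, then give the answer."
)


def extract_then_think(
    extractor: Extractor, reasoner: Reasoner, image: Any, question: str
) -> str:
    """Run the two stages in order: extract visual details, then reason."""
    # Stage 1: turn the image into a textual list of task-relevant details.
    details = extractor(image, EXTRACT_PROMPT.format(question=question))
    # Stage 2: reason over the extracted details (text only in this sketch).
    return reasoner(THINK_PROMPT.format(details=details, question=question))


if __name__ == "__main__":
    # Stub models standing in for real ones, so the sketch runs as-is.
    def stub_extractor(image: Any, prompt: str) -> str:
        return "a red cube to the left of a blue sphere"

    def stub_reasoner(prompt: str) -> str:
        return "The cube is red."

    print(extract_then_think(stub_extractor, stub_reasoner, None, "What color is the cube?"))
```

Separating the stages this way keeps perception and reasoning independently swappable, which is one plausible reading of why tuning the extraction step alone could help a small model.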
