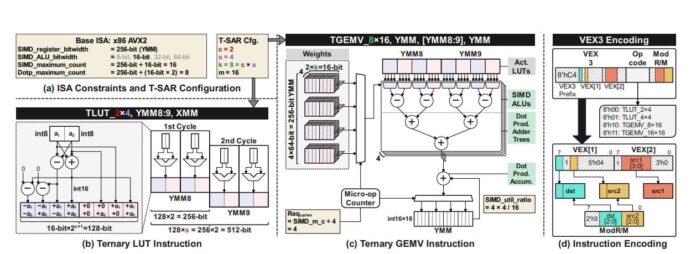

As demand for large language models (LLMs) grows, running them on resource-constrained edge devices that rely on CPUs remains a challenge. Researchers Hyunwoo Oh, KyungIn Nam, and Rajat Bhattacharjya introduce T-SAR, a framework for scalable ternary LLM inference on CPUs. T-SAR generates lookup tables (LUTs) dynamically inside the CPU's SIMD registers, which reduces slow, power-hungry memory accesses. The authors report latency improvements of up to 24.5 times and substantially better energy efficiency than existing platforms. The approach retains the resource savings of ternary quantization while maintaining accuracy.

T-MAC, a related CPU-focused method, also relies on efficient table lookups and minimizes memory access. It achieves speedups of more than 2.5 times over conventional CPU implementations. By overcoming memory bottlenecks and making full use of data-level parallelism, T-SAR could bring efficient LLM deployment to a broader range of devices. The full paper describing T-SAR is available on arXiv.
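The lookup-table idea can be illustrated with a small sketch. Ternary weights take only the values -1, 0, and +1. As a result, the partial dot product between a group of G activations and any G weights has only 3^G possible values. Those values can be precomputed once per activation vector. Each group then costs a single lookup instead of G multiply-adds.

The Python below is a scalar illustration of that general technique under stated assumptions. It is not T-SAR's or T-MAC's code. The group size `GROUP = 2`, the per-row absmean quantizer (borrowed from BitNet b1.58), and all function names are hypothetical. A real kernel would hold the tables in SIMD registers rather than Python lists. A plain multiply-accumulate reference is included to check the result.

```python
import itertools
from typing import List, Sequence, Tuple

# Hypothetical group size: how many ternary weights share one table lookup.
# A group of G weights has 3**G possible patterns, so G=2 gives a 9-entry table.
GROUP = 2


def ternary_quantize(row: Sequence[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Absmean ternary quantization (BitNet b1.58-style, per-row scale here):
    divide by mean |w|, round to nearest (Python round, ties to even),
    then clip to {-1, 0, +1}."""
    scale = sum(abs(w) for w in row) / len(row) + eps
    q = [max(-1, min(1, round(w / scale))) for w in row]
    return q, scale


def pattern_index(trits: Sequence[int]) -> int:
    """Encode a ternary pattern as a base-3 number (digit = trit + 1)."""
    idx = 0
    for t in trits:
        idx = idx * 3 + (t + 1)
    return idx


def build_lut(acts: Sequence[float]) -> List[float]:
    """Precompute the partial dot product of one activation group
    with every possible ternary weight pattern."""
    lut = [0.0] * (3 ** len(acts))
    for trits in itertools.product((-1, 0, 1), repeat=len(acts)):
        lut[pattern_index(trits)] = sum(t * a for t, a in zip(trits, acts))
    return lut


def lut_matvec(q_rows: Sequence[Sequence[int]], scales: Sequence[float],
               acts: Sequence[float], group: int = GROUP) -> List[float]:
    """Ternary matrix-vector product using table lookups instead of multiplies."""
    if len(acts) % group:
        raise ValueError("activation length must be a multiple of the group size")
    starts = range(0, len(acts), group)
    # One table per activation group, built once and shared by every weight row.
    luts = [build_lut(acts[g:g + group]) for g in starts]
    out = []
    for q, s in zip(q_rows, scales):
        acc = 0.0
        for lut, g in zip(luts, starts):
            acc += lut[pattern_index(q[g:g + group])]
        out.append(acc * s)  # apply the per-row scale once at the end
    return out


def naive_matvec(q_rows: Sequence[Sequence[int]], scales: Sequence[float],
                 acts: Sequence[float]) -> List[float]:
    """Reference: ordinary multiply-accumulate over the ternary weights."""
    return [s * sum(t * a for t, a in zip(q, acts)) for q, s in zip(q_rows, scales)]


if __name__ == "__main__":
    W = [[0.9, -0.1, -1.2, 0.4], [0.05, 0.7, -0.6, -0.02]]
    acts = [3, -1, 2, 5]
    quantized = [ternary_quantize(row) for row in W]
    q_rows = [q for q, _ in quantized]
    scales = [s for _, s in quantized]
    print(q_rows)  # [[1, 0, -1, 1], [0, 1, -1, 0]]
    print(lut_matvec(q_rows, scales, acts))
    print(naive_matvec(q_rows, scales, acts))
```

Running the script prints the quantized rows, followed by two matching result vectors. The tables are built once per activation vector and shared by every weight row, which is where the savings come from. Larger groups mean fewer lookups, but the tables grow as 3^G entries. That growth is one plausible reason to keep groups small when the tables must fit in registers.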
