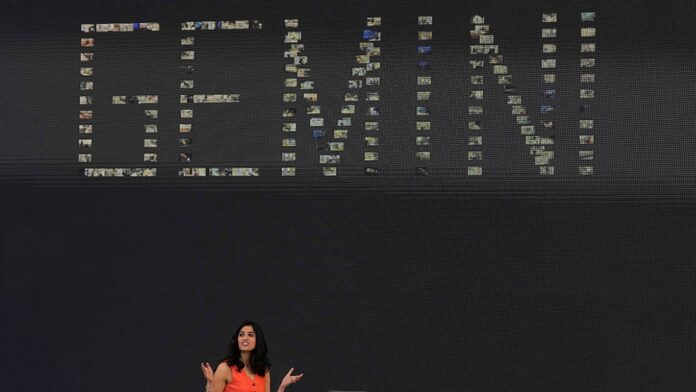

Artificial intelligence (AI) is increasingly used for everyday math tasks, but a recent study reports concerning accuracy levels. The Omni Research on Calculation in AI (ORCA) study indicates that AI chatbots have a 40% chance of giving an incorrect answer on basic calculations. Five leading models were tested on 500 prompts. Google's Gemini 2.5 topped the leaderboard at 63% accuracy, closely followed by Grok-4 at 62.8%. ChatGPT-5 (49.4%), Claude 4.5 (45.2%), and DeepSeek V3.2 (52%) lag behind.

Performance varies by subject: Gemini leads in math and conversions at 83%, while accuracy in physics is a dismal 35.8%. DeepSeek scored just 10.6% in biology and chemistry. Experts advise users to double-check AI outputs with a calculator or another trusted source, particularly for critical calculations, because AI models struggle with computation and precision. Understanding these limitations is crucial to deciding how far to trust AI tools for mathematical tasks.
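
The double-checking advice can be made concrete with a few lines of Python. The sketch below is only an illustration: the function name `matches_reference`, the tolerance, and the unit-conversion example are assumptions made here and do not come from the ORCA study. It recomputes the answer locally and compares it with the number a chatbot reported.

```python
import math


def matches_reference(claimed: float, reference: float, rel_tol: float = 1e-6) -> bool:
    """Return True if a chatbot's claimed number agrees with a locally computed one.

    Hypothetical helper; the relative tolerance is an arbitrary choice
    and should be tightened or loosened to suit the calculation.
    """
    return math.isclose(claimed, reference, rel_tol=rel_tol)


# Hypothetical prompt: "Convert 72 km/h to m/s."
# Recompute the conversion locally instead of trusting the chatbot's reply.
reference = 72 * 1000 / 3600  # metres per second

print(matches_reference(20.0, reference))  # a reply of 20.0 m/s agrees
print(matches_reference(25.9, reference))  # a reply of 25.9 m/s does not
```

For answers that have been rounded, a looser tolerance (or `abs_tol`) avoids flagging a correct reply as wrong.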
