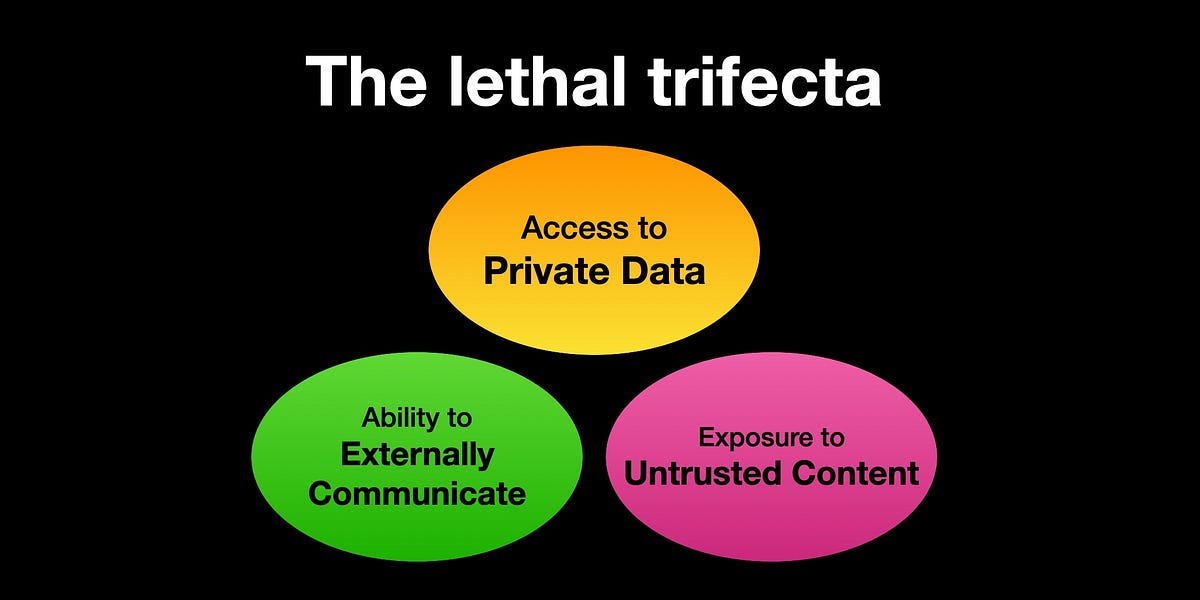

This newsletter discusses critical security considerations for AI agents, focusing on prompt injection vulnerabilities. It introduces the “lethal trifecta”: an agent that combines access to private data, exposure to untrusted content, and the ability to communicate externally can be manipulated into exfiltrating that data. Multiple examples of prompt injection exploits across various platforms illustrate this risk and underline that large language models (LLMs) cannot reliably distinguish trusted instructions from untrusted ones.
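The three conditions of the trifecta can be expressed as a simple configuration check. The following is a minimal sketch, not something taken from the newsletter: the class `AgentCapabilities`, its field names, and the two example agents are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical description of what an agent deployment is allowed to do."""
    reads_private_data: bool
    sees_untrusted_content: bool
    can_communicate_externally: bool


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """Return True only when all three risky capabilities are combined."""
    return (
        caps.reads_private_data
        and caps.sees_untrusted_content
        and caps.can_communicate_externally
    )


# Illustrative deployments (assumed, not from the source).
email_assistant = AgentCapabilities(
    reads_private_data=True,
    sees_untrusted_content=True,
    can_communicate_externally=True,
)
offline_summarizer = AgentCapabilities(
    reads_private_data=True,
    sees_untrusted_content=True,
    can_communicate_externally=False,
)

print(has_lethal_trifecta(email_assistant))
print(has_lethal_trifecta(offline_summarizer))
```

Under this framing, removing any one of the three capabilities breaks the exfiltration path, which is why the second agent is not flagged.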

The newsletter also reviews a paper outlining design patterns for securing LLM agents against these attacks. The patterns share one idea: once an agent has been exposed to untrusted content, its capabilities are limited so that injected instructions cannot trigger unintended actions. Google’s approach to AI security acknowledges similar risks while advocating for robust user control and observable agent actions. Despite these advances, absolute security remains elusive, so integrating AI tools calls for careful consideration. The newsletter concludes with various papers and insights that collectively stress the importance of controlling AI behavior and mitigating risks effectively.
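The idea of limiting an agent's capabilities after it has seen untrusted content can be sketched in a few lines. This is an illustrative assumption rather than the implementation from the reviewed paper or from Google: the `AgentSession` class, the tool names, and the action log are all hypothetical. The log is included only to hint at what "observable agent actions" might look like.

```python
class ToolBlocked(Exception):
    """Raised when a restricted tool is requested in a tainted session."""


class AgentSession:
    # Hypothetical tool names that could send data outside the system.
    RESTRICTED_AFTER_TAINT = {"send_email", "http_post"}

    def __init__(self) -> None:
        self.tainted = False
        self.log: list[tuple[str, str]] = []  # simple record of agent actions

    def ingest(self, content: str, trusted: bool) -> None:
        """Feed content to the agent; untrusted content taints the session."""
        if not trusted:
            self.tainted = True
        self.log.append(("ingest", "trusted" if trusted else "untrusted"))

    def call_tool(self, name: str) -> str:
        """Run a tool unless the session is tainted and the tool is restricted."""
        if self.tainted and name in self.RESTRICTED_AFTER_TAINT:
            self.log.append(("blocked", name))
            raise ToolBlocked(f"{name} is disabled after untrusted input")
        self.log.append(("call", name))
        return f"{name}: ok"  # placeholder for a real tool invocation


session = AgentSession()
session.call_tool("send_email")               # allowed: nothing untrusted seen yet
session.ingest("text fetched from the web", trusted=False)
try:
    session.call_tool("send_email")           # now refused
except ToolBlocked as err:
    print(err)
print(session.call_tool("search_notes"))      # unrestricted tools still work
```

The restriction in this sketch is enforced in ordinary code outside the model, so it does not depend on the LLM correctly telling trusted instructions from injected ones.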
