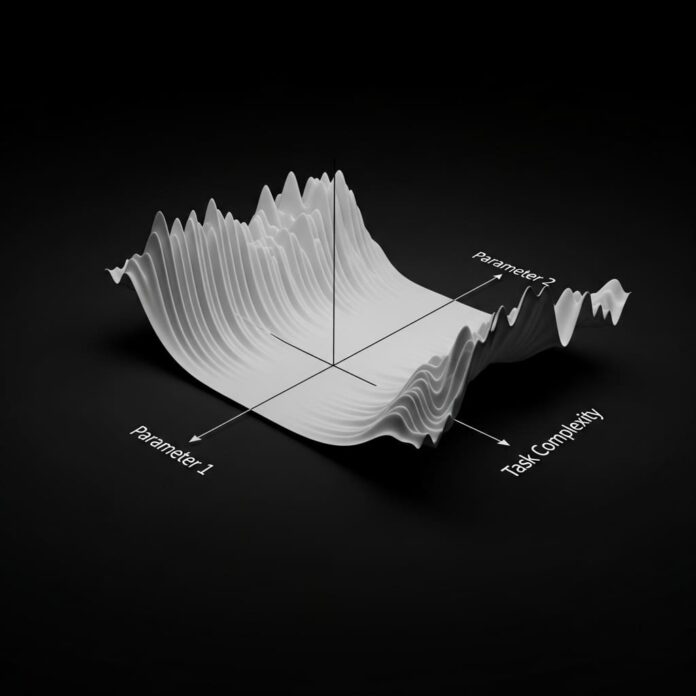

Researchers are working to understand why large language models (LLMs) make errors on deterministic tasks such as arithmetic. A study by Suvrat Raju and Praneeth Netrapalli proposes a model of these errors: an incorrect prediction occurs when small errors in the attention mechanism accumulate until they cross a critical threshold. From this picture, the authors derive a two-parameter relationship between task complexity and accuracy. The two parameters can be interpreted as an inherent noise rate and the number of plausible erroneous tokens the model might predict.
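
The threshold-crossing idea can be illustrated with a toy random-walk simulation. This is a minimal sketch under illustrative assumptions, not the authors' derivation or formula. The functions `toy_accuracy` and `simulate_accuracy`, the per-step Gaussian noise `sigma`, the count `q` of competing wrong tokens, and the fixed `threshold` are all hypothetical stand-ins for the noise rate and plausible-erroneous-token count described above.

```python
import math
import random


def toy_accuracy(n_steps: int, sigma: float, q: int, threshold: float = 1.0) -> float:
    """Closed-form accuracy of a hypothetical toy error-accumulation model.

    Toy assumptions (not the paper's derivation): the task takes `n_steps`
    steps; at each step, each of `q` plausible wrong tokens picks up
    independent Gaussian noise with standard deviation `sigma`; the answer
    is correct only if no wrong token's accumulated noise reaches
    `threshold` in magnitude.
    """
    spread = sigma * math.sqrt(n_steps)  # std of a sum of n_steps i.i.d. N(0, sigma^2)
    p_one_ok = math.erf(threshold / (spread * math.sqrt(2.0)))  # P(|N(0, spread^2)| < threshold)
    return p_one_ok ** q  # all q wrong tokens must stay below the threshold


def simulate_accuracy(n_steps: int, sigma: float, q: int, threshold: float = 1.0,
                      trials: int = 4000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same toy model, accumulating noise step by step."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        ok = True
        for _wrong_token in range(q):
            drift = sum(rng.gauss(0.0, sigma) for _step in range(n_steps))
            if abs(drift) >= threshold:  # accumulated error crossed the threshold
                ok = False
                break
        correct += ok
    return correct / trials


if __name__ == "__main__":
    # Compare closed form and simulation as the task gets longer.
    for n in (1, 4, 16, 64):
        print(n, round(toy_accuracy(n, sigma=0.2, q=3), 3),
              simulate_accuracy(n, sigma=0.2, q=3))
```

In this toy, the accumulated noise grows like `sigma * sqrt(n_steps)`, so accuracy stays close to 1 for short tasks and falls off once that spread approaches the threshold; raising `q` lowers accuracy at every length.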

Tests with Gemini 2.5 Flash, Gemini 2.5 Pro, and DeepSeek R1 showed close agreement between predicted and observed accuracy across a range of tasks. The findings challenge the notion that such errors signal a “collapse of reasoning,” offering instead a quantitative formula that predicts the error rate. The researchers also show how prompts can be constructed to reduce error rates. Beyond explaining LLM performance, the work points to practical ways of making models more reliable.
