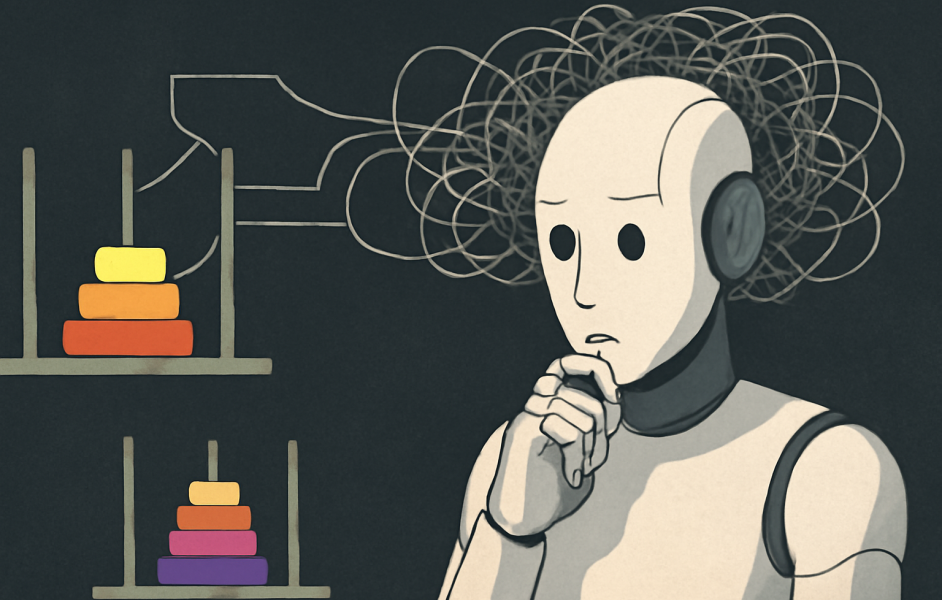

Recent advances in artificial intelligence, particularly Large Language Models (LLMs) and Large Reasoning Models (LRMs), have transformed how machines process and generate text. LLMs like GPT-3 excel at generating human-like responses, yet these models often overcomplicate simple tasks and falter on complex problems. A study by Apple examined this behavior using controlled puzzles of varying complexity to assess reasoning capabilities. It found that LLMs perform better on low-complexity tasks, while LRMs do better on medium-complexity ones. Both struggle on high-complexity tasks, where they often reduce their reasoning effort. This behavior may stem from training on diverse datasets, which could encourage verbosity on simple problems and hinder generalizable reasoning on complex ones. The findings point to a need for new evaluation methods and for improvements in AI reasoning, in particular systems that adapt to problems of varying complexity much as humans do. Overall, the study underscores the gap between simulated reasoning and genuine understanding in AI.
